SEARCH 202 - Implement a new search

Introduction

This course is aimed at frontend and backend developers and takes you through search creation and advanced configuration of Funnelback implementations.

-

A summary: A brief overview of what you will accomplish and learn throughout the exercise.

-

Exercise requirements: A list of requirements, such as files, that are needed to complete the exercise.

The exercises can be read as tutorials or completed interactively. In order to interactively complete the exercises you will need to set up the training searches, or use a training environment. -

Detailed step-by-step instructions: detailed step-by-step instructions to guide you through completing the exercise.

-

Some extended exercises are also provided. These exercises can be attempted if the standard exercises are completed early, or as some review exercises that can be attempted in your own time.

Prerequisites to completing the course:

-

HTML, JavaScript and CSS familiarity.

1. Basics

Every search provided by Funnelback contains a number of basic components that come together to form the search.

1.1. Search packages

A search package is used to provide search across one or more data sources. It packages up the set of data sources into a searchable bundle and provides the different search pages used to display the search results that are retrieved from the index.

| A search package is similar to a meta collection from older versions of Funnelback |

1.2. Data sources

A data source contains configuration and indexes relating to a set of information resources such as web pages, documents or social media posts.

Each data source implements a gatherer (or connector) that is responsible for connecting to the content repository and gathering the information to include in the index.

| A data source is similar to a non-meta collection from older versions of Funnelback. |

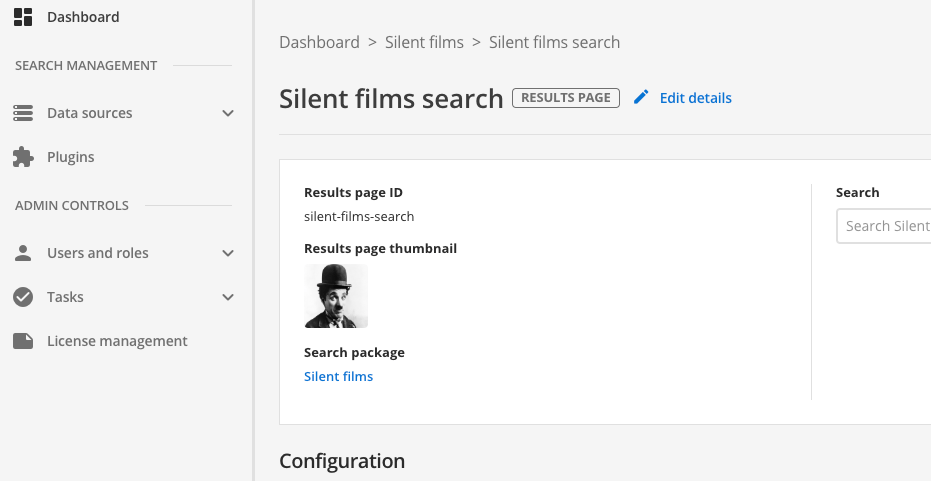

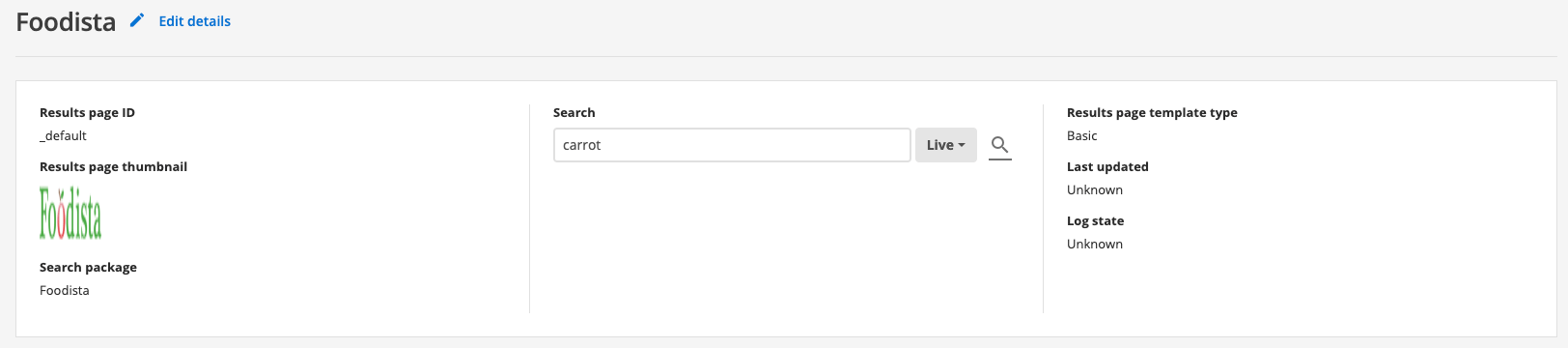

1.3. Results pages

A results page contains configuration and templating relating to a search results page provided by Funnelback.

Results page configuration includes:

-

Templating for the search results

-

Display settings that define properties about what is displayed by Funnelback (for example, what metadata fields to return, how to sort the search results).

-

Ranking settings that define how the order of search results will be determined.

-

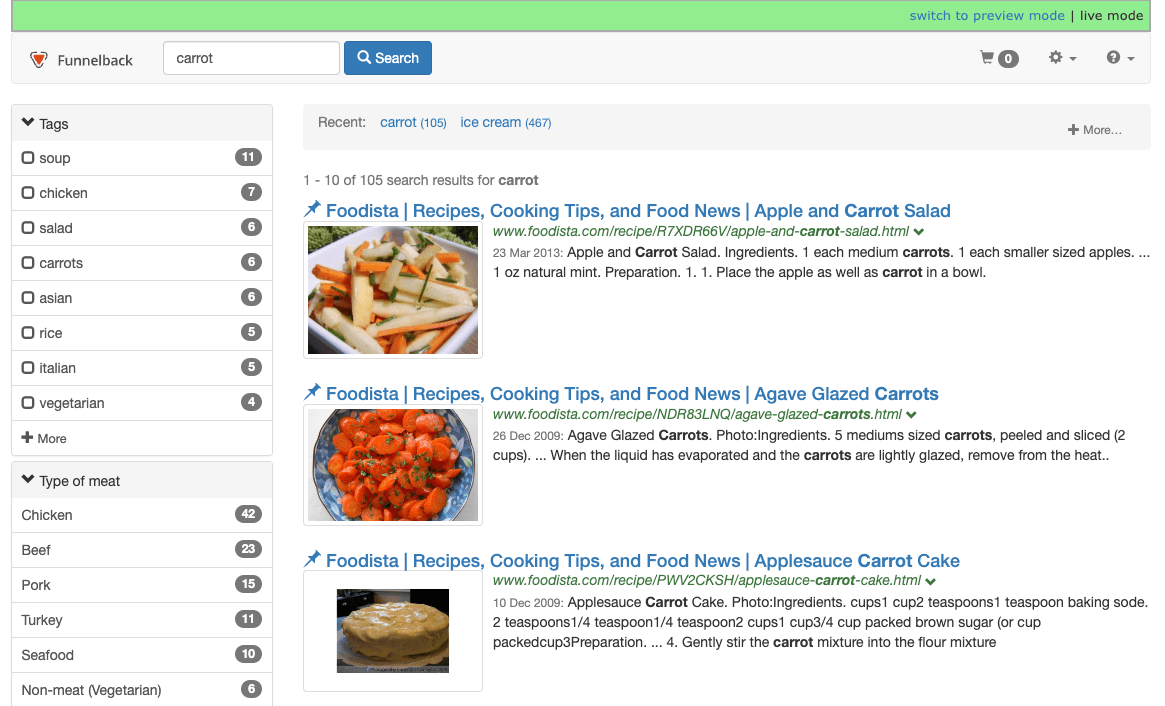

Feature configuration such as faceted navigation, auto-completion and extra searches that will be used to supplement the search results.

Reporting such as usage analytics, content and accessibility auditing is also produced for each search page

| A results page is similar to a service-enabled profile from older versions of Funnelback. |

2. Create a basic web search

A web search is probably the simplest search that you will create with Funnelback. It is used to provide a search for one or more websites.

In this exercise you will create a very basic search of a couple of websites containing the full text of some online books.

At a minimum, setting up a basic web search requires a search package containing a data source and a results page.

2.1. Step 1: Create a search package for your search

A search package is required for every search that you create. The search package bundles together all the data sources into a single index that you can then search across using results pages.

Tutorial: Create a search package

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

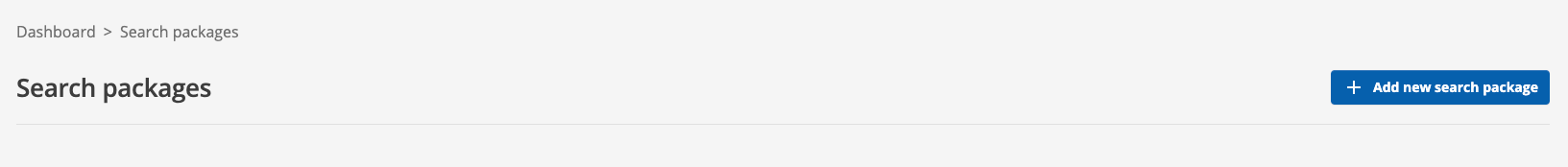

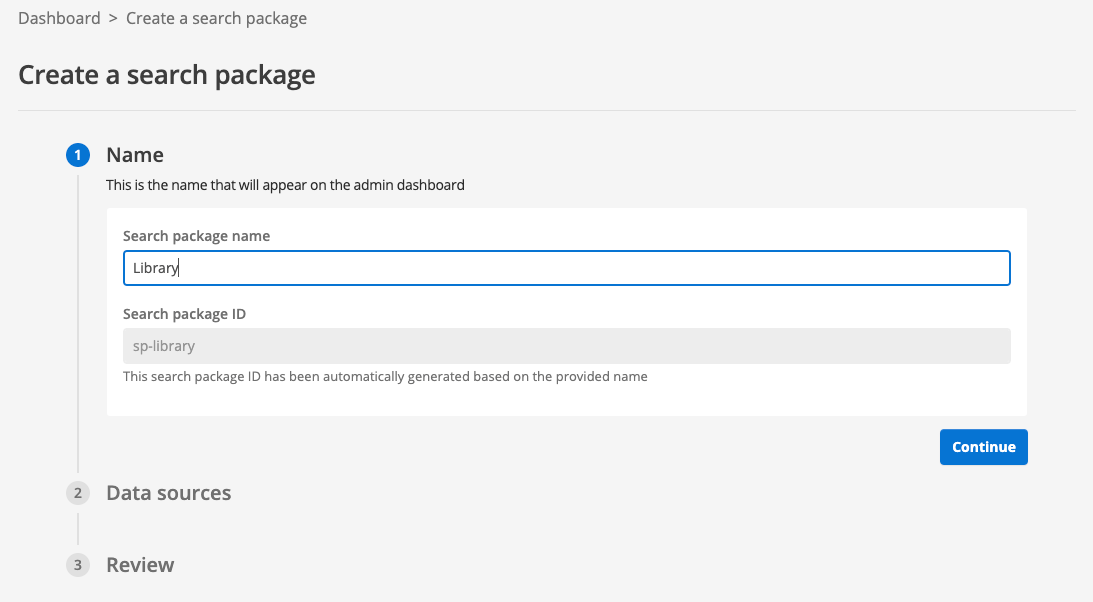

Click the add new search package button. This opens the screen used to create a new search package.

-

When prompted, enter Library as the search package name. Observe that the search package ID is automatically generated from the name that you enter. Click the continue button to proceed to the second step of search package creation.

-

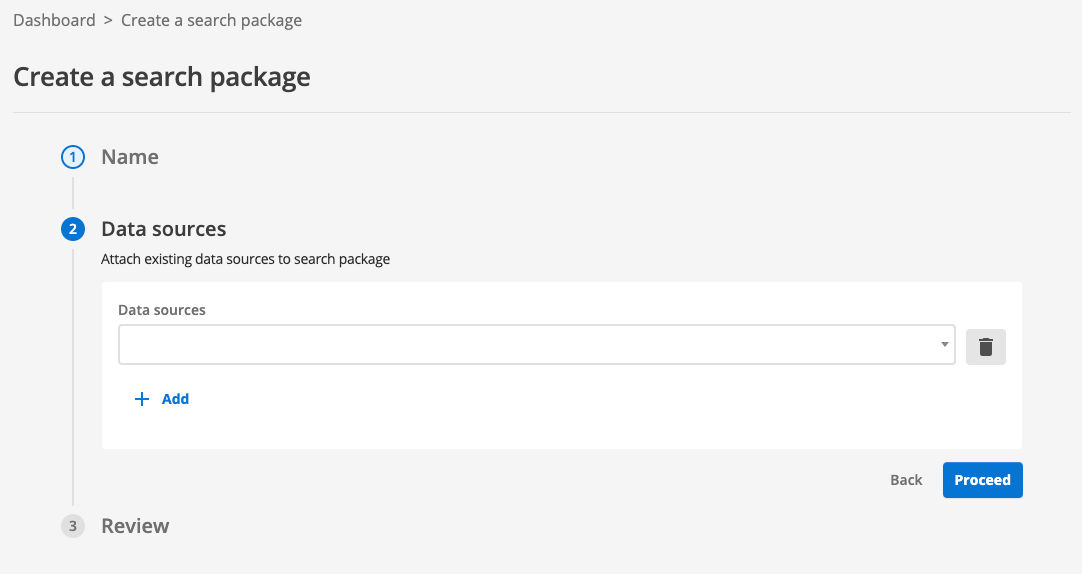

Step 2 of search package creation enables you to (optionally) attach existing data sources to your new search package, which you pick from the drop-down menu. Click the proceed button to skip this step as we will create our data source later.

-

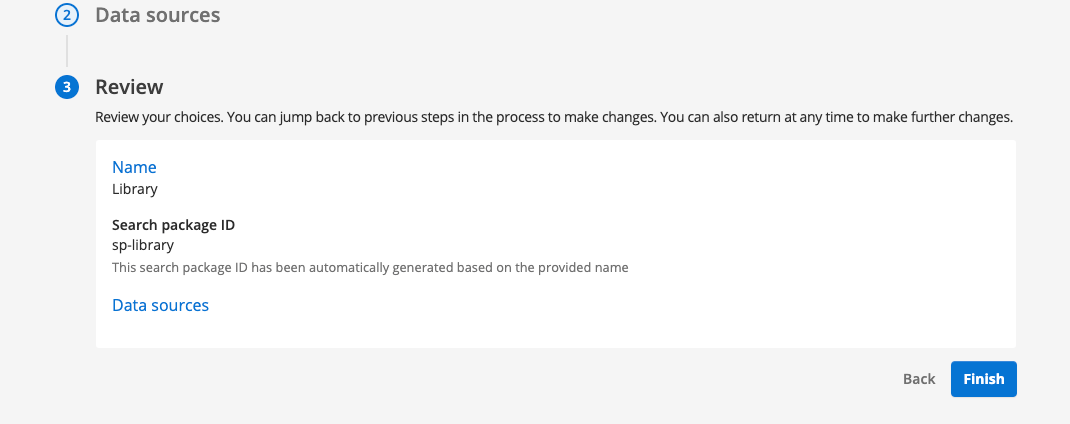

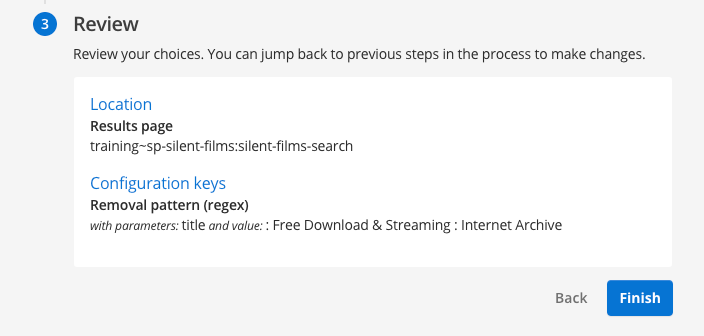

Review the information that you have entered. If you find any mistakes in the information you have entered you can return to a previous step by clicking on the step number. Once you are satisfied with the information in the review panel, click the finish button to create your search package.

-

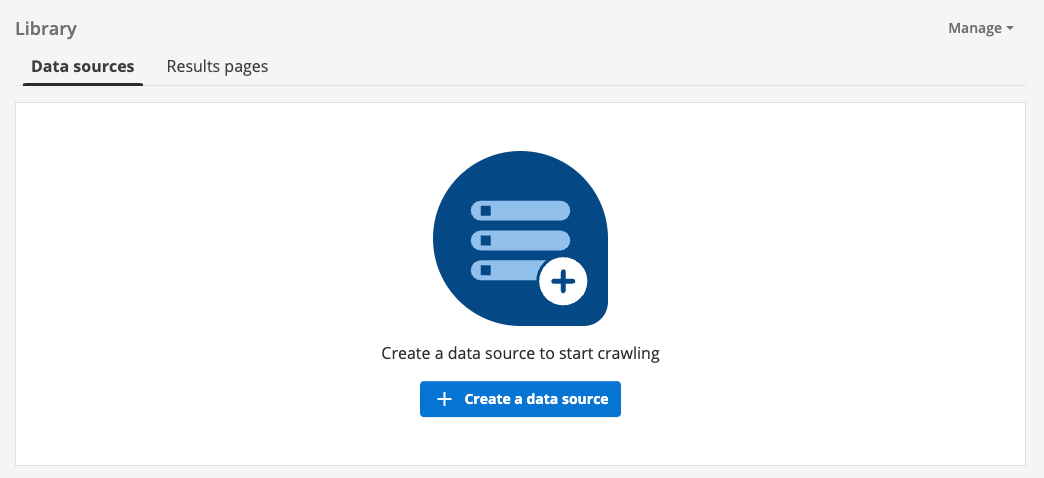

The search package management screen is displayed. Data sources and results pages can be set up from the panel located at the bottom of your page. However, for this exercise we will return to the main search dashboard before setting these up. Return to the search dashboard home page by clicking the dashboard item in the breadcrumb trail, the search dashboard item in the side navigation, or by clicking on the Funnelback logo on the menu bar.

-

You are returned to the search package listing. Scroll down the list of search packages and observe that the Library search package that you just created is now listed with the other search packages.

2.2. Step 2: Create your data sources

Tutorial: Create a web data source

This exercise sets up the data source that will crawl and index a website that contains the complete works of Shakespeare.

A web data source is used to gather and index the content of a website or set of websites. Web data sources contain HTML, PDF and MS Office files that are gathered by crawling a website or set of websites.

A web crawler is a bit like a user that loads a web page and then clicks on every link on the web page in turn, repeating this process until all the links on the website have been visited.

| The web crawler’s view of a website is similar to what you would see if you visited the website in a web browser, but with Javascript disabled. The web crawler doesn’t execute Javascript so cannot crawl a website if it requires Javascript to generate the page. |

This exercise continues on directly from the previous exercise.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Start the process to create a new data source by clicking on the create a data source button, located on the data sources tab that is displayed for the search package you created in the previous exercise.

-

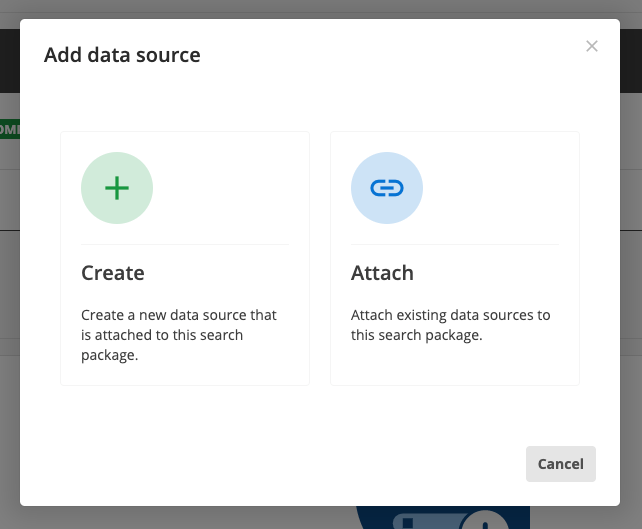

A modal opens providing you with the option to create or attach a data source. The attach option can be used to attach an existing data source to your search package. We are not interested in using any of the existing data sources in our new Library search, so we will create a new data source. Click the create button.

-

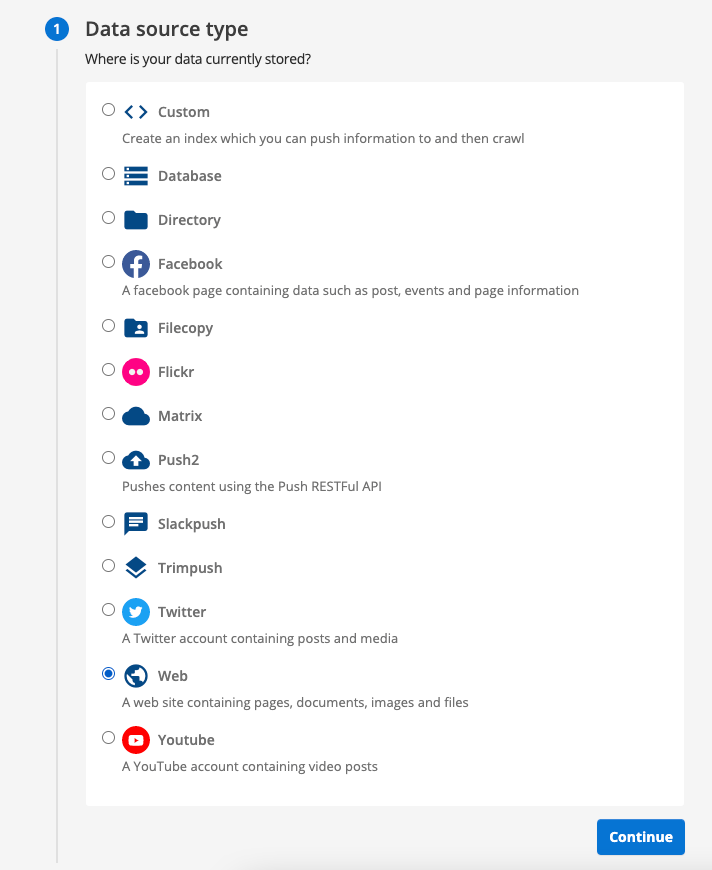

The first step of data source creation requires you to choose your data source type. Select the web radio button then click the continue button.

-

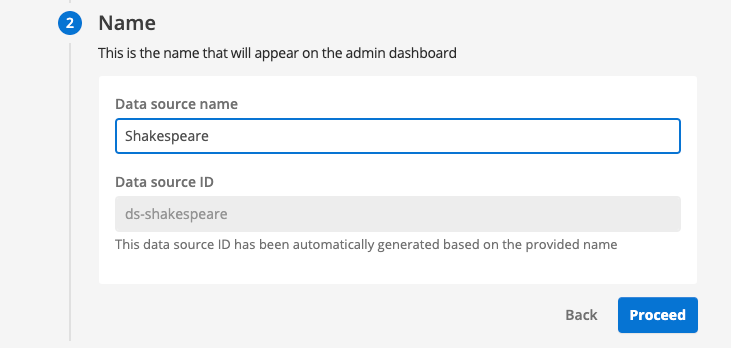

Enter Shakespeare when prompted for a data source name. Observe that the data source ID is automatically generated from your chosen name. Click the proceed button to move to the configuration step.

-

Enter some basic configuration for the data source. At a minimum you need to tell the crawler what should and shouldn’t be included in the crawl.

-

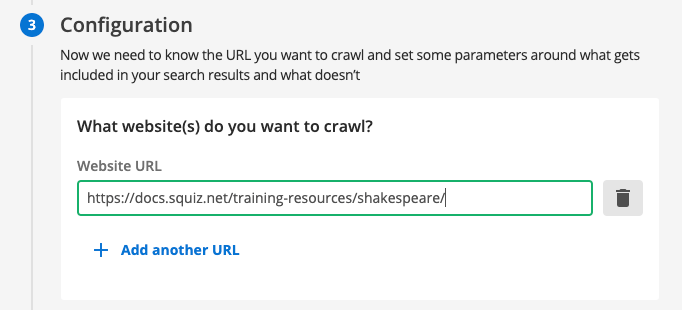

Enter the following into the

What website(s) do you want to crawl?field:https://docs.squiz.net/training-resources/shakespeare/Whatever you enter into this box will be included in your search, if the URL of the page matches.

Normally you would just enter your website home page here (for example, https://www.example.com/) - this example is a little more complicated because we are crawling a local copy of the website for training purposes. The two following sections following this can be used to further adjust what is included or excluded from your search:

- What do you want to exclude from your crawl?

-

If any of the items listed here fully or partially match a page URL, then that page will not be included in the search (and links contained in the page won’t be followed).

- Which non-HTML file types do you want to crawl as well?

-

This is a list of non-HTML documents that will be included in the search.

-

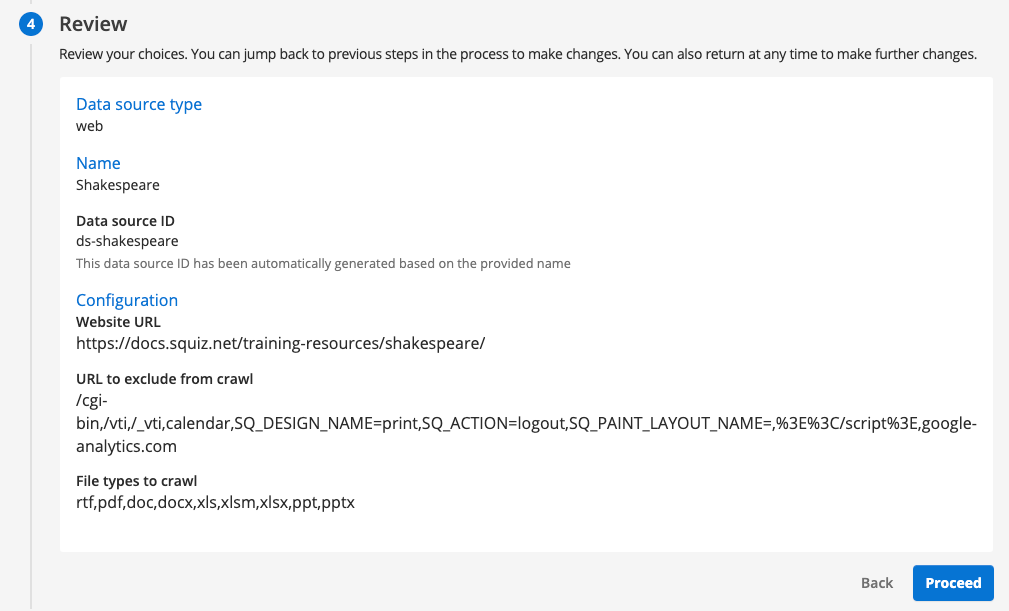

Click the proceed button to review the information you have provided. If you find any errors in what you’ve provided you can click on the step number to update the information. Once you are happy with the information in the review panel click the proceed button to create your data source.

-

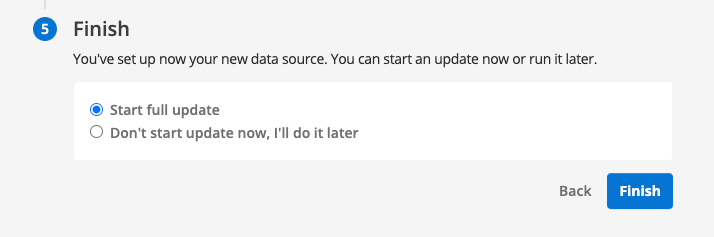

You are provided with a final option to update your data source now, or to update it later. Select the option to update now, then click the finish button to complete the data source creation steps.

In most cases you will want to update your data source later because you will want to provide additional configuration such as metadata. -

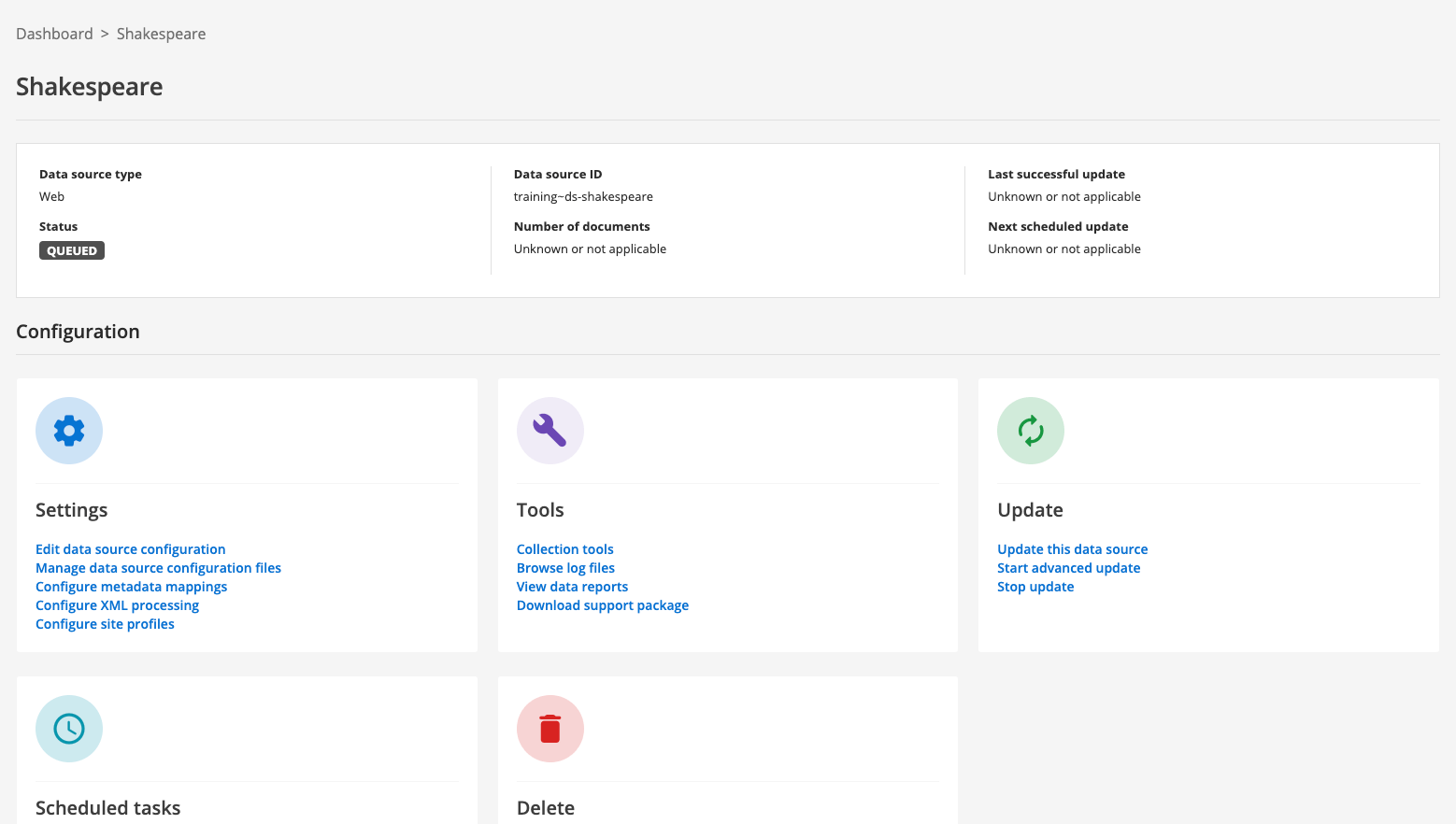

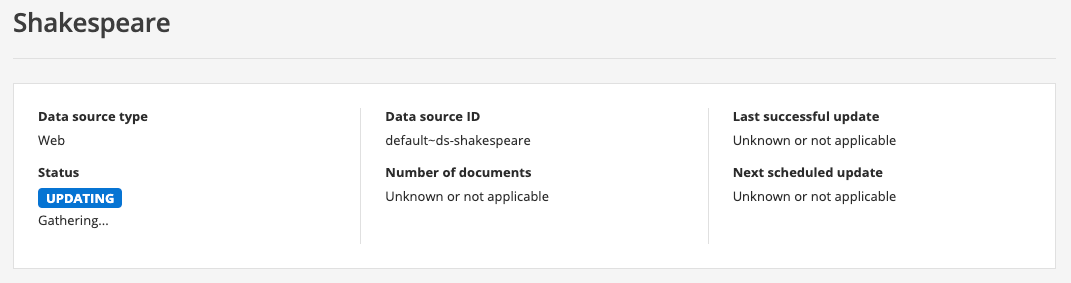

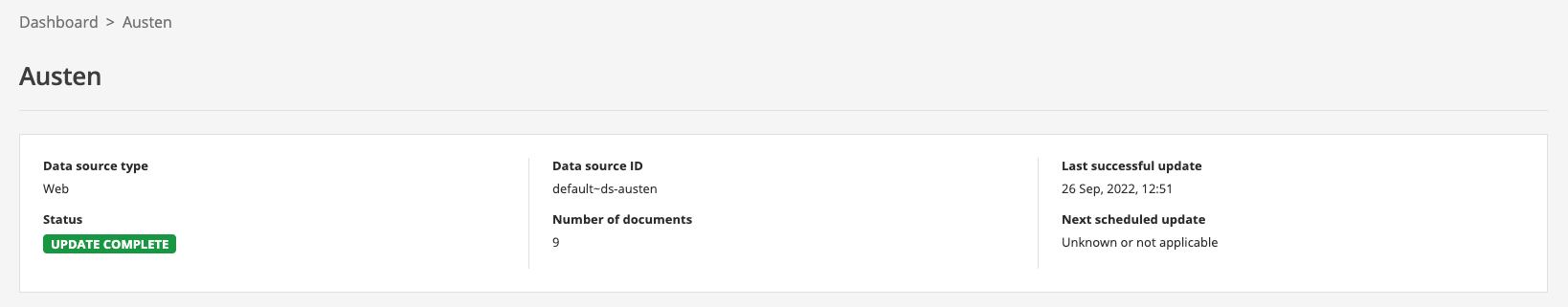

The data source management screen loads. Note that the status of the data source reads queued. This means that an update of the data source has been added to the task queue, but hasn’t yet started. The status will refresh as the update progresses.

-

After a short time the status message should update to indicate that the update is running (or has finished).

-

Repeat the above steps to create a second data source with the following details:

- Data source type

-

web

- Data source name

-

Austen - What website(s) do you want to crawl?

- What do you want to exclude from your crawl?

-

leave as default value

- Which non-HTML file types do you want to crawl as well?

-

leave as default value

Here we are creating a second data source to independently crawl the austen website. In general, we recommend that you add the website to your existing web data source (especially if you are likely to have cross-linking between the websites) as this results in better ranking quality.

-

When you’ve finished creating the data source, run an update.

2.3. Step 3: Create a results page

Before we delve more into data sources, we’ll take a quick look at results pages, which is the final element required to provide a working search.

Every search package in Funnelback contains one or more results pages which underpin the preview/live templates and configuration and also which can be used to provide access to scoped versions of the search.

Once a results page is created, default display and ranking options can be applied.

Separate results pages should be created:

-

For each search provided by the search package.

-

When separate analytics are desired for the search.

-

When independent templates, best bets, synonyms or curator rules are required for the search.

Tutorial: Create a results page

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Locate the library search package that you created in a previous exercise.

-

Click on the results pages tab then click the create a results page button.

-

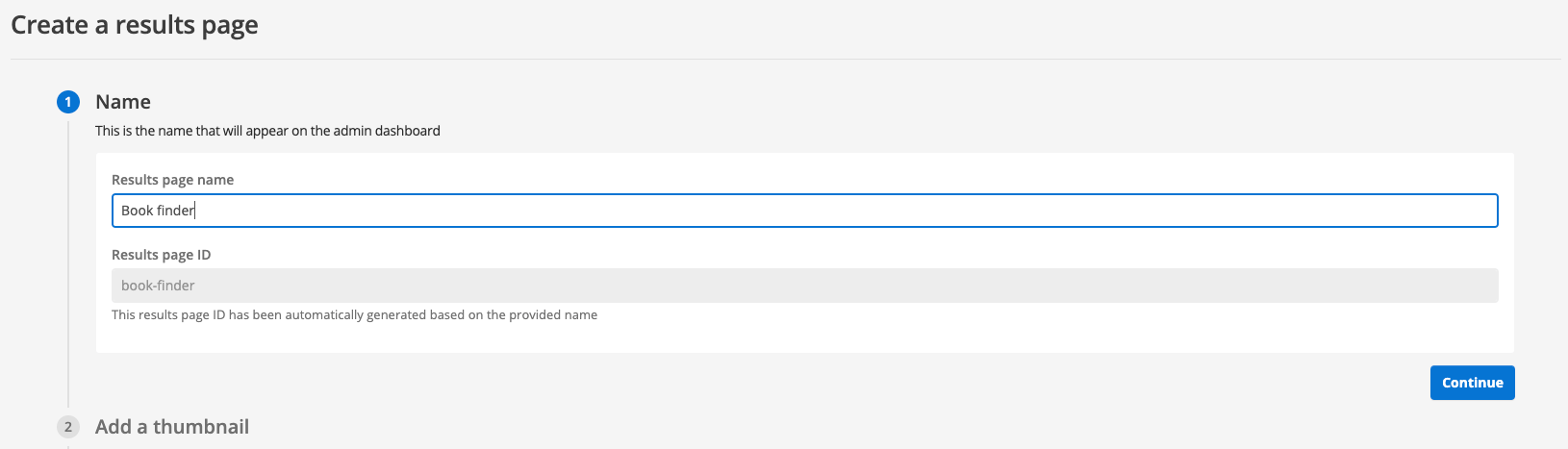

Enter Book finder as the results page name then click the continue button.

-

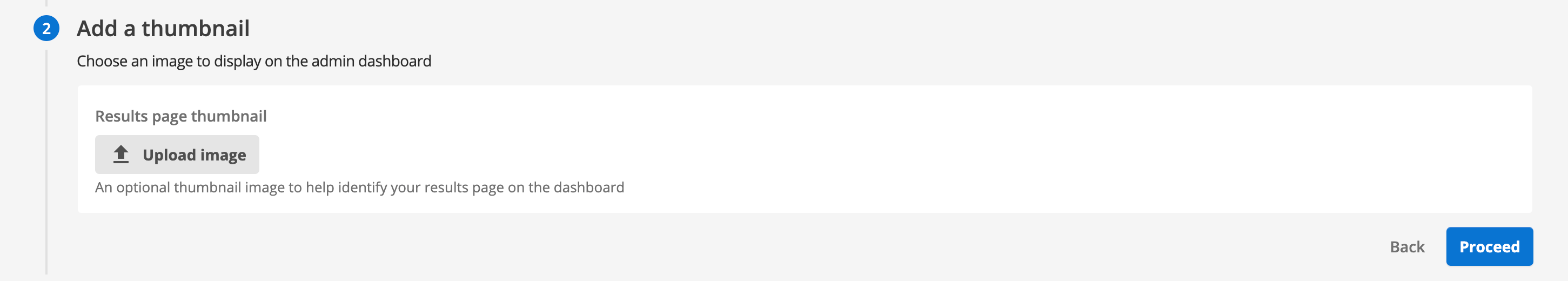

You are then provided with an option to add a thumbnail image for the results page - this image is displayed on the results page management screen and insights dashboard. We’ll skip this step now (an image is easily added later). Click the proceed button to move to the next step of the results page creation process.

-

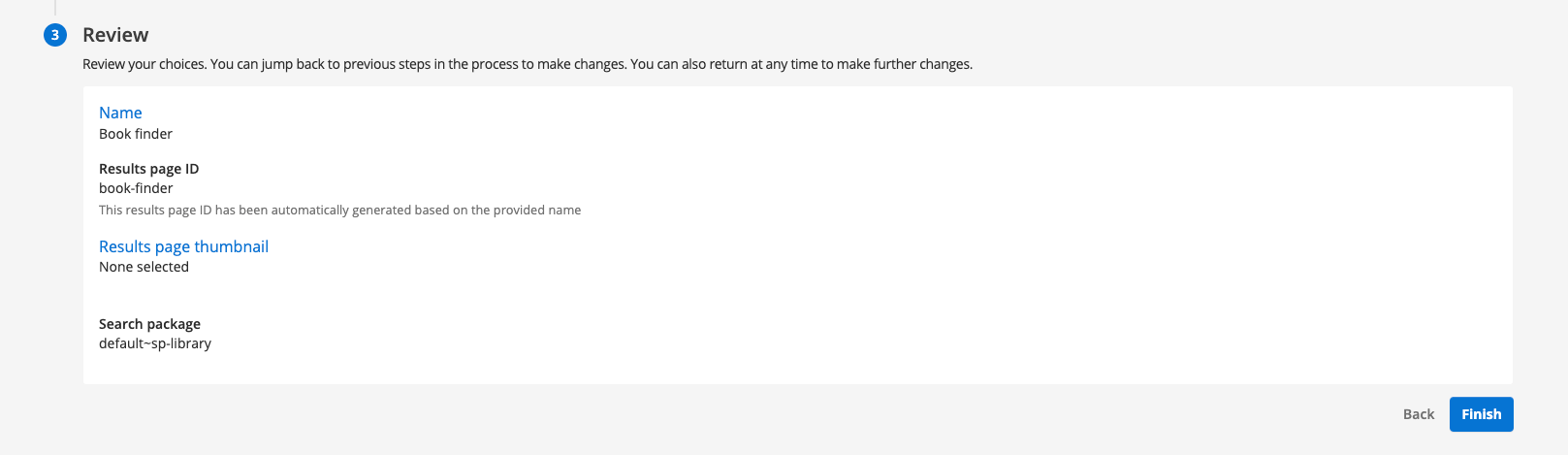

Review the settings that you have entered. Once satisfied, click the finish button to create the results page.

-

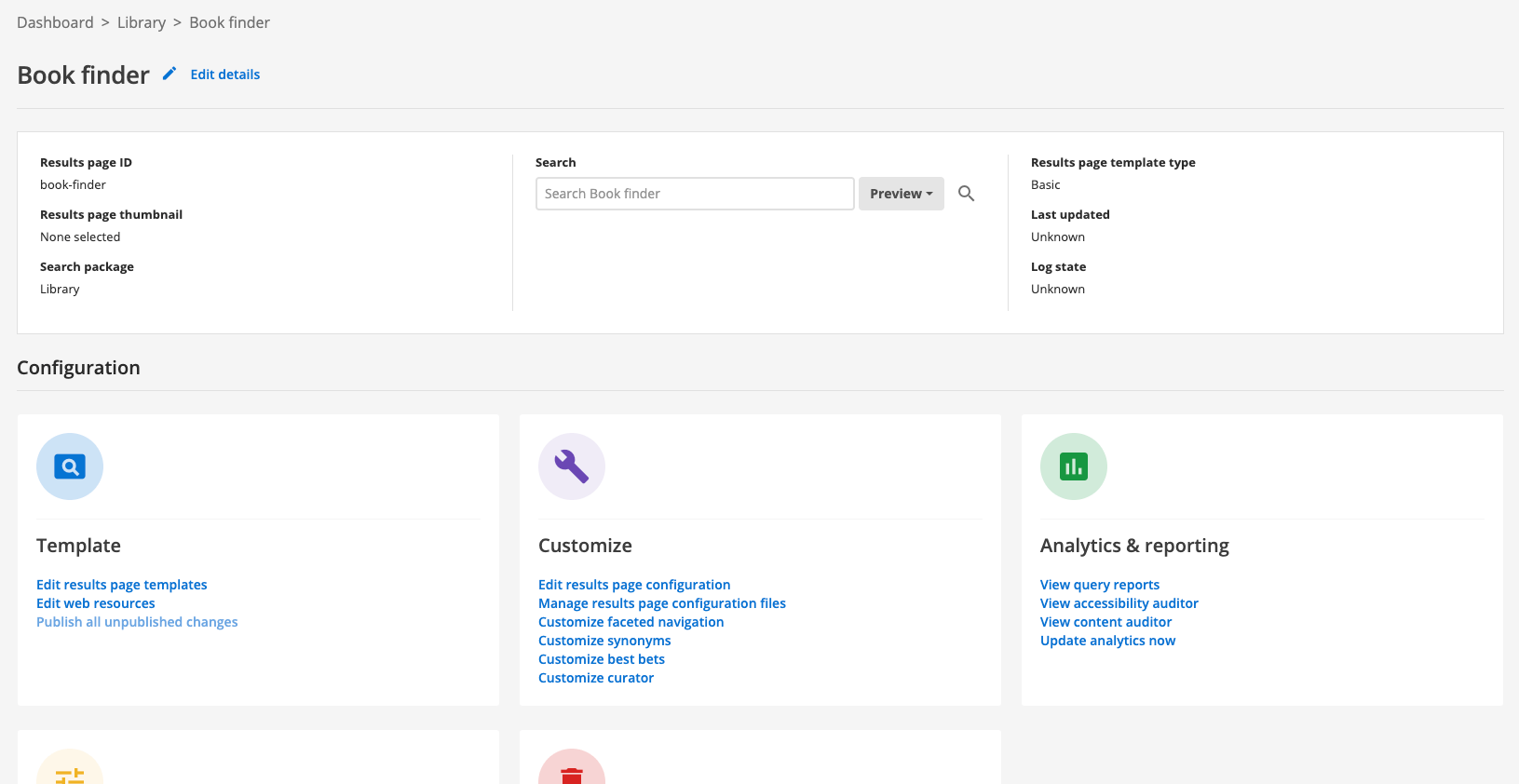

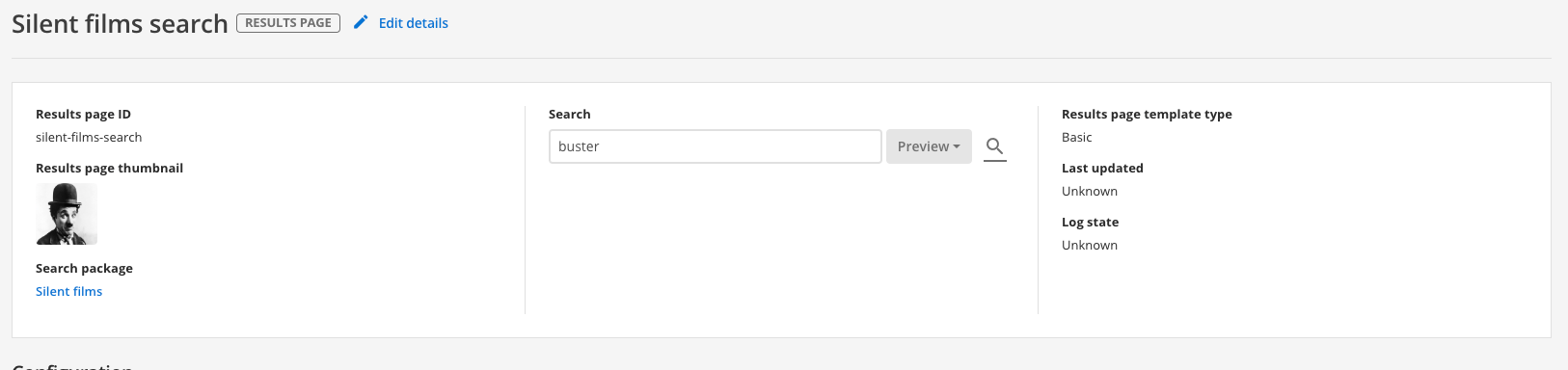

You are redirected to the results page management screen where you can perform further setup of your new results page.

3. Web data sources - update cycle

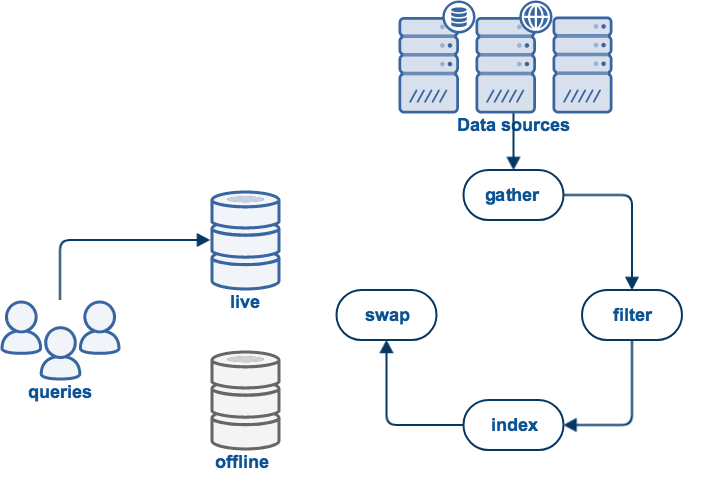

When you start an update, Funnelback commences on a journey that consists of a number of processes or phases.

Each of these phases must be completed for a successful update to occur. If something goes wrong an error will be raised and this will result in a failed update.

The exact set of phases will depend on what type of data source is being updated - however all data source generally have the following phases:

-

A gather phase that is the process of Funnelback accessing and storing the source data.

-

A filter phase that transforms the stored data.

-

An index phase that results in a searchable index of the data.

-

A swap phase that makes the updated search live.

3.1. Gathering

The gather phase covers the set of processes involved in retrieving the content from the data source.

The gather process needs to implement any logic required to connect to the data source and fetch the content.

The overall scope of what to gather also needs to be considered.

For a web data source the process of gathering is performed by a web crawler. The web crawler works by accessing a seed URL (or set of URLs). The seed (or start) URLs are the same as the URL(s) you entered in the What website(s) do you want to crawl? step when creating the data source.

The seed URLs are always fetched by the web crawler and stored locally. The crawler then parses the downloaded HTML content and extracts all the links contained within the file. These links are added to a list of URLs (known as the crawl frontier) that the crawler needs to process.

Each URL in the frontier is processed in turn. The crawler needs to decide if this URL should be included in the search - this includes checking a set of include / exclude patterns, robots.txt rules, file type and other attributes about the page. If all the checks are passed the crawler fetches the URL and stores the document locally. Links contained within the HTML are extracted and the process continues until the crawl frontier is empty, or a pre-determined timeout is reached.

The logic implemented by the web crawler includes a lot of additional features designed to optimize the crawl. On subsequent updates this includes the ability to decide if a URL has changed since the last visit by the crawler.

3.1.1. Crawler limitations

The web crawler has some limitations that are important to understand:

-

The web crawler does not process JavaScript. Any content that can’t be accessed when JavaScript is disabled will be hidden from the web crawler.

-

It is possible to crawl some authenticated websites, however this happens as a specified user. If content is personalized, then what is included in the index is what the crawler’s user can see.

-

By default, the crawler will skip documents that are larger than 10MB in size (this value can be adjusted).

3.2. Filtering

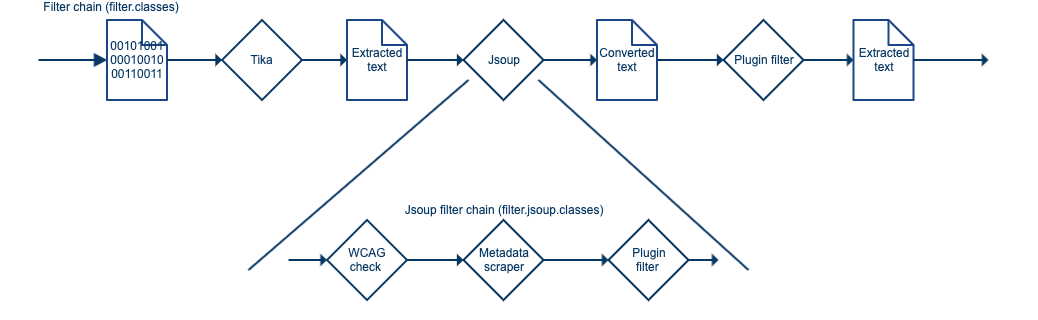

Filtering is the process of transforming the downloaded content into text suitable for indexing by Funnelback.

This can cover a number of different scenarios including:

-

file format conversion - converting binary file formats such as PDF and Word documents into text.

-

text mining and entity extraction

-

document geocoding

-

metadata generation

-

content and WCAG checking

-

content cleaning

-

additional or custom transformations defined in a filter plugin.

3.3. Indexing

The indexing phase creates a searchable index from the set of filtered documents downloaded by Funnelback.

The main search index is made up of an index of all words found in the filtered content and where the words occur. Additional indexes are built containing other data pertaining to the documents. These indexes include document metadata, link information, auto-completion and other document attributes (such as the modified date and file size and type).

Once the index is built it can be queried and does not require the source data used to produce the search results.

3.4. Swap views

The swap views phase serves two functions - it provides a sanity check on the index size and performs the operation of making the updated indexes live.

All Funnelback data sources (except push data sources) maintain two copies of the search index known as the live view and the offline view.

When the update is run all processes operate on an offline view of the data source. This offline view is used to store all the content from the new update and build the indexes. Once the indexes are built they are compared to what is currently in the live view - the set of indexes that are currently in a live state and available for querying.

The index sizes are compared. If Funnelback finds that the index has shrunk in size below a definable value (for example, 50%) then the update will fail. This sanity check means that an update won’t succeed if the website was unavailable for a significant duration of the crawl.

An administrator can override this if the size reduction is expected. For example, a new site has been launched, and it’s a fraction of the size of the old site.

Push data sources used an API based mechanism to update and will be covered separately.

4. Checking an update

Funnelback maintains detailed logs for all processes that run during an update.

When there is a problem and an update fails the logs should contain information that allows the cause to be determined.

It is good practice to also check the log files while performing the setup of a new data source - some errors don’t cause an update to fail. A bit of log analysis while building a data source can allow you to identify:

-

pages that should be excluded from the crawl

-

crawler traps

-

documents that are too large

-

documents of other types

Each of the phases above generate their own log files - learning what these are and what to look out for will help you to solve problems much more quickly.

Tutorial: Examine update logs

In this exercise some of the most useful log files generated during an update of a web data source will be examined.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Locate the austen data source that you created in the previous exercise.

-

Open the manage data source screen (by clicking on the funnelback website title, or selecting the > configuration menu item. The status should now be showing update complete, which indicates that the update completed successfully.

-

View the log files for the update by clicking on the browse log files link in the tools section.

-

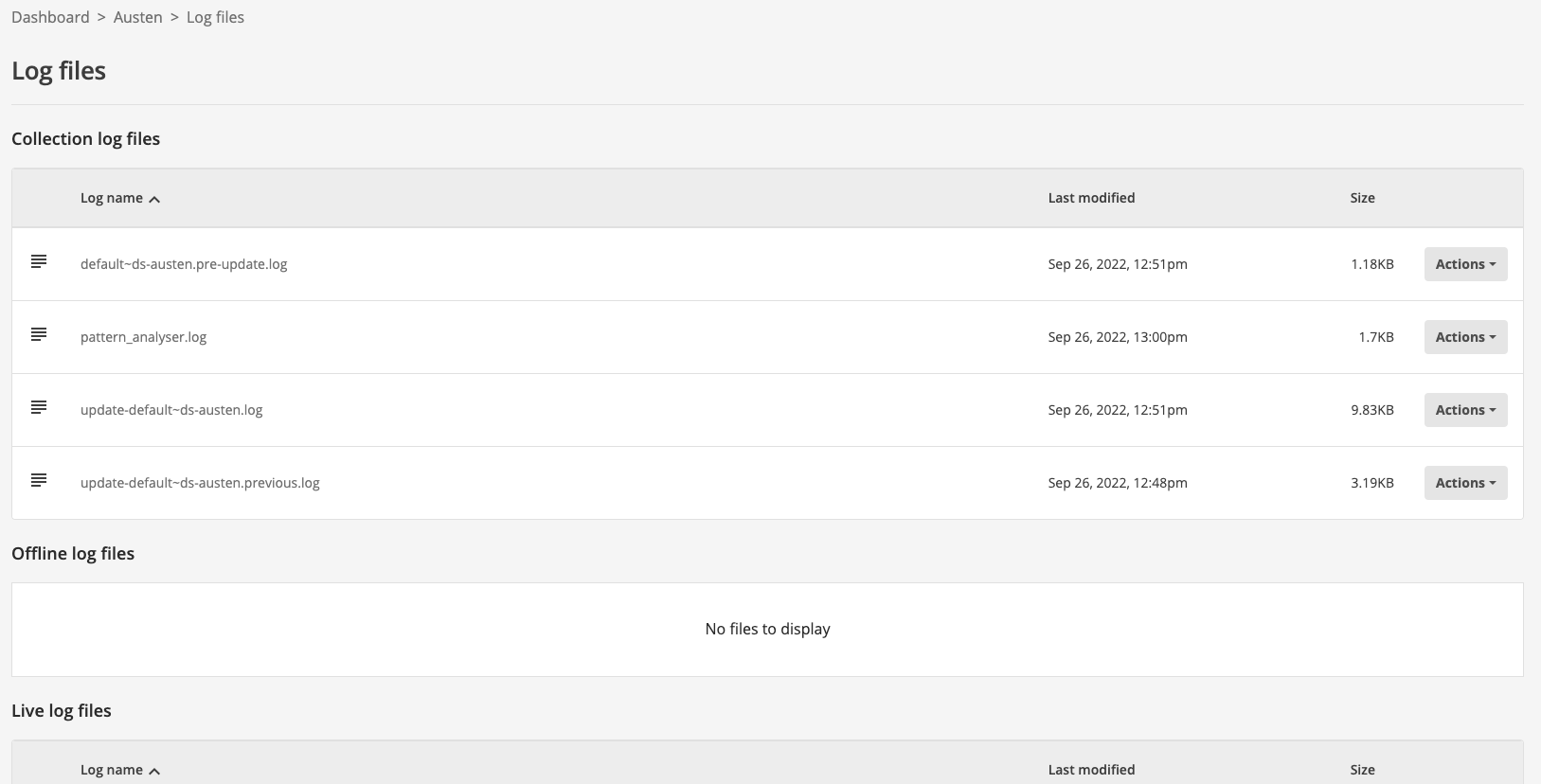

Observe the log manager view of the available log files. The files are grouped under several headings including: collection log files, offline log files and live log files.

The collection log files section contains the top-level update and report logs for the data source. The top-level update log contains high-level information relating to the data source update.

The live log files section includes all the detailed logs for the currently live view of the search. This is where you will find logs for the last successful update.

The offline log files section includes detailed logs for the offline view of the search. The state of the log files will depend on the data source’s state - it will contain one of the following:

-

Detailed logs for an update that is currently in progress

-

Detailed logs for the previous update (that failed)

-

Detailed logs for the successful update that occurred prior to the currently live update.

-

4.1. Debugging failed updates

An update can fail for numerous reasons. The following provides some high level guidance by providing some common failures and how to debug them.

The first step is to check the data source’s update log and see where and why the update failed. Look for error lines. Common errors include:

-

No documents stored: For some reason Funnelback was unable to access any documents so the whole update failed (as there is nothing to crawl). Look at the offline crawl logs (

crawler.log,crawl.log.X.gz) andurl_errors.logfor more information. The failure could be the result of a timeout, or a password expiring if you are crawling with authentication. -

Failed changeover conditions: After building the index a check is done comparing with the previous index. If the index shrinks below a threshold then the update will fail. This can occur if one of the sites was down when the crawl occurred, or if there were excessive timeouts, or if the site has shrunk (for example, because it has been redeveloped or part of it archived). If a shrink in size is expected you can run an advanced update and swap the views.

-

Failures during filtering: Occasionally the filtering process crashes causing an update to fail. The

crawler.logorgather.logmay provide further information to the cause. -

Lock file exists: The update could not start because a lock file was preventing the update. This could be because another update on the data source was running; or a previous update crashed leaving the lock files in place. The lock can be cleared from the search dashboard by selecting the data source then clicking on the clear locks link that should be showing on the update tab.

-

Failures during indexing: Have a look at the offline index logs (

Step-*.log) for more details.

Tutorial: Debug a failed update

In this exercise we will update the configuration so that the crawler is unable to access the start URL to provide a simple example of debugging an update.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

locate the austen data source.

-

Open the manage data source screen (by clicking on the austen title, or selecting the > configuration menu item. The status should now be showing update complete, which indicates that the update completed successfully.

-

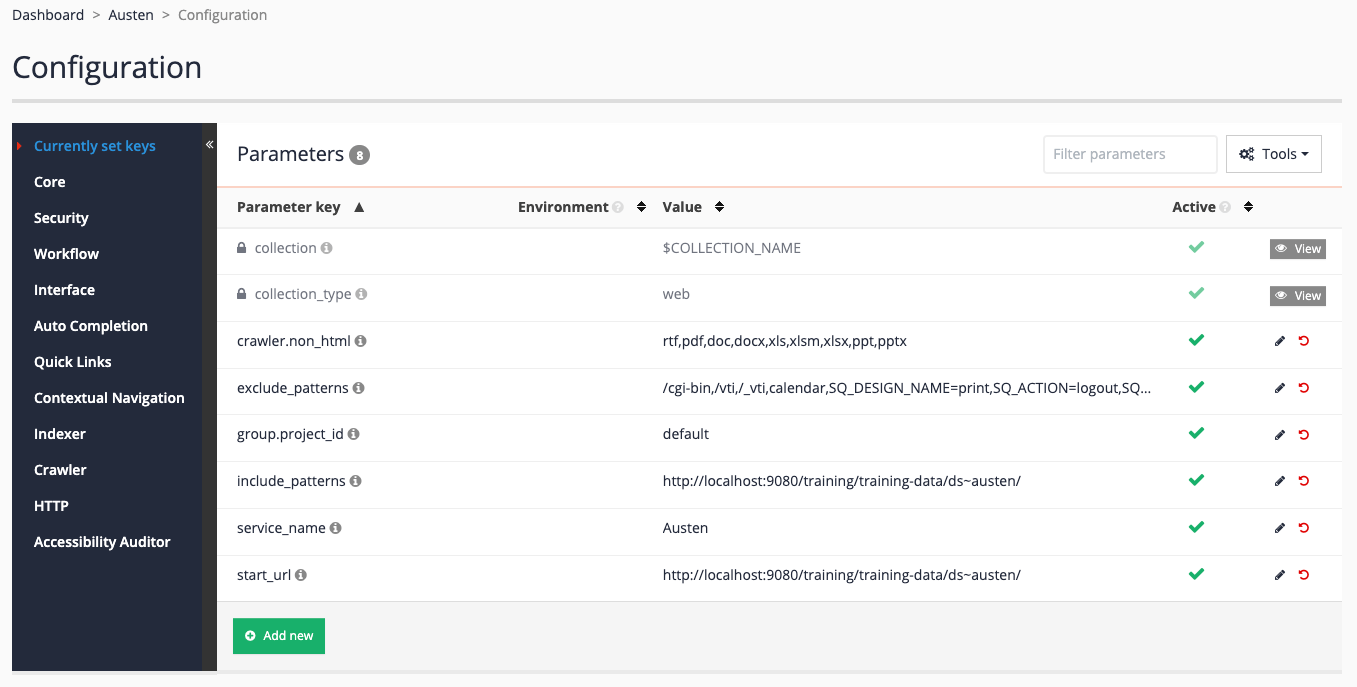

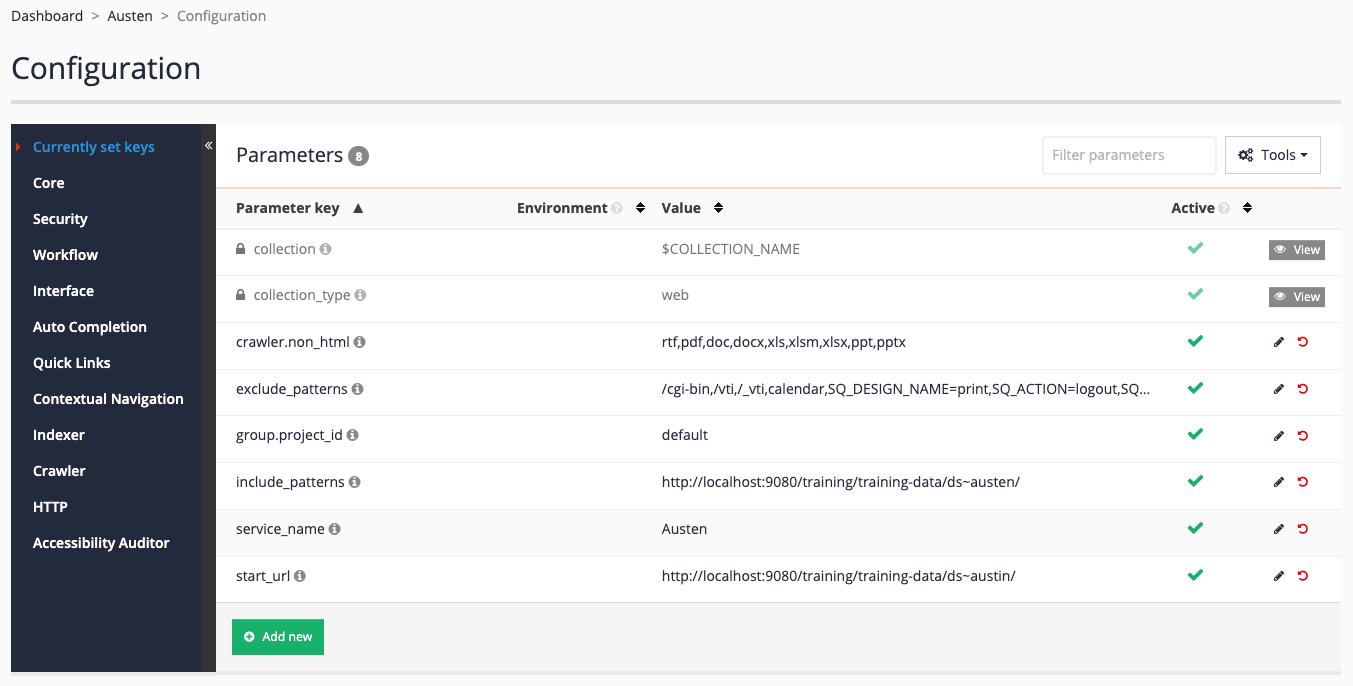

Access the data source configuration by clicking the edit data source configuration option in the settings section.

-

Update the start url by clicking on the

start_urlitem. Update the value tohttps://docs.squiz.net/training-resources/austin/(changeaustentoaustin) then save your changes. We are intentionally using a URL containing a typo in this example so we can examine the resulting errors.

-

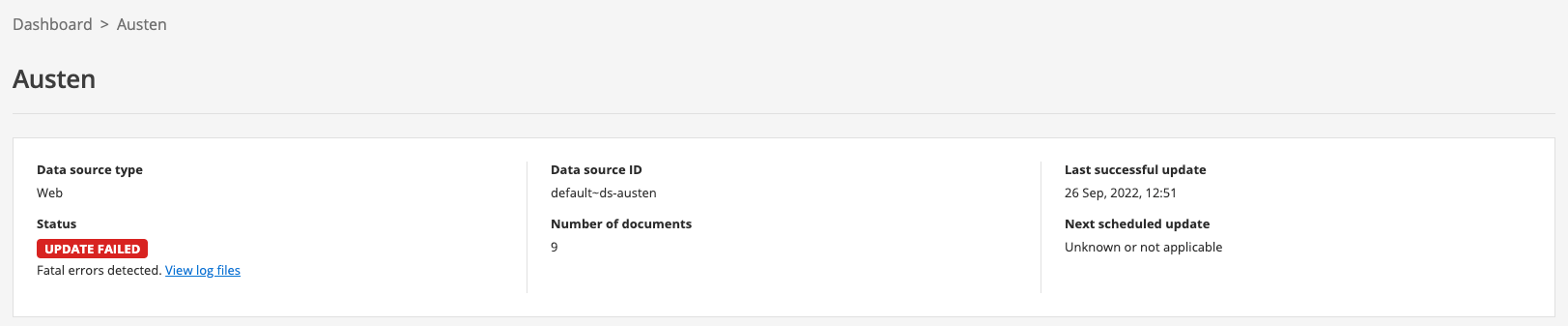

Return to the data source manage screen by clicking Austen in the breadcrumbs, then start an update by clicking on the update data source item in the update section. The update should fail almost immediately, and the status will update to show update failed (note you might need to refresh your browser screen).

-

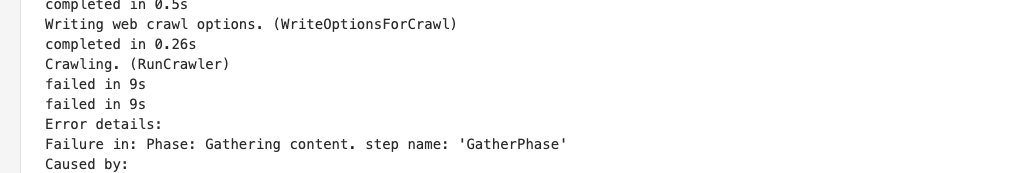

Access the logs (click on browse log files in the tools section). Inspect the log files for the data source - starting with the

update-training~ds-austen.log. Theupdate-<DATA-SOURCE-ID>.logprovides an overview of the update process and should give you an idea of where the update failed. Observe that there is an error returned by the crawl step. This suggests that we should investigate the crawl logs further.

-

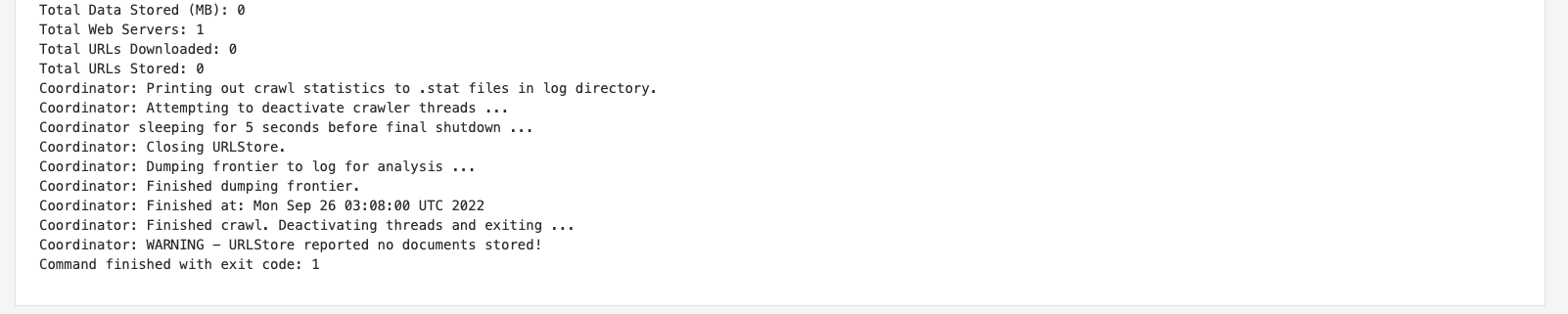

As the data source update failed, the offline view will contain all the log files for the update that failed. Locate the

crawler.logfrom the offline logs section and inspect this. The log reports that no URLs were stored.

-

Examine the

url_errors.logwhich logs errors that occurred during the crawl. From this log you can see that a 404 not found error was returned when accessinghttp://localhost:9080/training/training-data/ds~austin/which is the seed URL for the crawl. This explains why nothing was indexed because the start page was not found, so the crawl could not progress any further.E https://docs.squiz.net/training-resources/austin/ [404 Not Found] [2022:09:26:03:07:54] -

With this information at hand you can investigate further. In this case the reason the crawl failed was due to the seed URL being incorrectly typed. But you might visit the seed URL from your browser to investigate further.

-

Return to the edit data source configuration screen and correct the start URL.

4.2. Review questions: debugging failed updates

-

What’s the difference between the live and offline logs and when would you look at logs from each of these log folders?

-

Which logs would you look at to solve the following?

-

Find files that were rejected due to size during the last update?

-

Find the cause of an update that failed?

-

Determine why a URL is missing from an index?

-

Identify unwanted items that are being stored?

-

5. Search packages

5.1. Understanding how search packages combine indexes

It is important to understand a few basics about how search packages aggregate content from the different data sources.

-

Metadata classes of all included data sources are combined: this means if you have a class called title in data source A and a class called title in data source B there will be a field called title in the search package that searches the title metadata from both data sources. This means you need to be careful about the names you choose for your metadata classes, ensuring that they only overlap when you intend them to. One technique you can use to avoid this is to namespace your metadata fields to keep them separate. (for example, use something like

websiteTitleinstead oftitlein your website data source). -

Generalized scopes of all included data sources are combined: the same principles as outlined above for metadata apply to gscopes. You can use gscopes to combine or group URLs across data sources by assigning using the same gscope ID in each data source, but only do this when it makes sense - otherwise you may get results that you don’t want if you choose to scope the search results using your defined gscopes.

-

Geospatial and numeric metadata: these are special metadata types and the value of the fields are interpreted in a special way. If you have any of these classes defined in multiple data sources ensure they are of the same type where they are defined.

-

Search packages combine the indexes at query time: this means you can add and remove data sources from the search package and immediately start searching across the indexes.

auto-completion and spelling suggestions for the search package won’t be updated to match the changed search package content until one of the data sources completes a successful update. -

You can scope a query only return information from specific data sources within a search package by supplying the

cliveparameter with a list of data sources to include. -

If combined indexes contain an overlapping set of URLs then duplicates will be present in the search results (as duplicates are not removed at query time).

5.2. Configuring search packages

There are a few things that must be considered when configuring search packages - this is due to the separation between the querying of the search packages and the fact that the indexes for included data sources are not directly part of the search package.

Results pages are used to configure the different searches provided by the search package and configuration for most query-time behaviour needs to be made on the results page. Because results pages part of a search package, they inherit configuration from the search package.

Items that should be configured on a search package include:

-

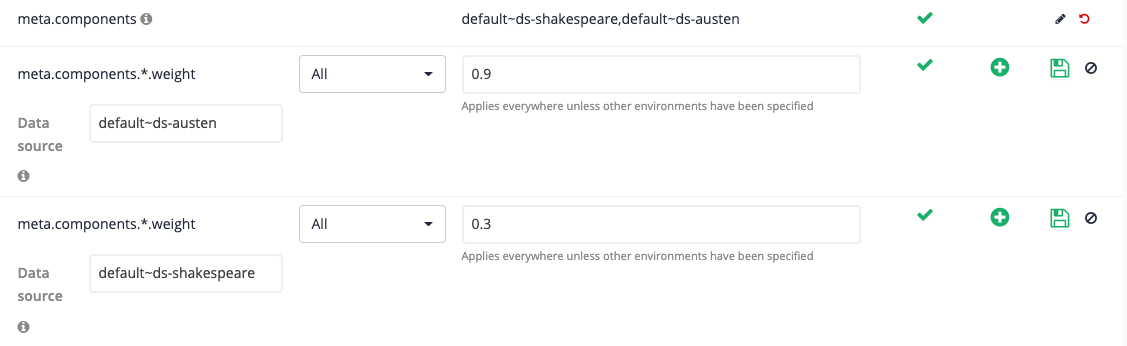

included data sources (meta components)

-

analytics

-

contextual navigation

-

quick links display

-

content auditor

-

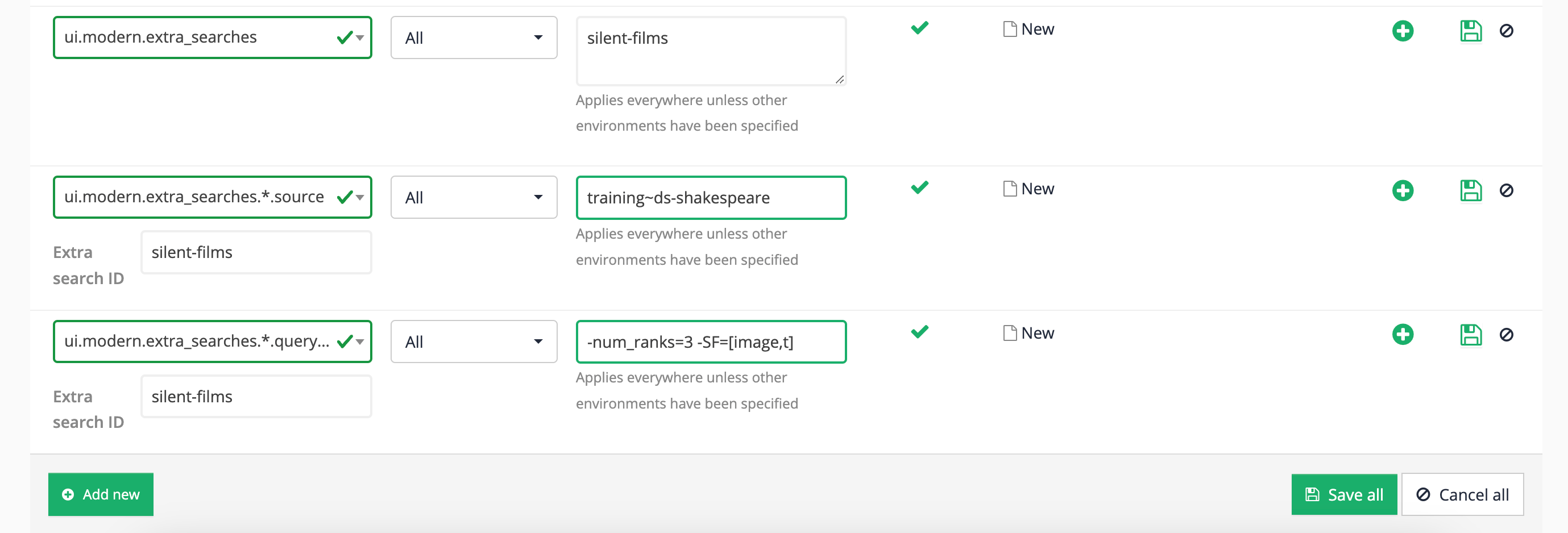

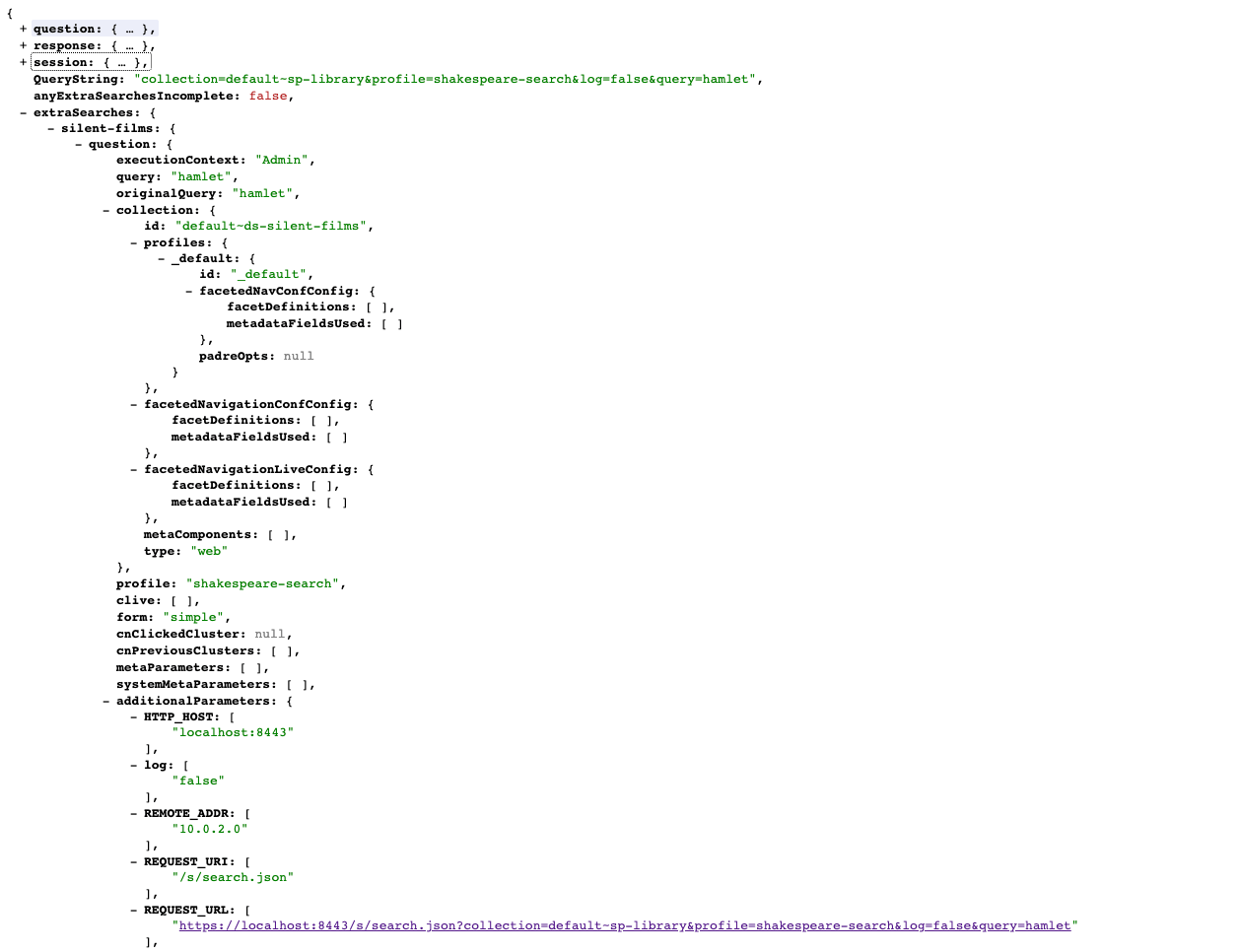

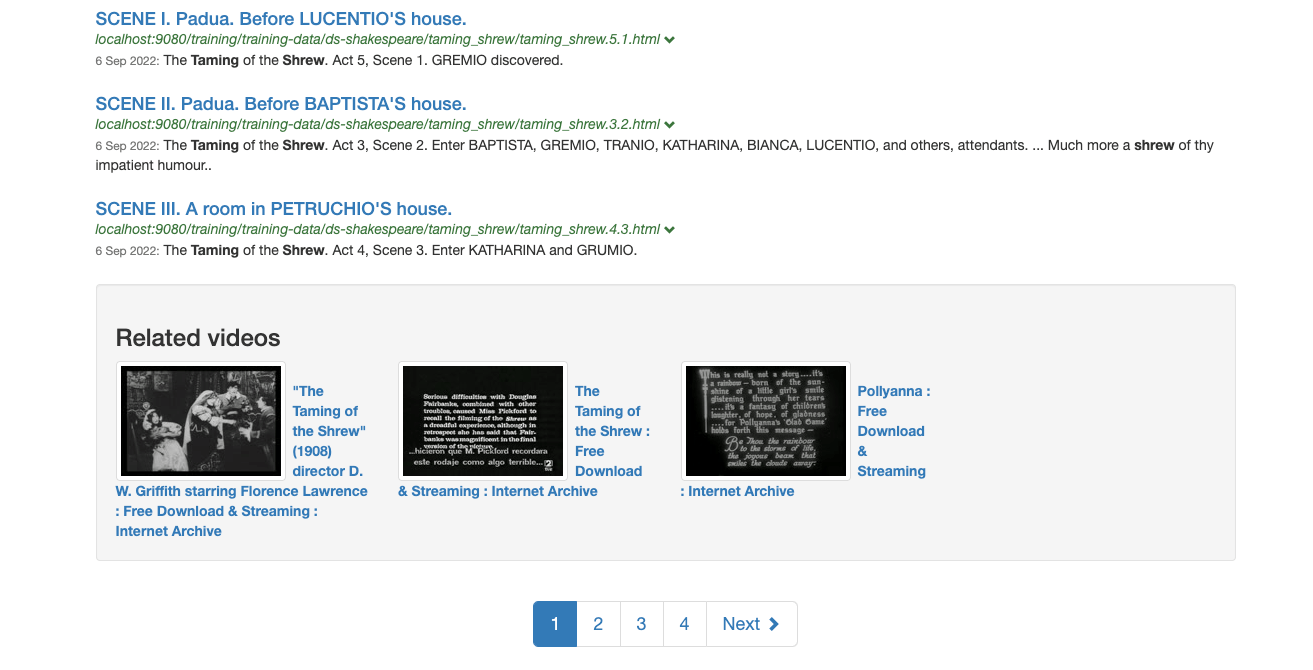

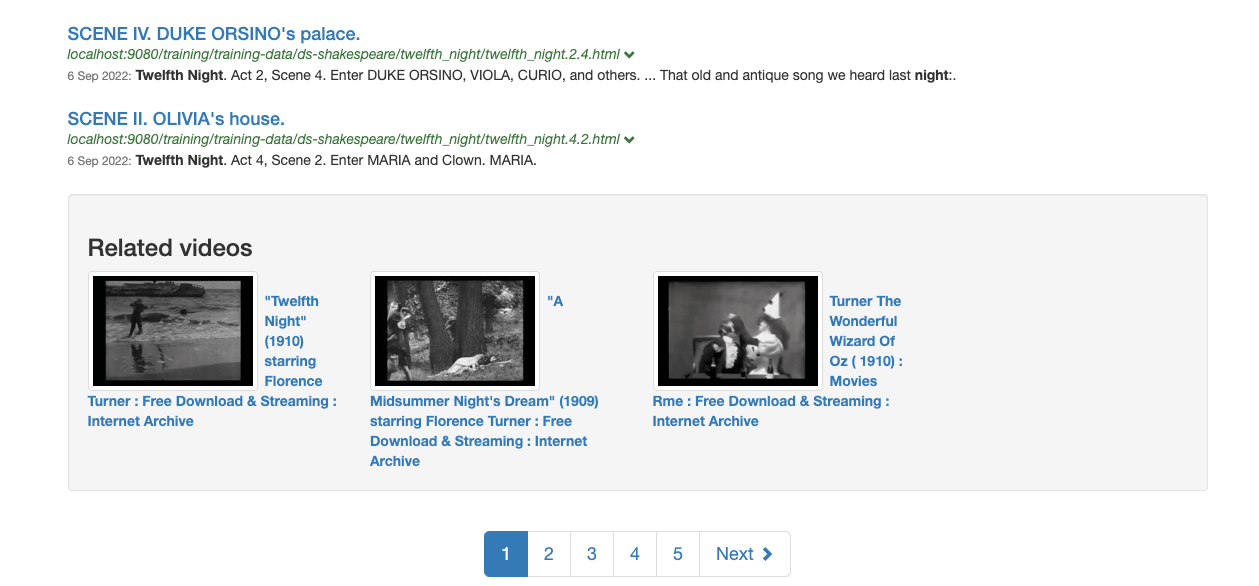

extra searches

Items that should be configured on the results page include:

-

templates

-

synonyms, best bets and curator rules

-

faceted navigation (note: addition of facets based on new metadata fields or generalized scopes require the affected data sources to be updated before the facet will be visible)

-

display options

-

ranking options

-

most auto-completion options

-

search lifecycle plugins

However, because the indexes are still built when the data sources update any changes that affect the update or index build process must be made in the data source configuration. These changes include:

-

metadata field mappings and external metadata

-

gscope mappings

-

indexer options

-

quicklinks generation

-

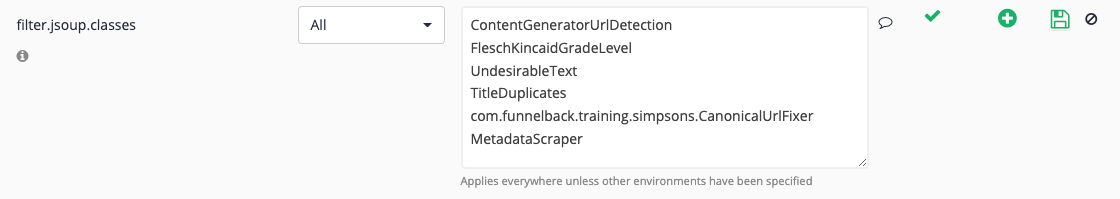

filters and filter plugins

-

spelling options

Tutorial: Define a scope for a results page

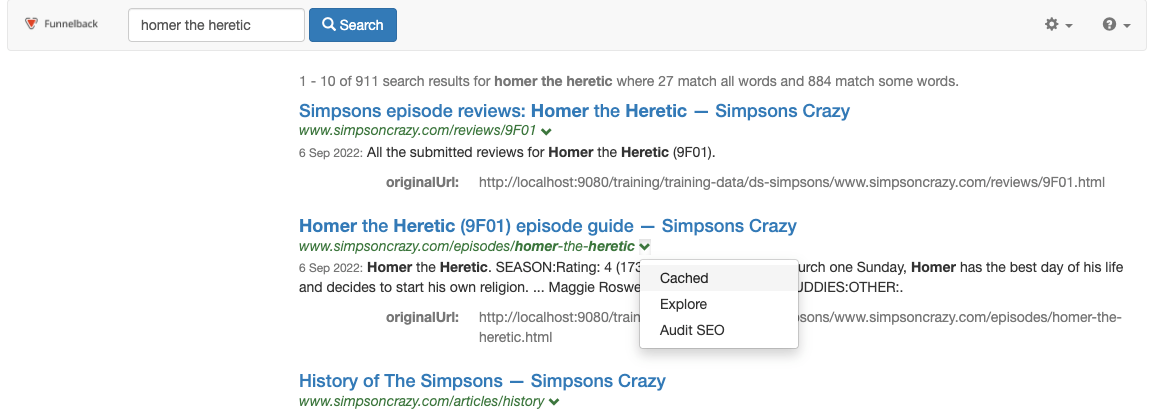

When a new results page is created it has the same content as the parent search package.

Results pages are commonly used to provide a search across a sub-set of content within the search package. To achieve this the results page should be configured to apply a default scope.

In this exercise the Shakespeare search results page will be scoped so that only URLs from the Shakespeare website are returned when the running searches using the Shakespeare search results page.

The Shakespeare search results page is selected by passing a profile parameter set to the results page ID (shakespeare-search) in the search query.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Locate the library search package.

-

Create another results page, but this time call the results page Shakespeare search.

-

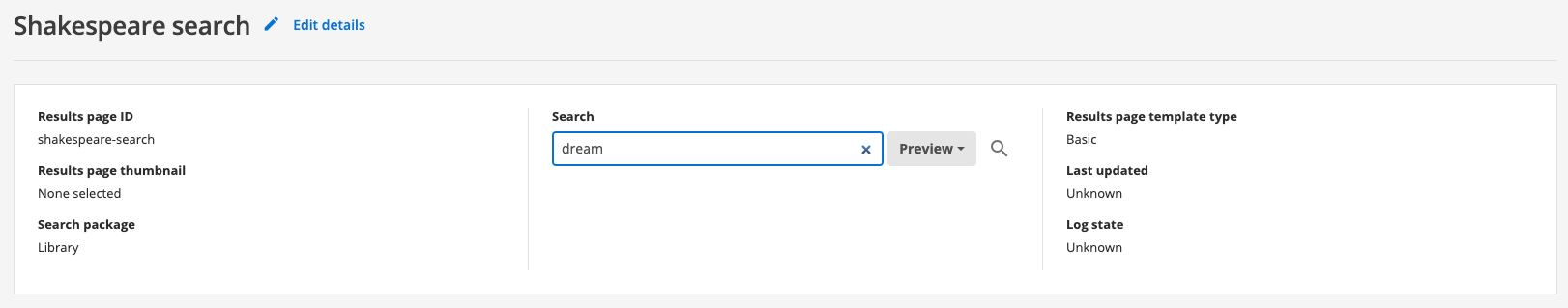

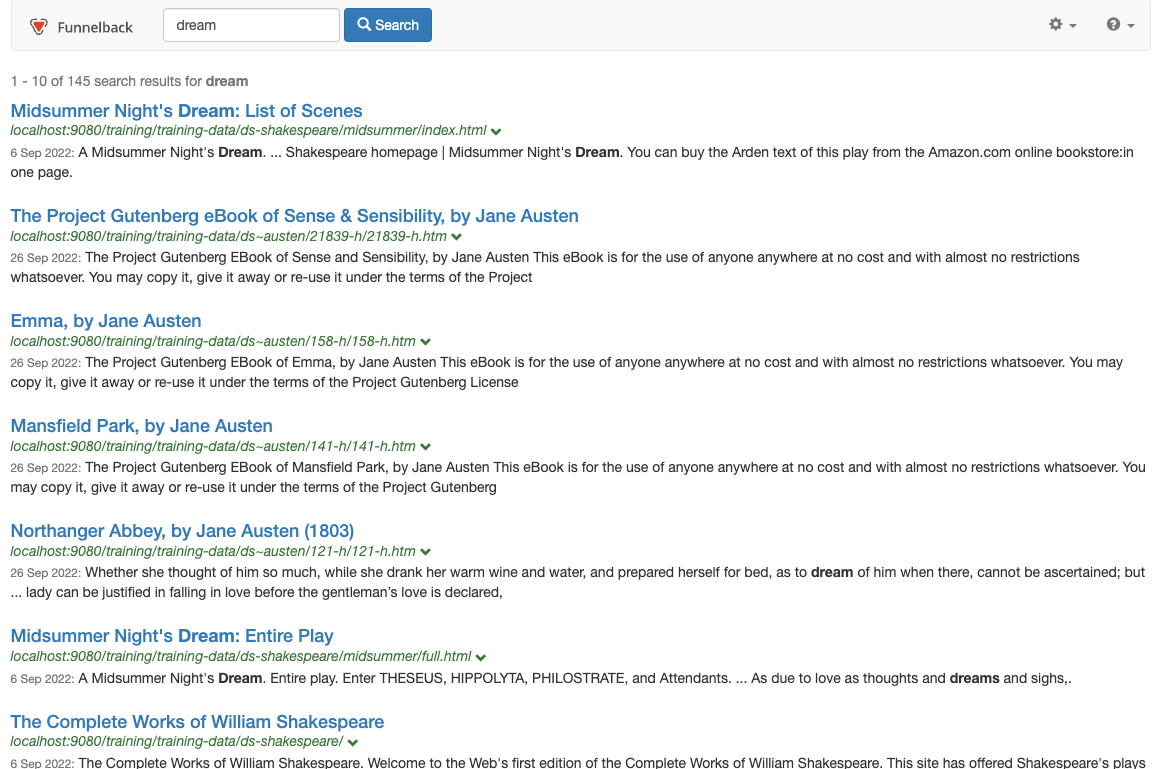

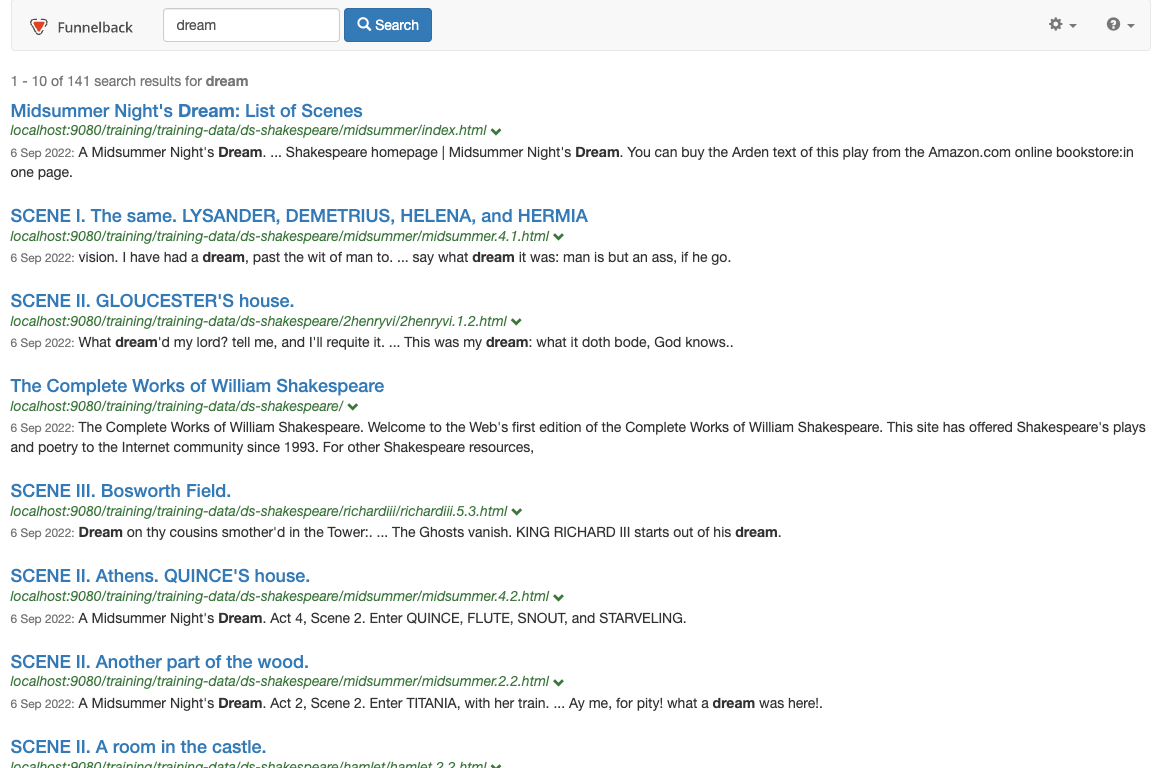

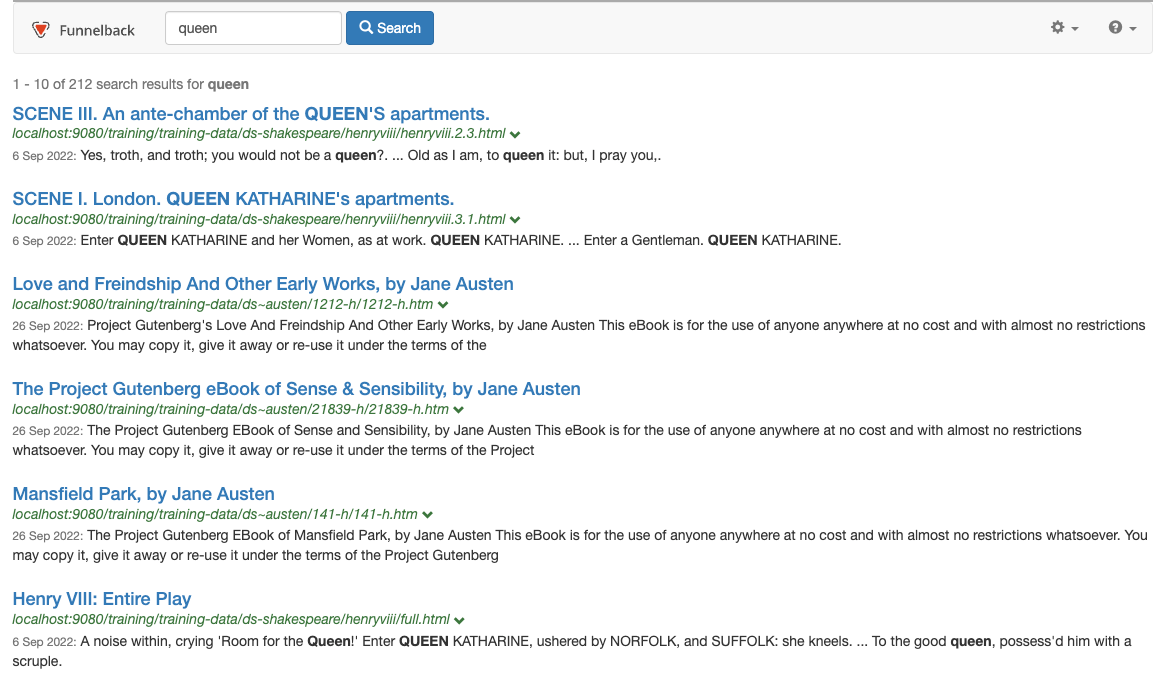

Run a search for dream ensuring the preview option is selected and observe that pages from both the Shakespeare and Austen sites are returned.

-

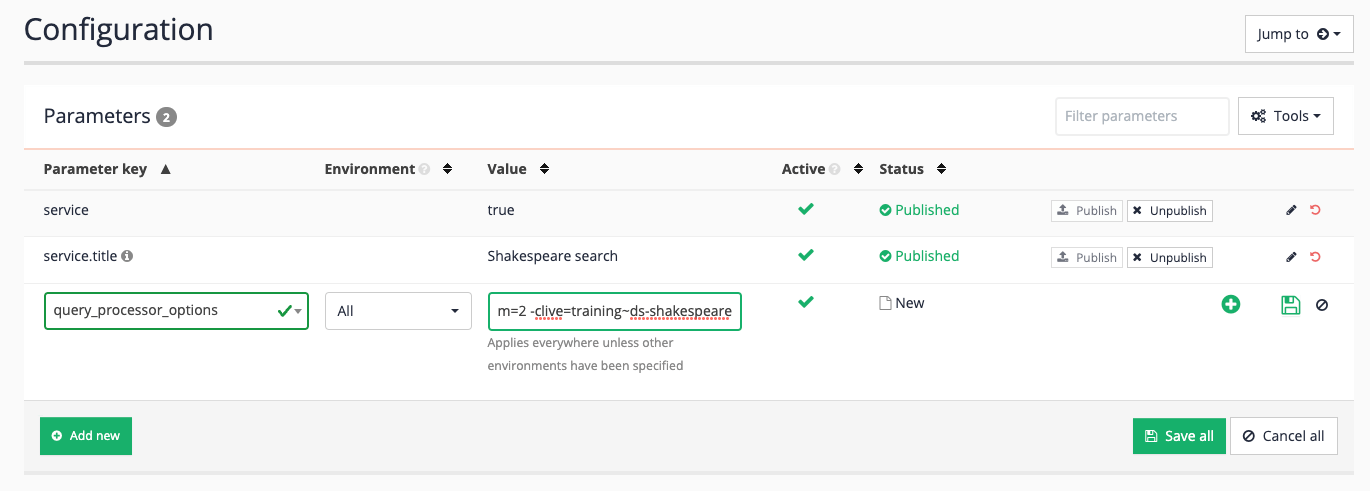

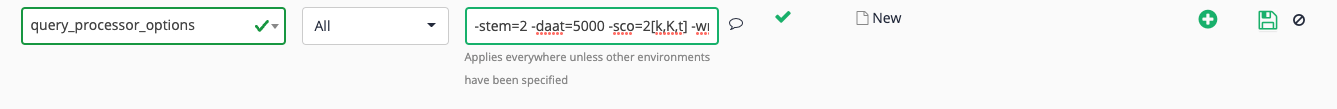

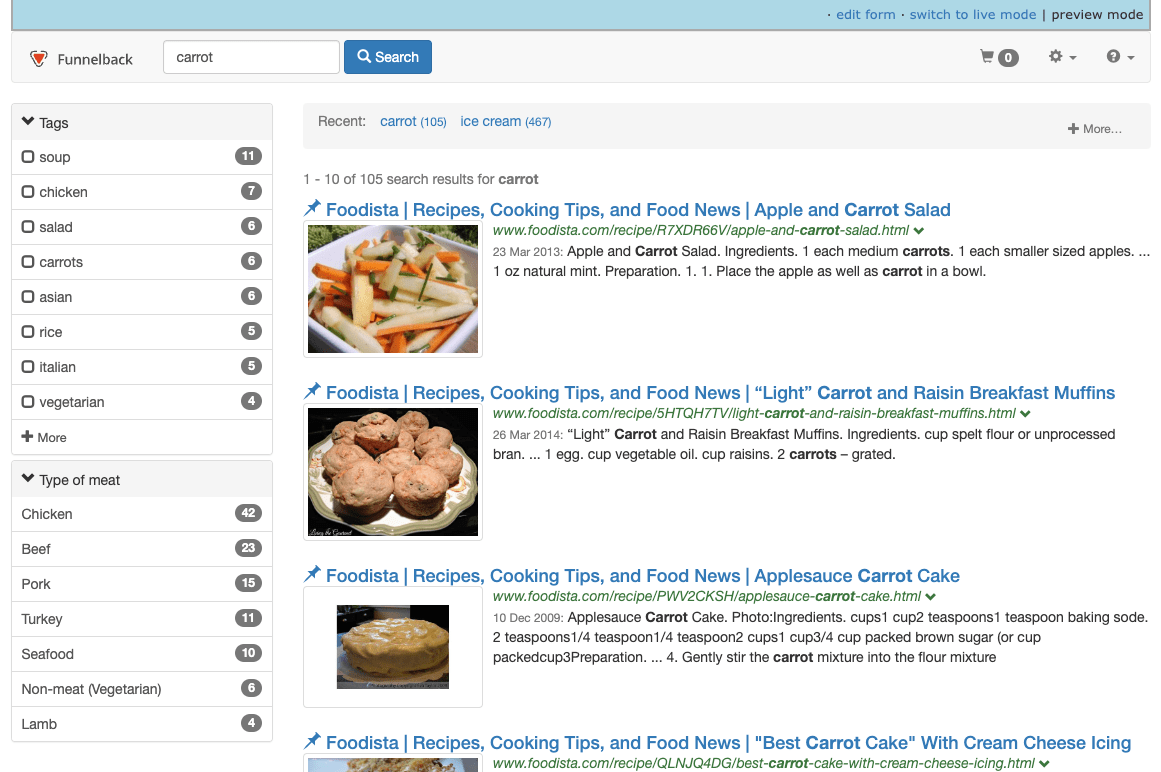

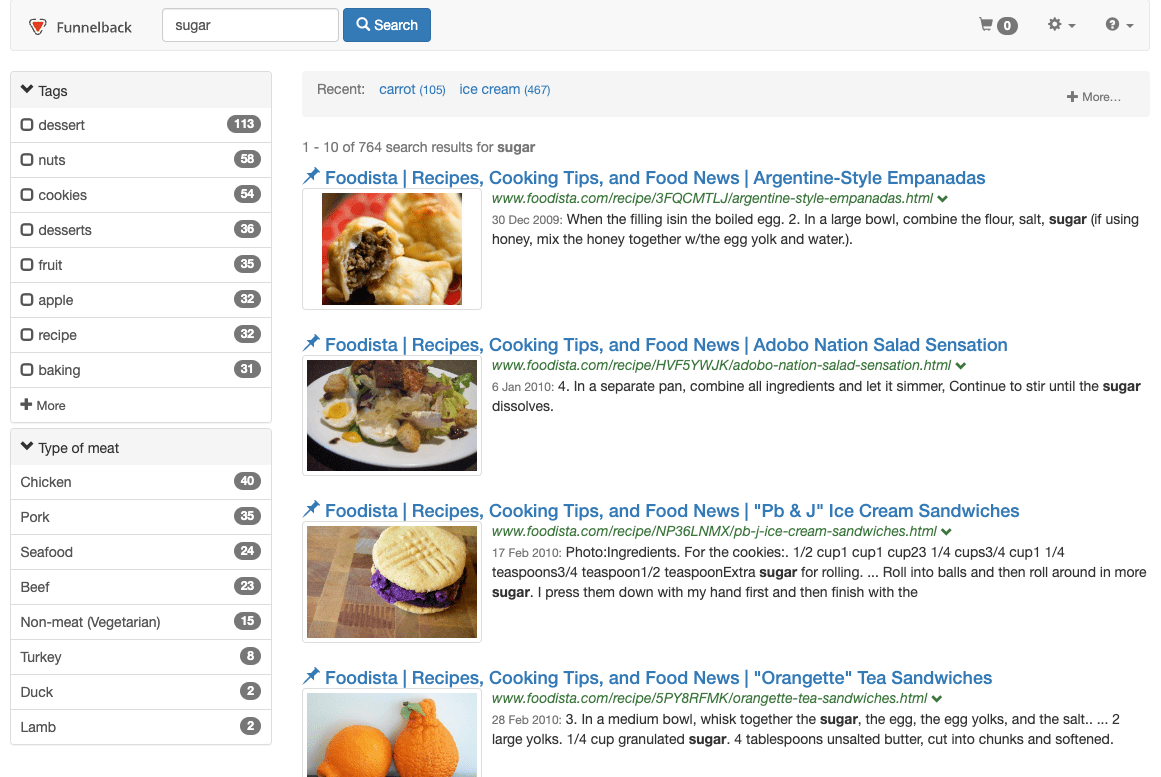

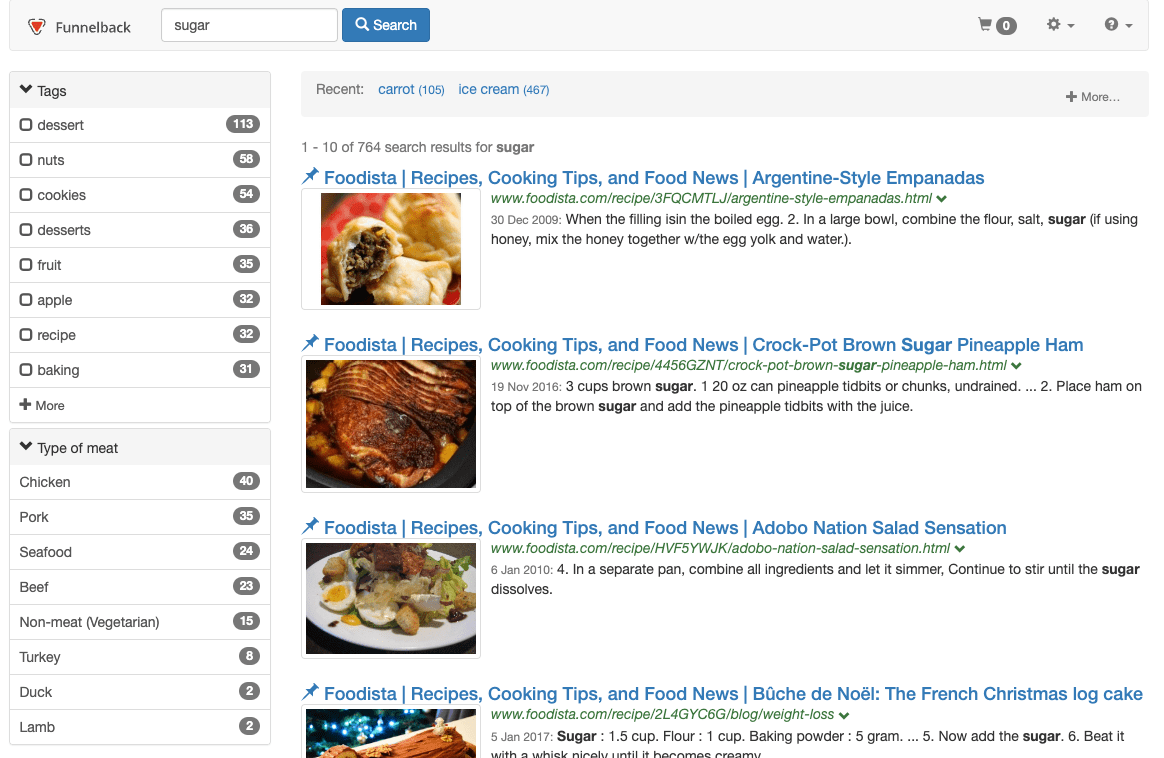

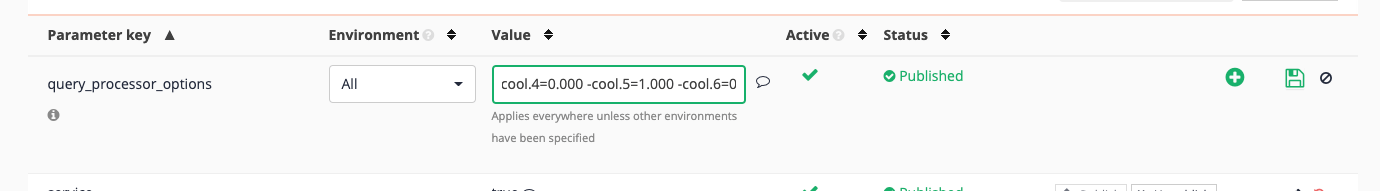

Select edit results page configuration from the customize section. The index can be scoped by setting an additional query processor option.

Query processor options are settings that can are applied dynamically to a Funnelback search when a query is made to the search index. The options can control display, ranking and scoping of the search results and can be varied for each query that is run. -

Add a query processor option to scope the results page - various options can be used to scope the results page including

scope,xscope,gscope1andclive. Thecliveparameter is a special scoping parameter that can be applied to results page to restrict the results to only include pages from a specified data source or set of data sources. The clive parameter takes a list of data source IDs to include in the scoped set of data. Add acliveparameter to scope the results page to pages from the training~ds-shakespeare data source then click the save button, (but do not publish) the file. You can find the data source ID in the information panel at the top of the results page management screen.-clive=training~ds-shakespeare

-

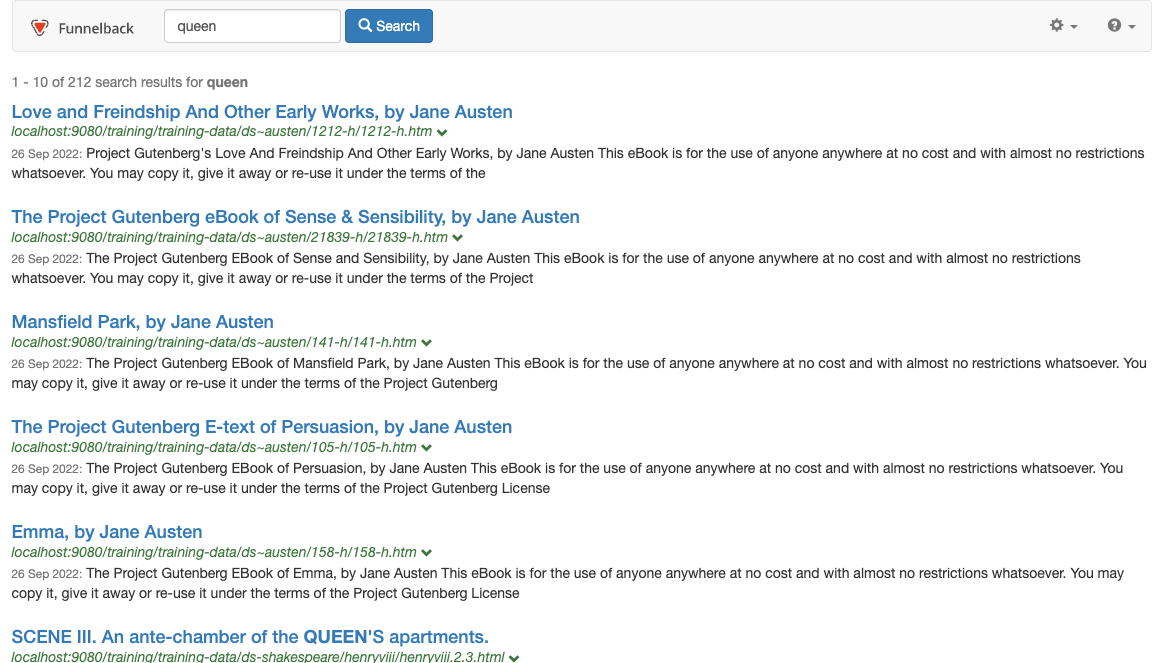

Rerun the search for dream against the preview version of the Shakespeare search results page and observe that the results are now restricted to only pages from the Shakespeare site.

-

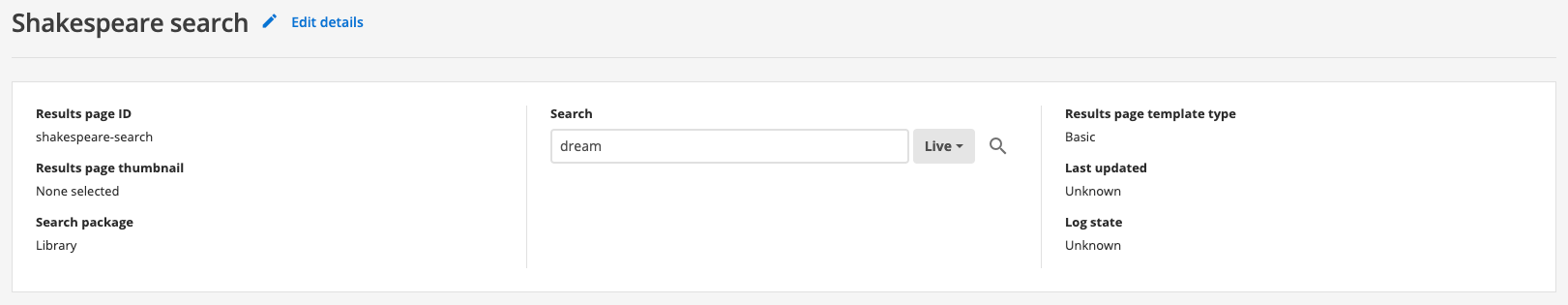

Rerun the search for dream against the live version of the Shakespeare search results page. Observe that pages are returned from both sites - this is because the query processor options must be published for the changes to take effect on the live version of the results page.

-

Edit results page configuration again, and publish the query processor options to make the setting affect the live version of the results page.

-

Rerun the search for dream against the Shakespeare search results page. Observe that pages are now restricted to Shakespeare site pages.

Tutorial: Set default results page display and ranking options

Display and ranking (and scoping) options can be set independently for each results page.

This allows the same search package to be used to serve search results with quite different ranking/display options or to be scoped to a subset of the search package’s included data. These options are set in the same way as the scoping from the previous exercise by adding options to the query processor options.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Navigate to the Shakespeare search results page

-

Select edit results page configuration from the customize section that was edited in the previous exercise. Set the results page to return five results per page and sort alphabetically then save and publish the changes.

-clive=training~ds-shakespeare -sort=title -num_ranks=5 -

Rerun the search for dream against the Shakespeare search results page specifying and observe that the results are sorted alphabetically by title and only 5 results are returned per page. Results are all from the Shakespeare website.

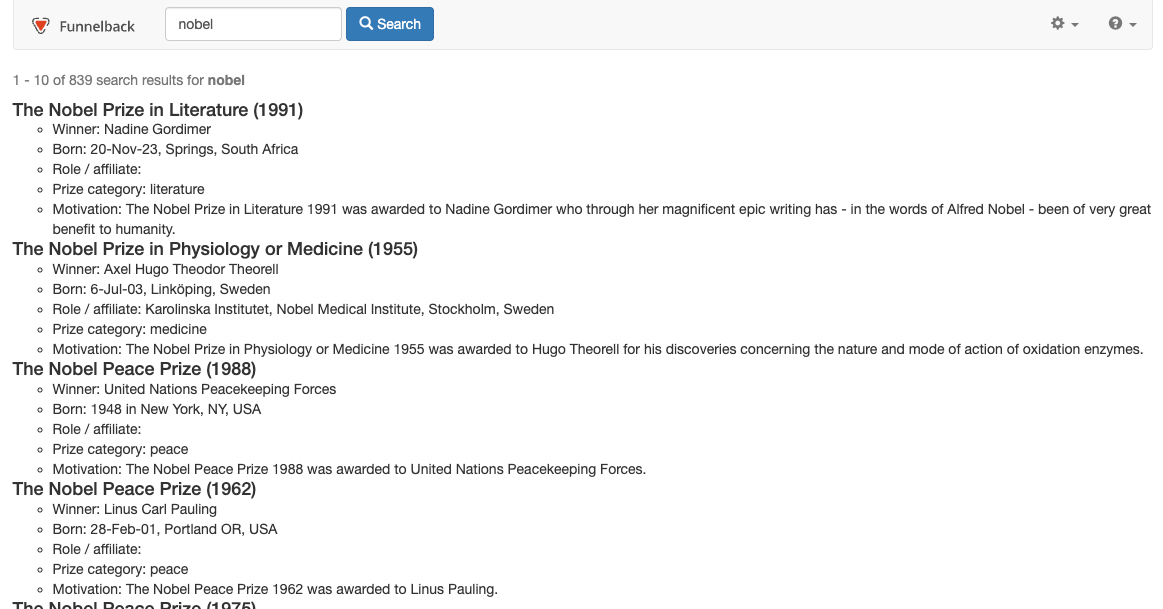

6. Working with XML content

Funnelback can index XML documents and there are some additional configuration files that are applicable when indexing XML files.

-

You can map elements in the XML structure to Funnelback metadata classes.

-

You can display cached copies of the document via XSLT processing.

Funnelback can be configured to index XML content, creating an index with searchable, fielded data.

Funnelback metadata classes are used for the storage of XML data – with configuration that maps XML element paths to internal Funnelback metadata classes – the same metadata classes that are used for the storage of HTML page metadata. An element path is a simple XML X-Path.

XML files can be optionally split into records based on an X-Path. This is useful as XML files often contain a number of records that should be treated as individual result items.

Each record is then indexed with the XML fields mapped to internal Funnelback metadata classes as defined in the XML mappings configuration file.

6.1. XML configuration

The data source’s XML configuration defines how Funnelback’s XML parser will process any XML files that are found when indexing.

The XML configuration is made up of two parts:

-

XML special configuration

-

Metadata classes containing XML field mappings

The XML parser is used for the parsing of XML documents and also for indexing of most non-web data. The XML parser is used for:

-

XML, CSV and JSON files,

-

Database, social media, directory, HP CM/RM/TRIM and most custom data sources.

6.2. XML element paths

Funnelback element paths are simple X-Paths that select on fields and attributes.

Absolute and unanchored X-Paths are supported, however for some special XML fields absolute paths are required.

-

If the path begins with

/then the path is absolute (it matches from the top of the XML structure). -

If the path begins with

//it is unanchored (it can be located anywhere in the XML structure).

XML attributes can be used by adding @attribute to the end of the path.

Element paths are case sensitive.

| Attribute values are not supported in element path definitions. |

Example element paths:

| X-Path | Valid Funnelback element path |

|---|---|

|

VALID |

|

VALID |

|

VALID |

|

VALID |

|

NOT VALID |

6.2.1. Interpretation of field content

CDATA tags can be used with fields that contain reserved characters or the characters should be HTML encoded.

Fields containing multiple values should be delimited with a vertical bar character or the field repeated with a single value in each repeated field.

For example: The indexed value of //keywords/keyword and //subject below would be identical.

<keywords>

<keyword>keyword 1</keyword>

<keyword>keyword 2</keyword>

<keyword>keyword 3</keyword>

</keywords>

<subject>keyword 1|keyword 2|keyword 3</subject>6.3. XML special configuration

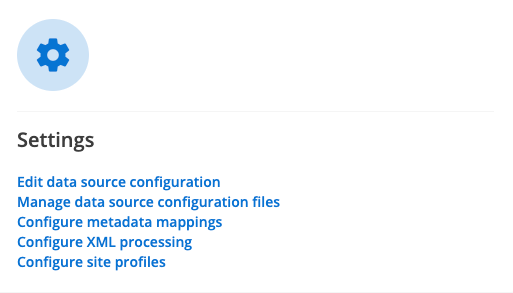

There are a number of special properties that can be configured when working with XML files. These options are defined from the XML configuration screen, by selecting configure XML processing from the settings panel on the data source management screen.

6.3.1. XML document splitting

A single XML file is commonly used to describe many items. Funnelback includes built-in support for splitting an XML file into separate records.

Absolute X-Paths must be used and should reference the root element of the items that should be considered as separate records.

| Splitting an XML document using this option is not available on push data sources. The split XML/HTML document plugin can be used to split documents for push data sources. |

6.3.2. Document URL

The document URL field can be used to identify XML fields containing a unique identifier that will be used by Funnelback as the URL for the document. If the document URL is not set then Funnelback auto-generates a URL based on the URL of the XML document. This URL is used by Funnelback to internally identify the document, but is not a real URL.

|

Setting the document URL to an XML attribute is not supported. Setting a document url is not available for push data sources. |

6.3.3. Document file type

The document file type field can be used to identify an XML field containing a value that indicates the filetype that should be assigned to the record. This is used to associate a file type with the item that is indexed. XML records are commonly used to hold metadata about a record (for example, from a records management system) and this may be all the information that is available to Funnelback when indexing a document from such as system.

6.3.4. Special document elements

The special document elements can be used to tell Funnelback how to handle elements containing content.

Inner HTML or XML documents

The content of the XML field will be treated as a nested document and parsed by Funnelback and must be XML encoded (i.e. with entities) or wrapped in a CDATA declaration to ensure that the main XML document is well-formed.

The indexer will guess the nested document type and select the appropriate parser:

The nested document will be parsed as XML if (once decoded) it is well-formed XML and starts with an XML declaration similar to <?xml version="1.0" encoding="UTF-8" />. If the inner document is identified as XML it will be parsed with the XML parser and any X-Paths of the nested document can also be mapped. Note: the special XML fields configured on the advanced XML processing screen do not apply to the nested document. For example, this means you can’t split a nested document.

The nested document will be parsed as HTML if (once decoded) when it starts with a root <html> tag. Note that if the inner document contains HTML entities but doesn’t start with a root <html> tag, it will not be detected as HTML. If the inner document is identified as HTML and contains metadata then this will be parsed as if it was an HTML document, with embedded metadata and content extracted and associated with the XML records. This means that metadata fields included in the embedded HTML document can be mapped in the metadata mappings along with the XML fields.

The inner document in the example below will not be detected as HTML:

<root>

<name>Example</name>

<inner>This is <strong>an example</strong></inner>

</root>This one will:

<root>

<name>Example</name>

<inner><html>This is <strong>an example</strong></html></inner>

</root>Indexable document content

Any listed X-Paths will be indexed as un-fielded document content - this means that the content of these fields will be treated as general document content but not mapped to any metadata class (similar to the non-metadata field content of an HTML file).

For example, if you have the following XML document:

<?xml version="1.0" encoding="UTF-8"?>

<root>

<title>Example</title>

<inner>

<![CDATA[

<html>

<head>

<meta name="author" content="John Smith">

</head>

<body>

This is an example

</body>

</html>

]]>

</inner>

</root>With an Indexable document content path to //root/inner, the document content will have This is an example; however, the metadata author will not be mapped to "John Smith". To have the metadata mapped as well, the Inner HTML or XML document path should be used instead.

Include unmapped elements as content

If there are no indexable document content paths mapped, Funnelback can optionally choose to how to handle the unmapped fields. When this option is selected then all unmapped XML fields will be considered part of the general document content.

Tutorial: Creating an XML data source

In this exercise you will create a searchable index based off records contained within an XML file.

A web data source is used to create a search of a content sourced by crawling one or more websites. This can include the fetching of one or more specific URLs for indexing.

For this exercise we will use a web data source, though XML content can exist in many different data sources types (for example, custom, database, directory, social media).

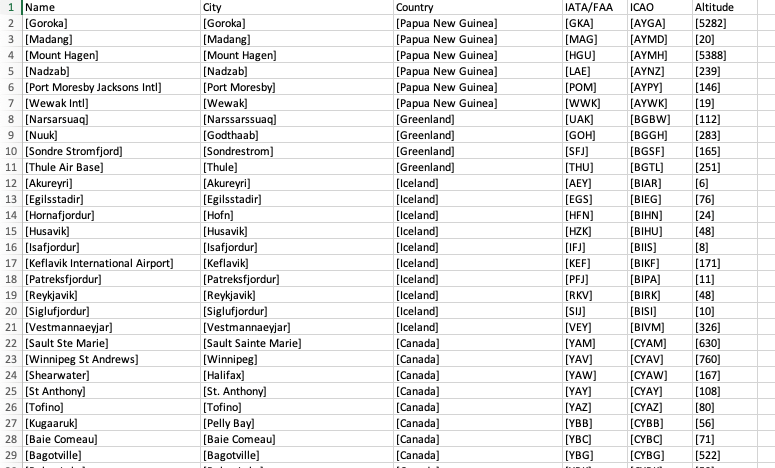

The XML file that we will be indexing includes a number of individual records that are contained within a single file, an extract of which is shown below.

<?xml version="1.0" encoding="UTF-8"?>

<tsvdata>

<row>

<Airport_ID>1</Airport_ID>

<Name>Goroka</Name>

<City>Goroka</City>

<Country>Papua New Guinea</Country>

<IATA_FAA>GKA</IATA_FAA>

<ICAO>AYGA</ICAO>

<Latitude>-6.081689</Latitude>

<Longitude>145.391881</Longitude>

<Altitude>5282</Altitude>

<Timezone>10</Timezone>

<DST>U</DST>

<TZ>Pacific/Port_Moresby</TZ>

<LATLONG>-6.081689;145.391881</LATLONG>

</row>

<row>

<Airport_ID>2</Airport_ID>

<Name>Madang</Name>

<City>Madang</City>

<Country>Papua New Guinea</Country>

<IATA_FAA>MAG</IATA_FAA>

<ICAO>AYMD</ICAO>

<Latitude>-5.207083</Latitude>

<Longitude>145.7887</Longitude>

<Altitude>20</Altitude>

<Timezone>10</Timezone>

<DST>U</DST>

<TZ>Pacific/Port_Moresby</TZ>

<LATLONG>-5.207083;145.7887</LATLONG>

</row>

<row>

<Airport_ID>3</Airport_ID>

<Name>Mount Hagen</Name>

<City>Mount Hagen</City>

<Country>Papua New Guinea</Country>

<IATA_FAA>HGU</IATA_FAA>

...Indexing an XML file like this requires two main steps:

-

Configuring Funnelback to fetch and split the XML file

-

Mapping the XML fields for each record to Funnelback metadata classes.

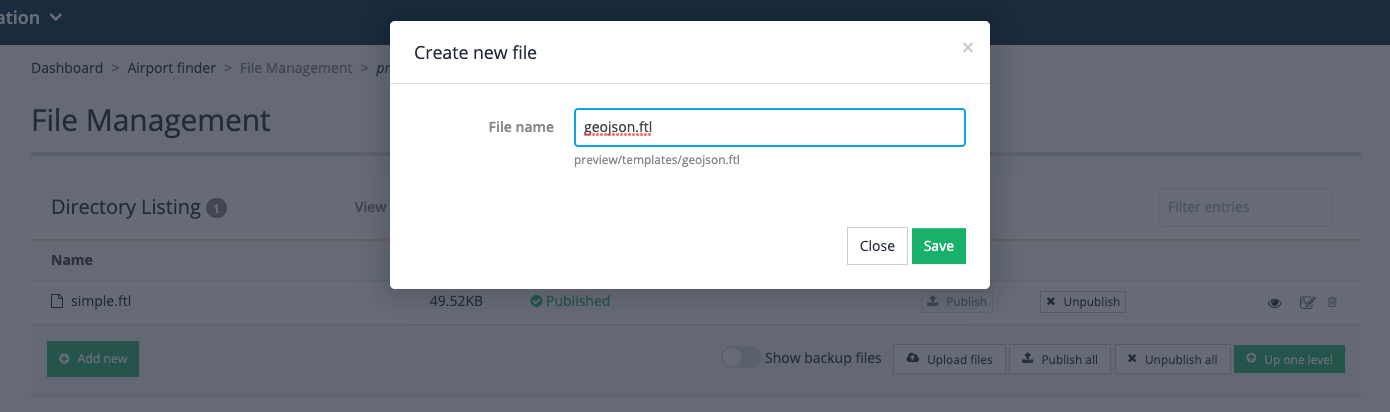

Exercise steps

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Create a new search package named

Airports. -

Add a web data source to this search package with the following attributes:

- Type

-

web

- Name

-

Airports data - What website(s) do you want to crawl?

-

https://docs.squiz.net/training-resources/airports/airports.xml

-

Update the data source.

-

Create a results page (as part of the Airports search package) named

Airport finder. -

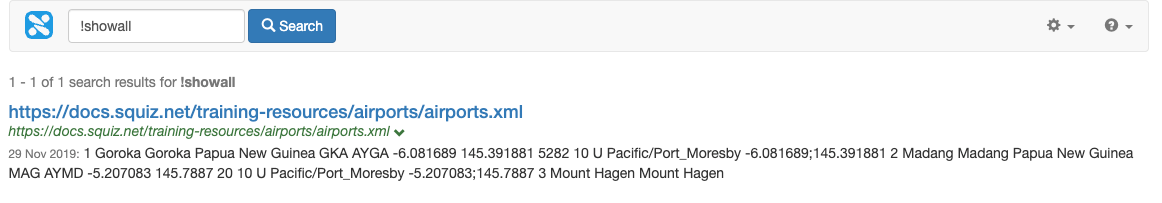

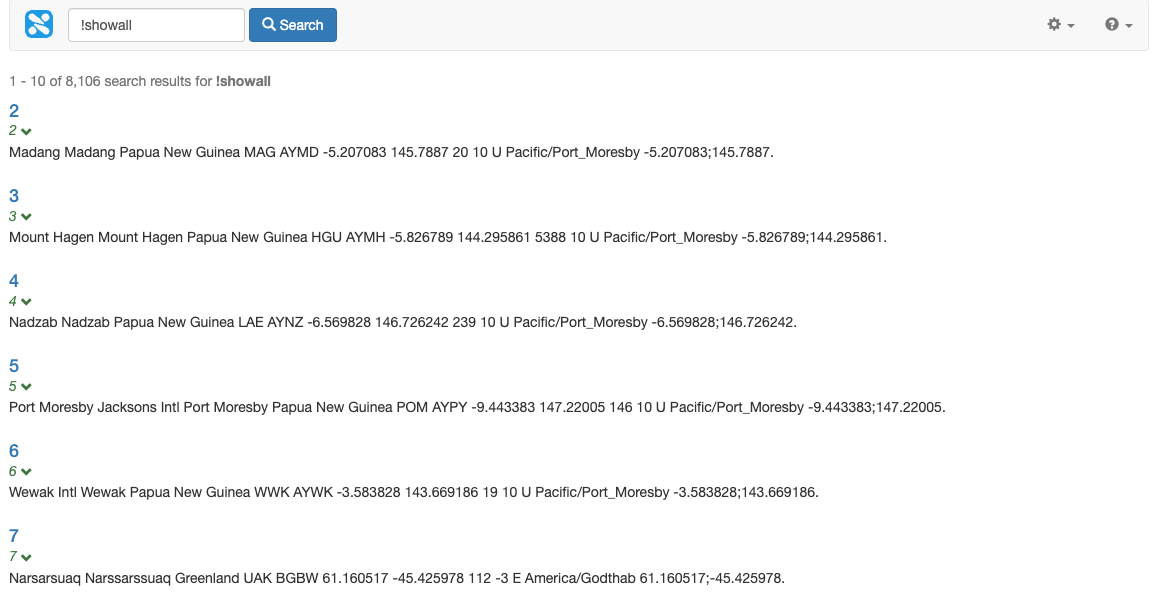

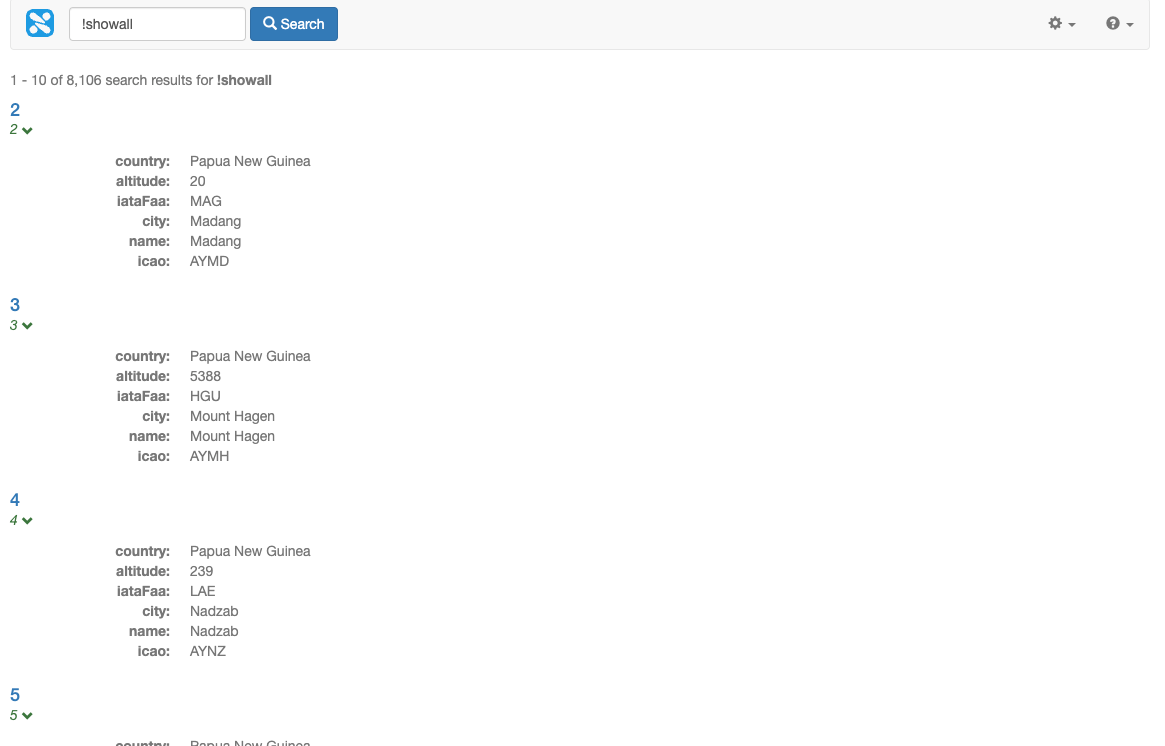

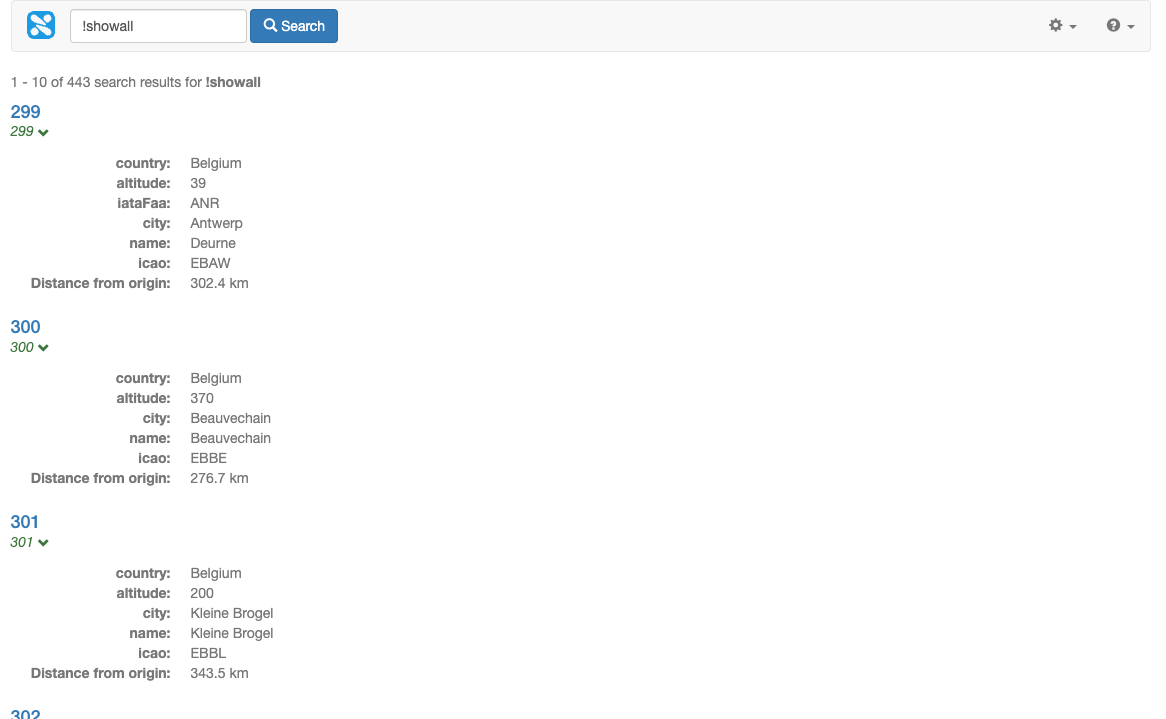

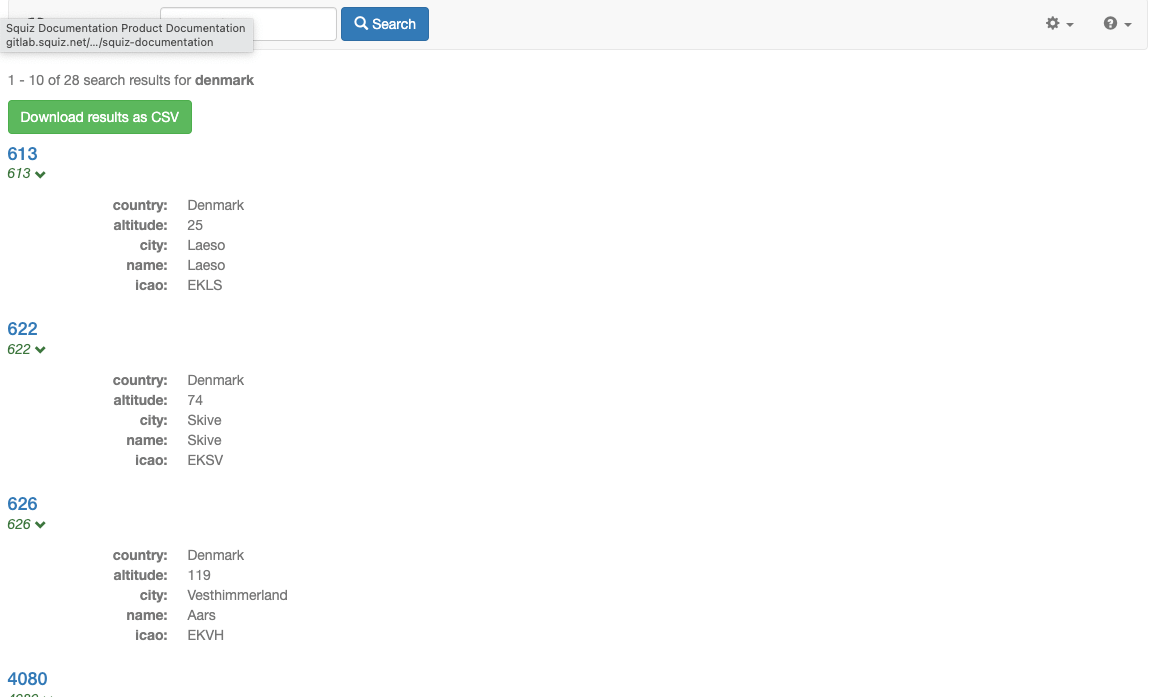

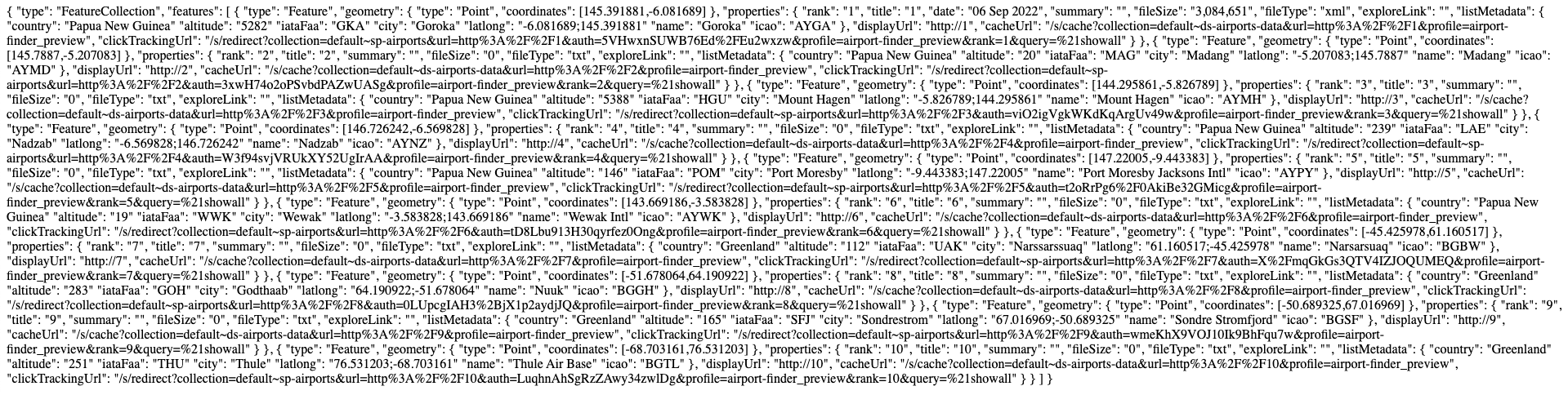

Run a search for !showall using the search preview. Observe that only one result (to the source XML file) is returned.

if you place an exclamation mark before a word in a query this negates the term and the results will include items that don’t contain the word. For example, !showall, used in the example above, means return results that do not contain the word showall.

-

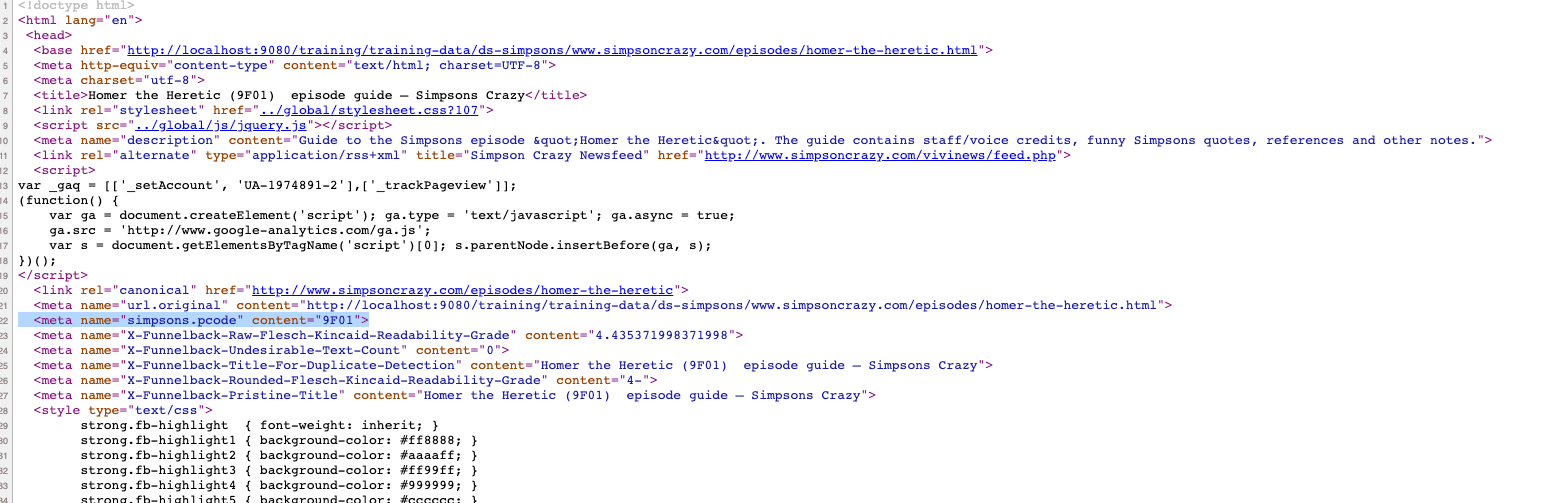

Configure Funnelback to split the XML files into records. To do this we need to inspect the XML file(s) to see what elements are available.

Ideally you will know the structure of the XML file before you start to create your collection. However, if you don’t know this and the XML file isn’t too large you might be able to view it by inspecting the cached version of the file, available from the drop down link at the end of the URL. If the file is too large the browser may not be able to display the file. Inspecting the XML (displayed above) shows that each airport record is contained within the

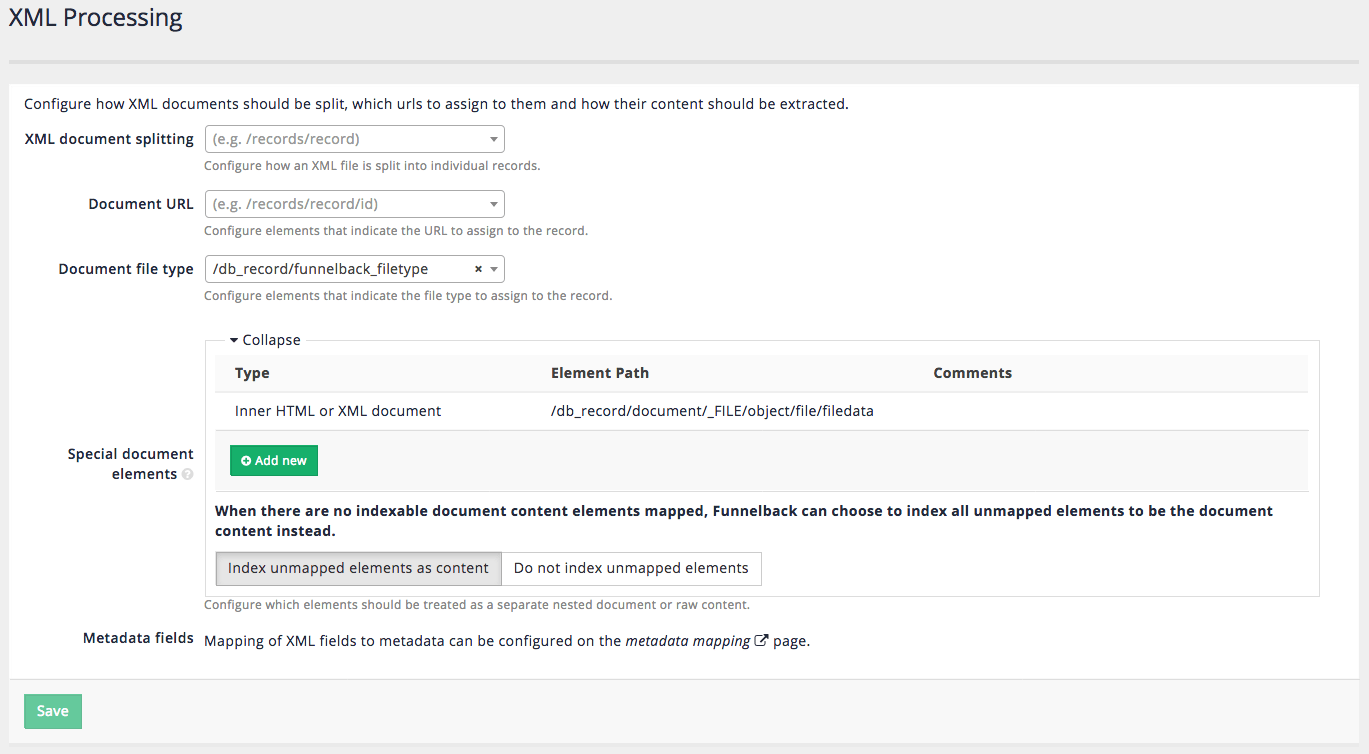

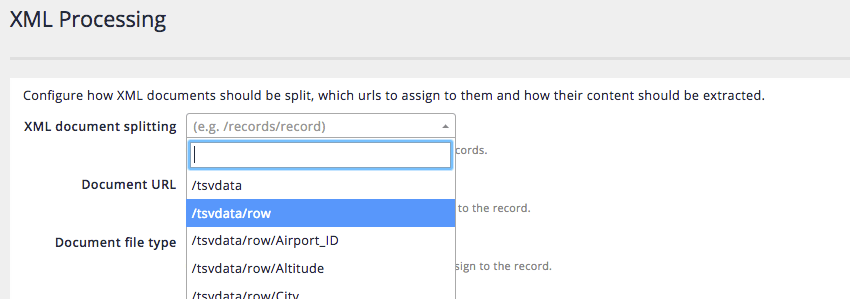

<row>element that is nested beneath the top level<tsvdata>element. This translates to an X-Path of/tsvdata/row.Navigate to the manage data source screen and select configure XML processing from the settings panel. The XML processing screen is where all the special XML options are set.

-

The XML document splitting field configures the X-Path(s) that are used to split an XML document. Select

/tsvdata/rowfrom the listed fields.

-

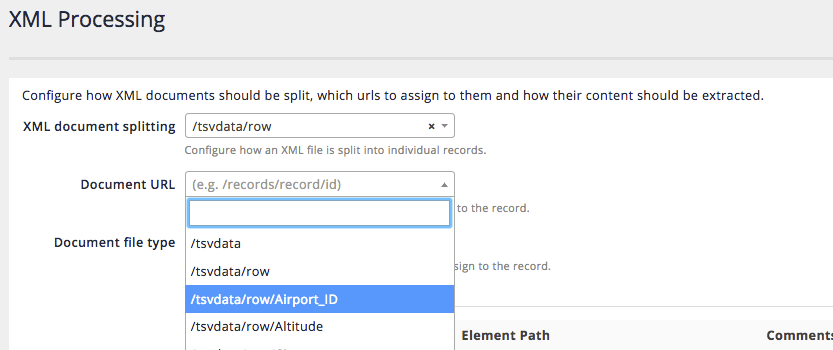

If possible also set the document URL to an XML field that contains a unique identifier for the XML record. This could be a real URL, or some other sort of ID. Inspecting the airports XML shows that the Airport_ID can be used to uniquely identify the record. Select

/tsvdata/row/Airport_IDfrom the dropdown for the document URL field.

If you don’t set a document URL Funnelback will automatically assign a URL. -

Leave the other fields unchanged then click the save button.

-

The index must be rebuilt for any XML processing changes to be reflected in the search results. Return to the manage data source screen then rebuild the index by selecting start advanced update from the update panel, then selecting rebuild live index.

-

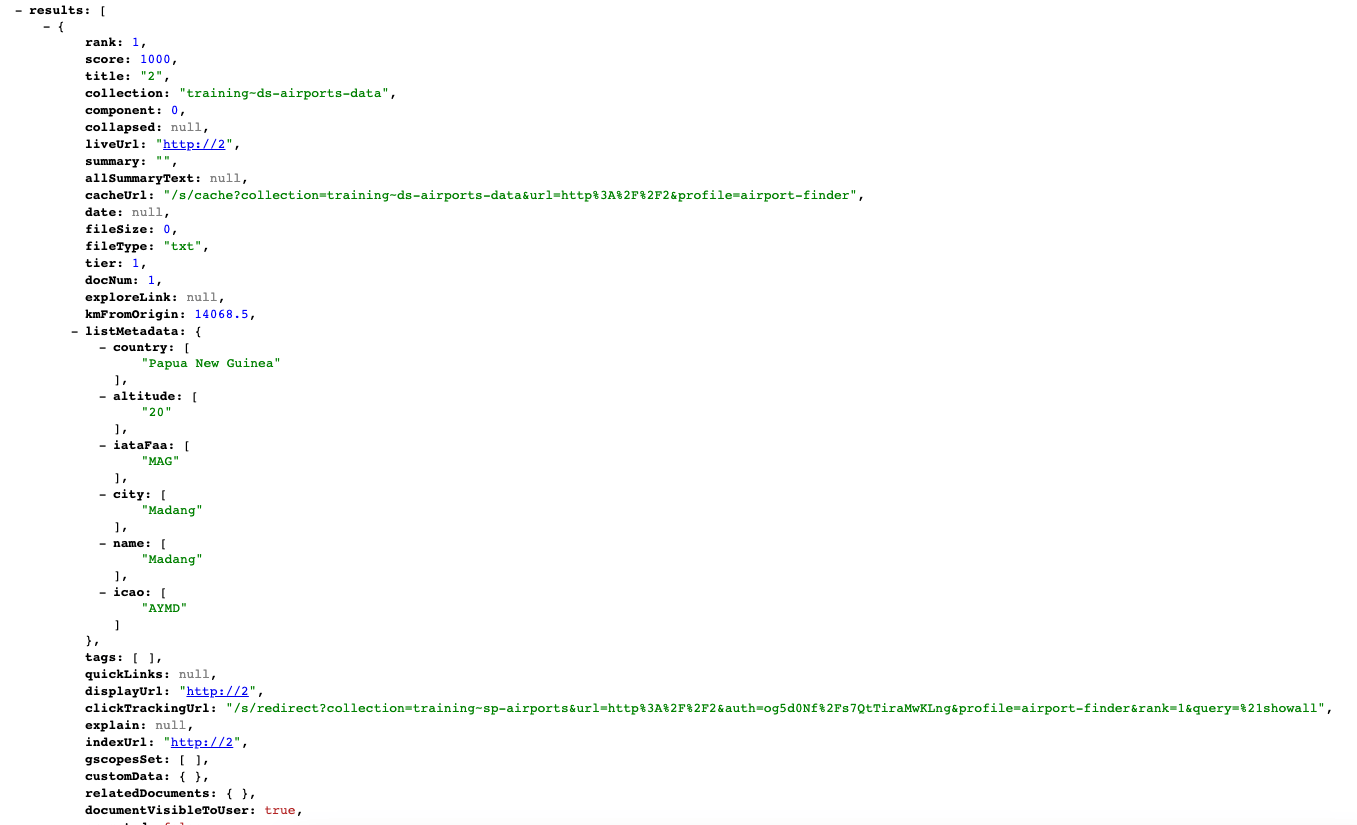

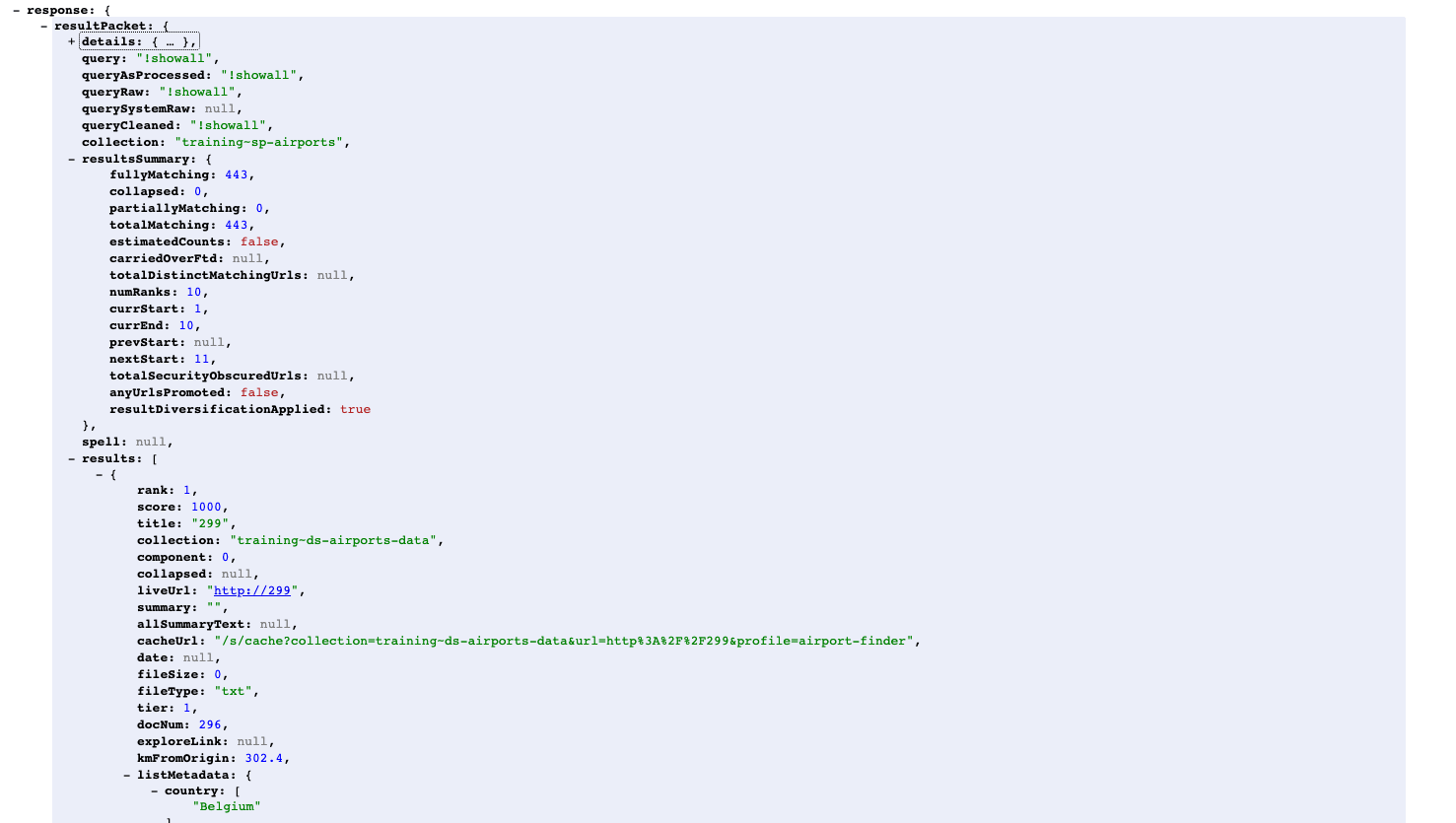

Run a search for !showall using the search preview and confirm that the XML file is now being split into separate items. The search results display currently only shows the URLs of each of the results (which in this case is just an ID number). In order to display sensible results the XML fields must be mapped to metadata and displayed by Funnelback.

-

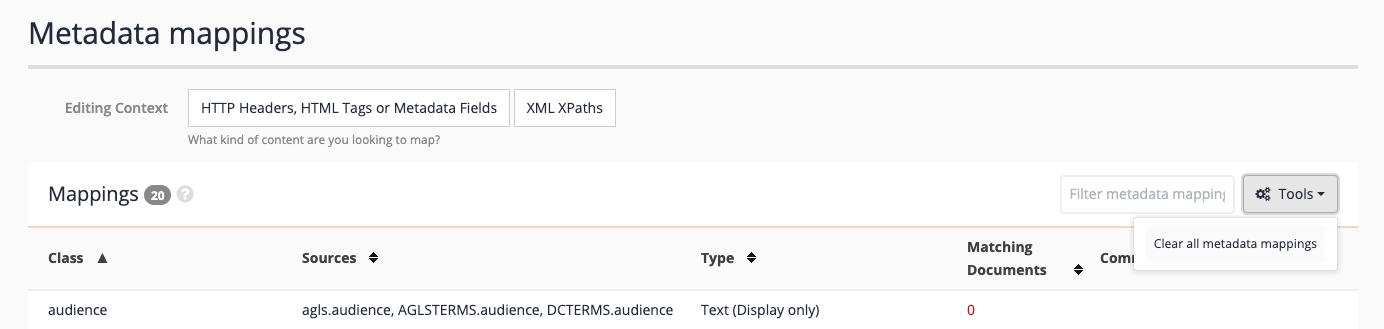

Map XML fields by selecting configure metadata mappings from the settings panel.

-

The metadata screen lists a number of pre-configured mappings. Because this is an XML data set, the mappings will be of no use so clear all the mappings by selecting clear all metadata mappings from the tools menu.

-

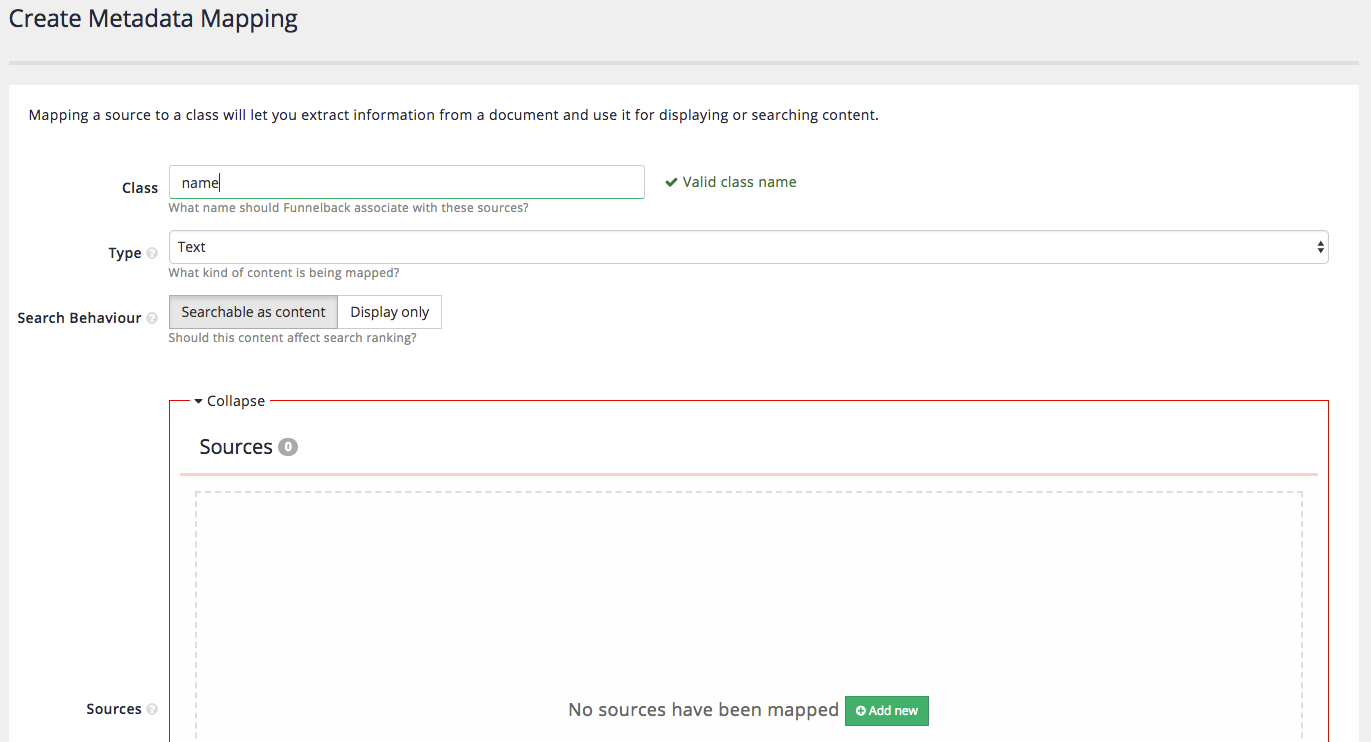

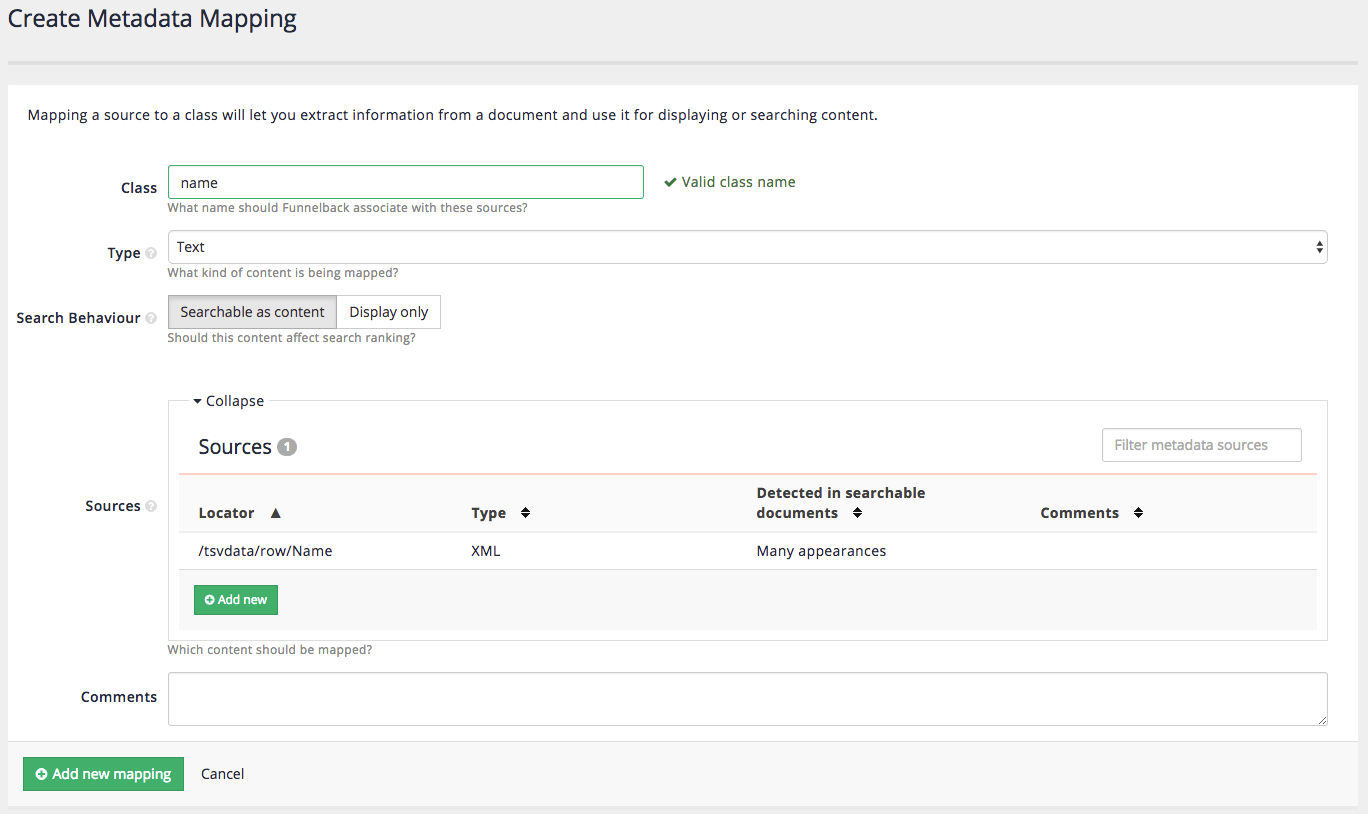

Click the add new button to add a new metadata mapping. Create a class called name that maps the

<Name>xml field. Enter the following into the creation form:- Class

-

name - Type

-

Text

- Search behavior

-

Searchable as content

-

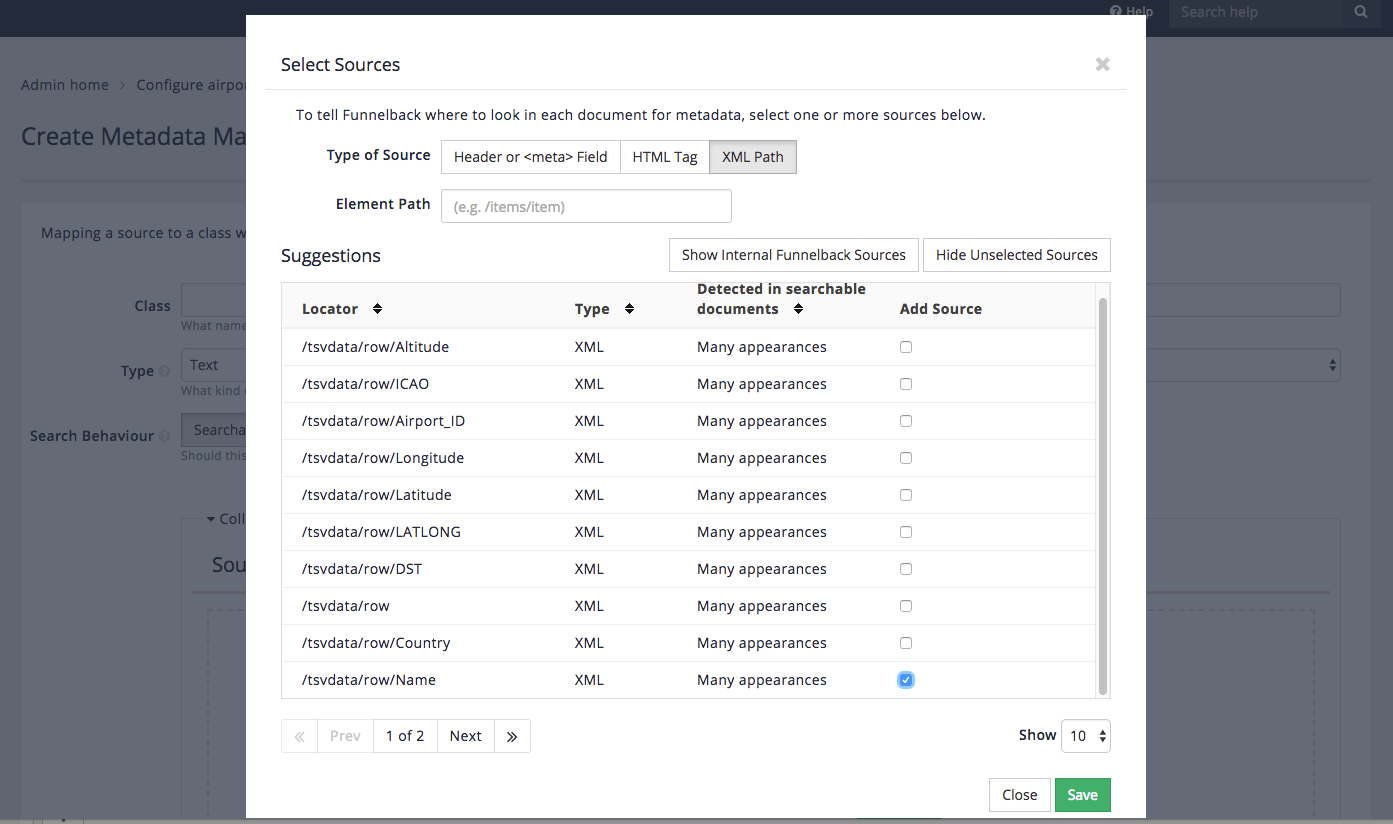

Add a source to the metadata mapping. Click the add new button in the sources box. This opens up a window that displays the metadata sources that were detected when the index was built. Display the detected XML fields by clicking on the XML path button for the type of source, then choose the Name (

/tsvdata/row/Name) field from the list of available choices the click the save button.

-

You are returned to the create mapping screen. Click the add new mapping button to create the mapping.

-

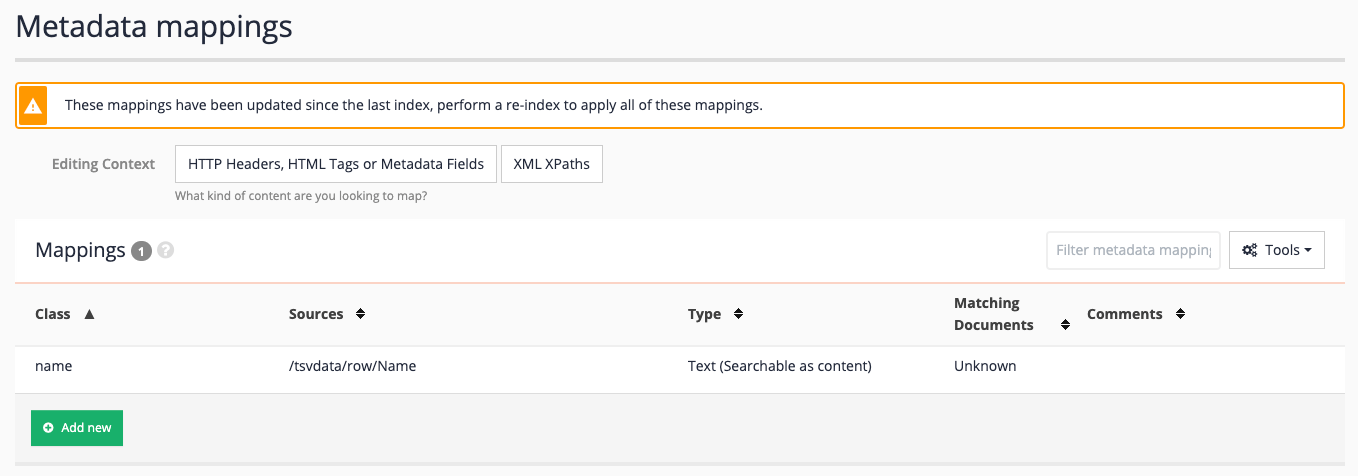

The metadata mappings screen updates to show the newly created mapping for Name.

-

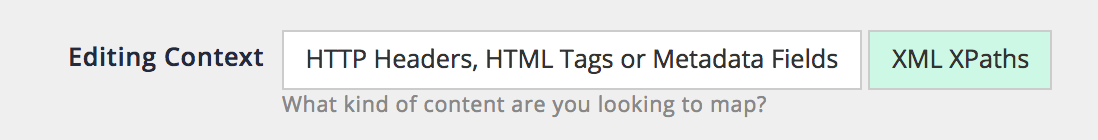

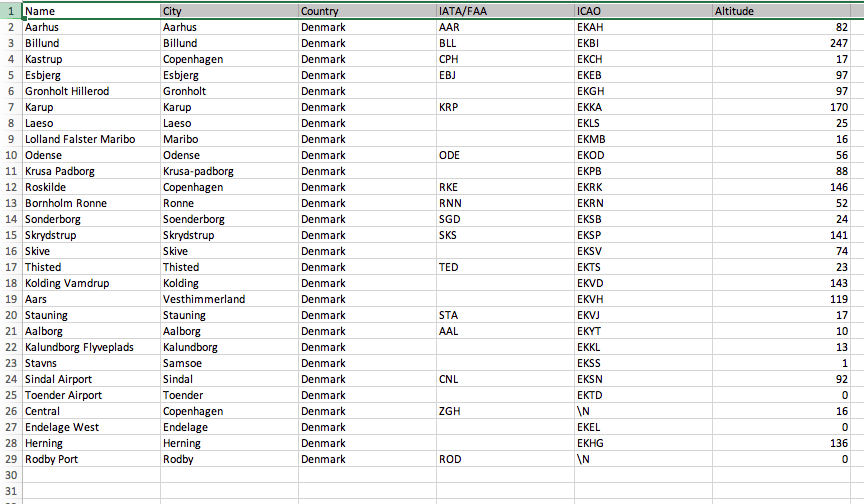

Repeat the above process to add the following mappings. Before adding the mapping switch the editing context to XML - this will mean that XML elements are displayed by default when selecting the sources.

Class name Source Type Search behaviour city/tsvdata/row/Citytext

searchable as content

country/tsvdata/row/Countrytext

searchable as content

iataFaa/tsvdata/row/IATA_FAAtext

searchable as content

icao/tsvdata/row/ICAOtext

searchable as content

altitude/tsvdata/row/Altitudetext

searchable as content

latlong/tsvdata/row/LATLONGtext

display only

latitude/tsvdata/row/Latitudetext

display only

longitude/tsvdata/row/Longitudetext

display only

timezone/tsvdata/row/Timezonetext

display only

dst/tsvdata/row/DSTtext

display only

tz/tsvdata/row/TZtext

display only

-

Note that the metadata mappings screen is displaying a message:

These mappings have been updated since the last index, perform a re-index to apply all of these mappings.

Rebuild the index by navigating to the manage data source screen then selecting start advanced update from the settings panel. Select rebuild live index from the rebuild live index section and click the update button.

-

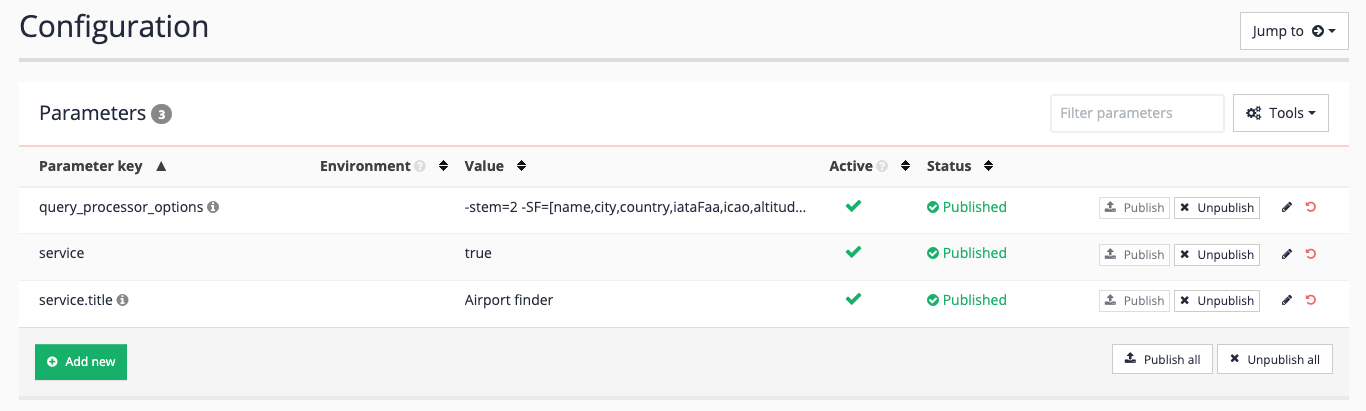

Display options will need to be configured so that the metadata is returned with the search results. Reminder: this needs to be set up on the airport finder results page. Edit the results page configuration, and add summary fields to include the name, city, country, iataFaa, icao and altitude metadata to the query processor options:

-stem=2 -SF=[name,city,country,iataFaa,icao,altitude] -

Rerun the search for !showall and observe that metadata is now returned for the search result.

-

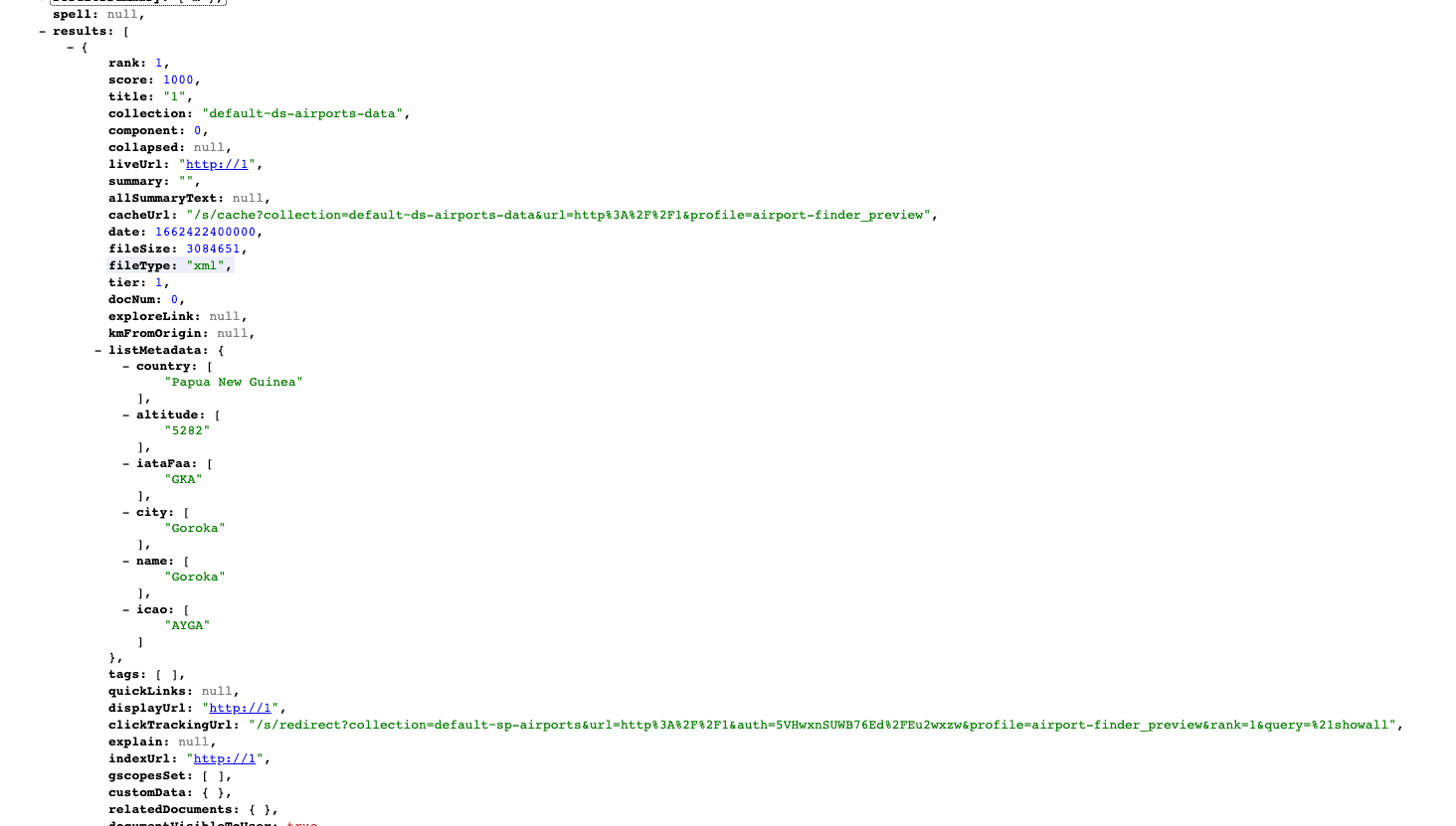

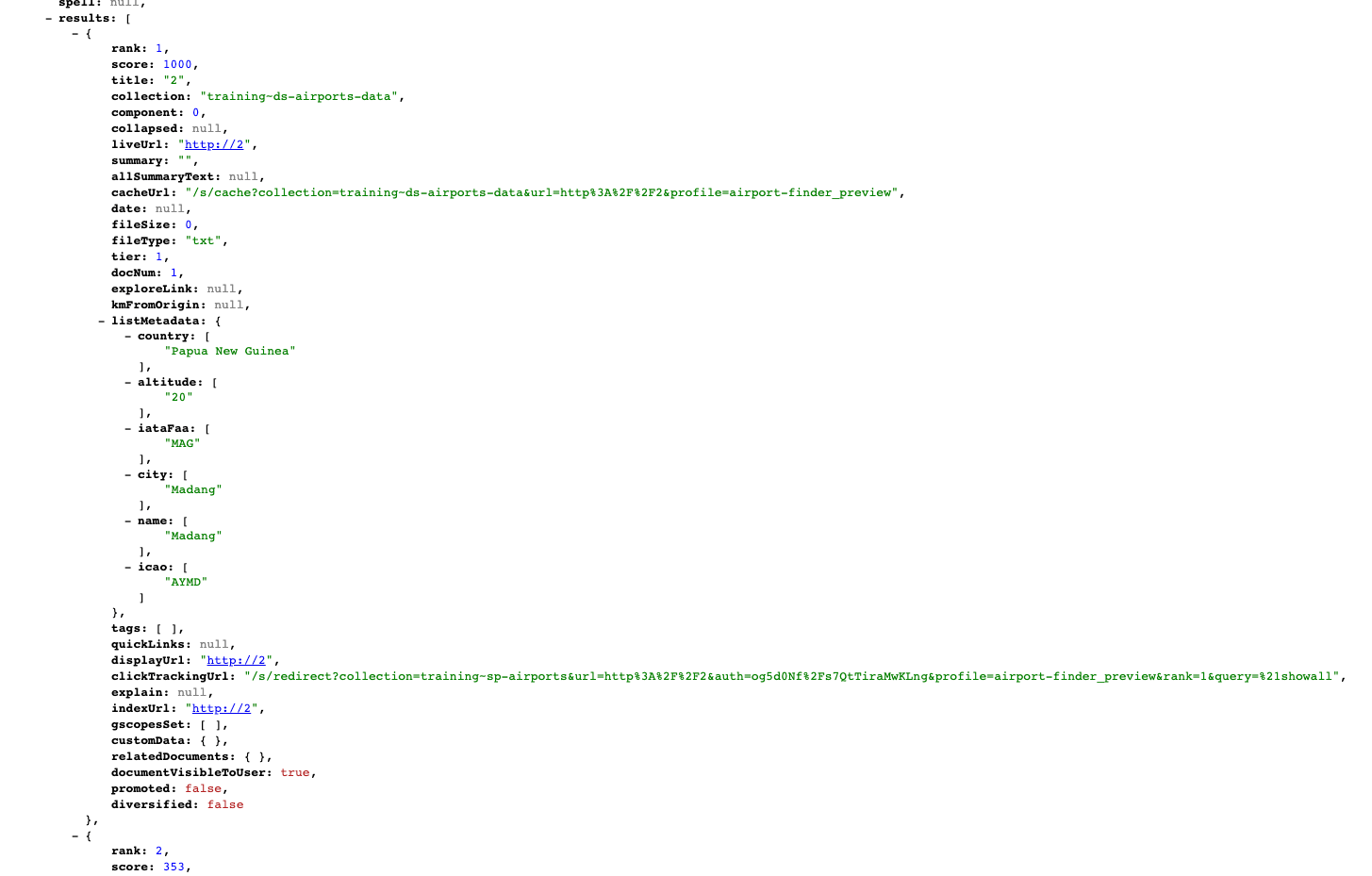

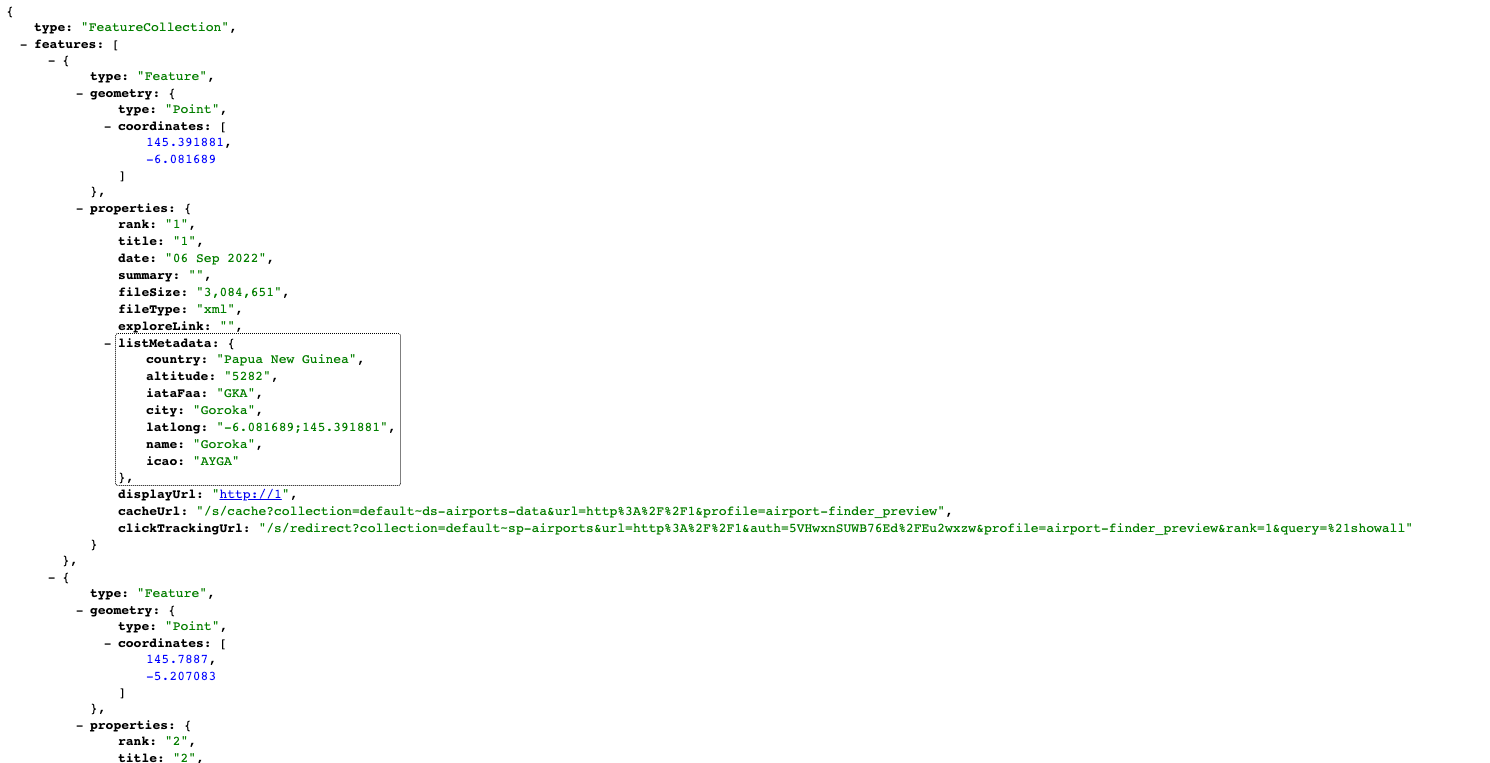

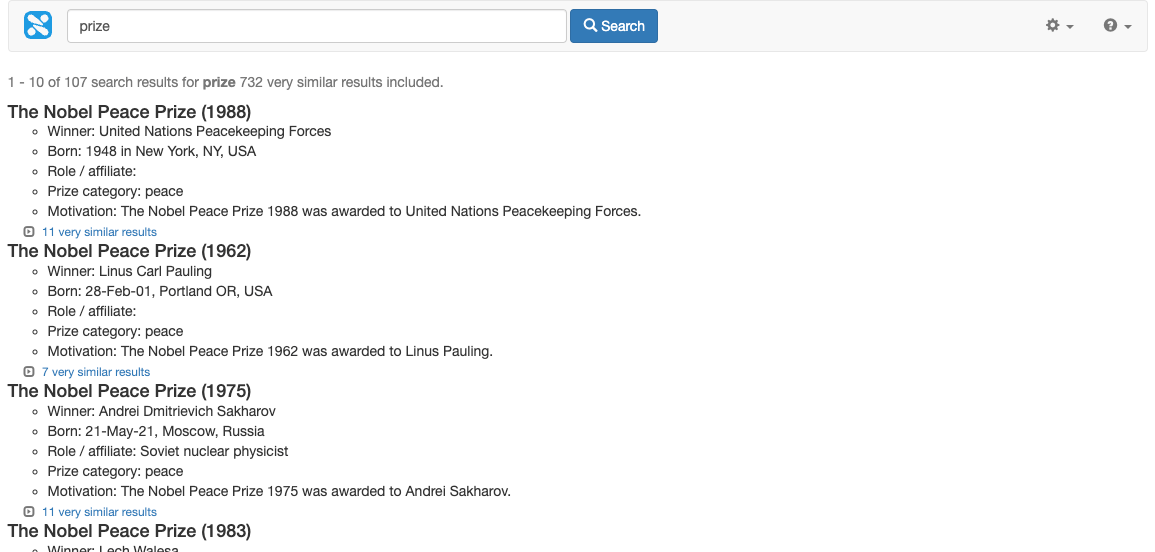

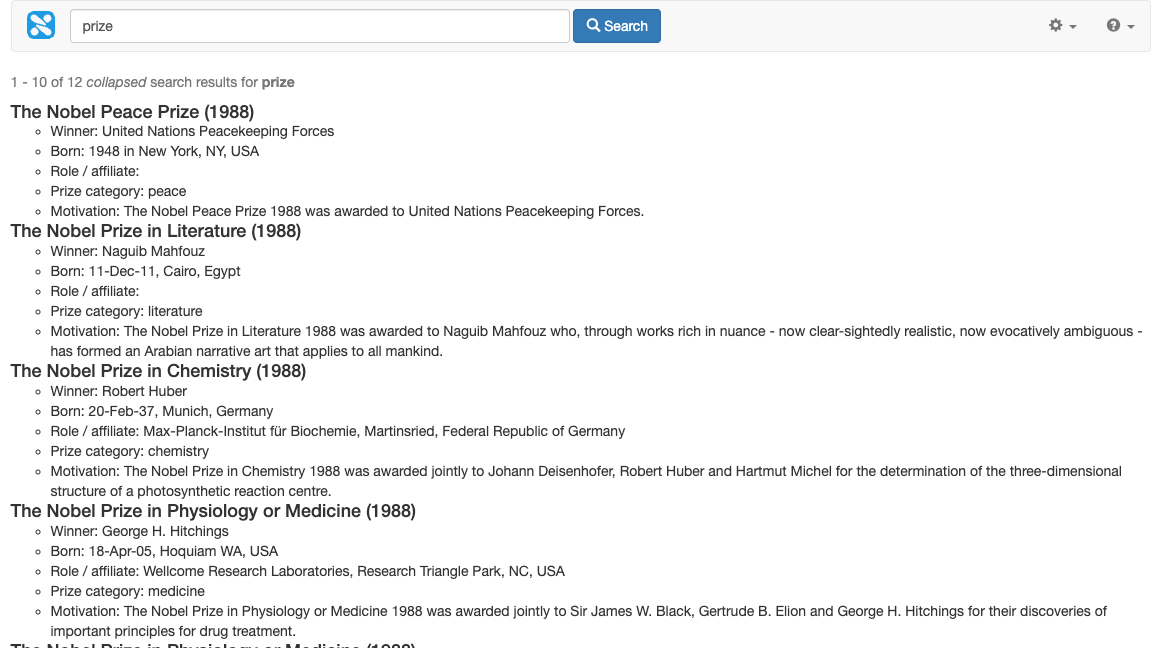

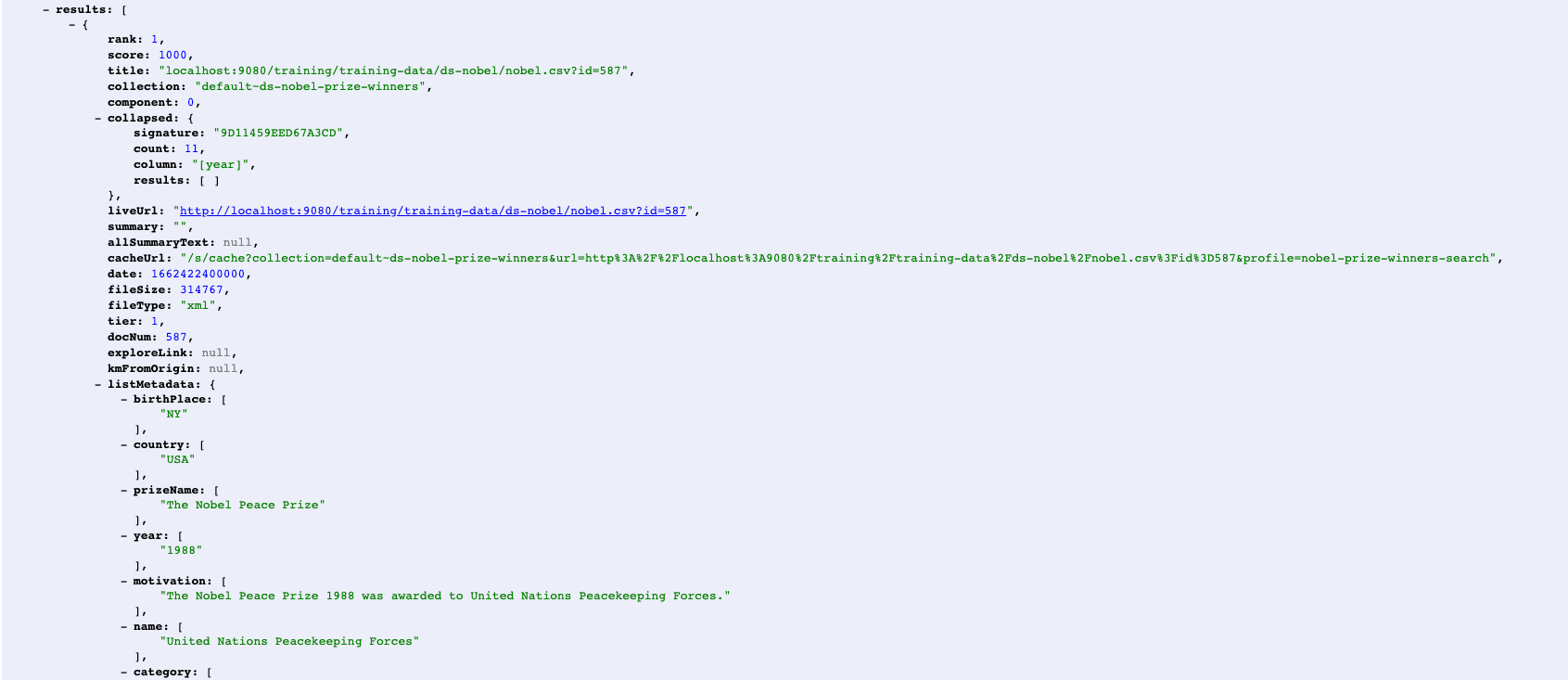

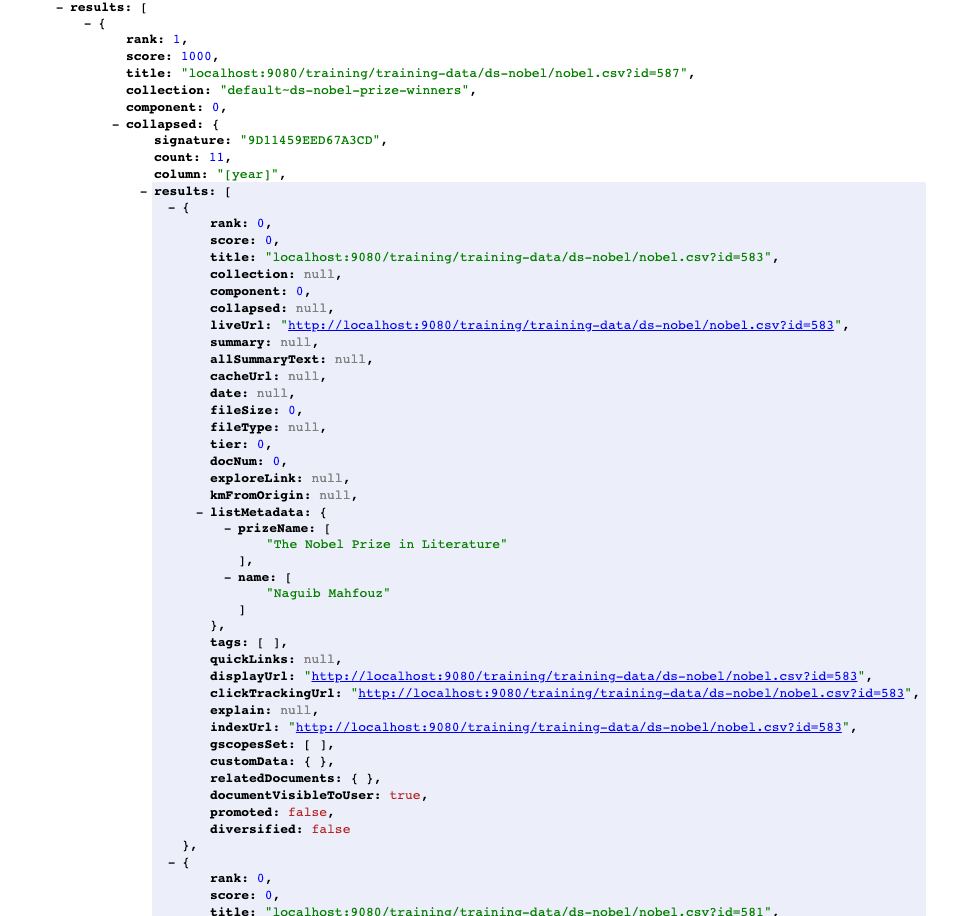

Inspect the data model. Reminder: edit the url changing

search.htmltosearch.json. Inspect the response element - each result should have fields populated inside thelistMetadatasub elements of the result items. These can then be accessed from your Freemarker template and printed out in the search results.

7. Advanced metadata

7.1. Geospatial and numeric metadata

Recall that Funnelback supports five types of metadata classes:

-

Text: The content of this class is a string of text.

-

Geospatial x/y coordinate: The content of this field is a decimal latlong value in the following format:

geo-x;geo-y(for example,2.5233;-0.95) This type should only be used if there is a need to perform a geospatial search (for example, This point is within X km of another point). If the geospatial coordinate is only required for plotting items on a map then a text field is sufficient. -

Number: The content of this field is a numeric value. Funnelback will interpret this as a number. This type should only be used if there is a need to use numeric operators when performing a search (for example,

X > 2050) or to sort the results in numeric order. If the field is only required for display within the search results text field is sufficient. -

Document permissions: The content of this field is a security lock string defining the document permissions. This type should only be used when working with an enterprise collection that includes document level security.

-

Date: A single metadata class supports a date, which is used as the document’s date for the purpose of relevance and date sorting. Additional dates for the purpose of display can be indexed as either a text or number type metadata class, depending on how you wish to use the field.

Funnelback’s text metadata type is sufficient for inclusion of metadata in the index appropriate for the majority of use cases.

The geospatial x/y coordinate and number metadata types are special metadata types that alter the way the indexed metadata value is interpreted, and provide type specific methods for working with the indexed value.

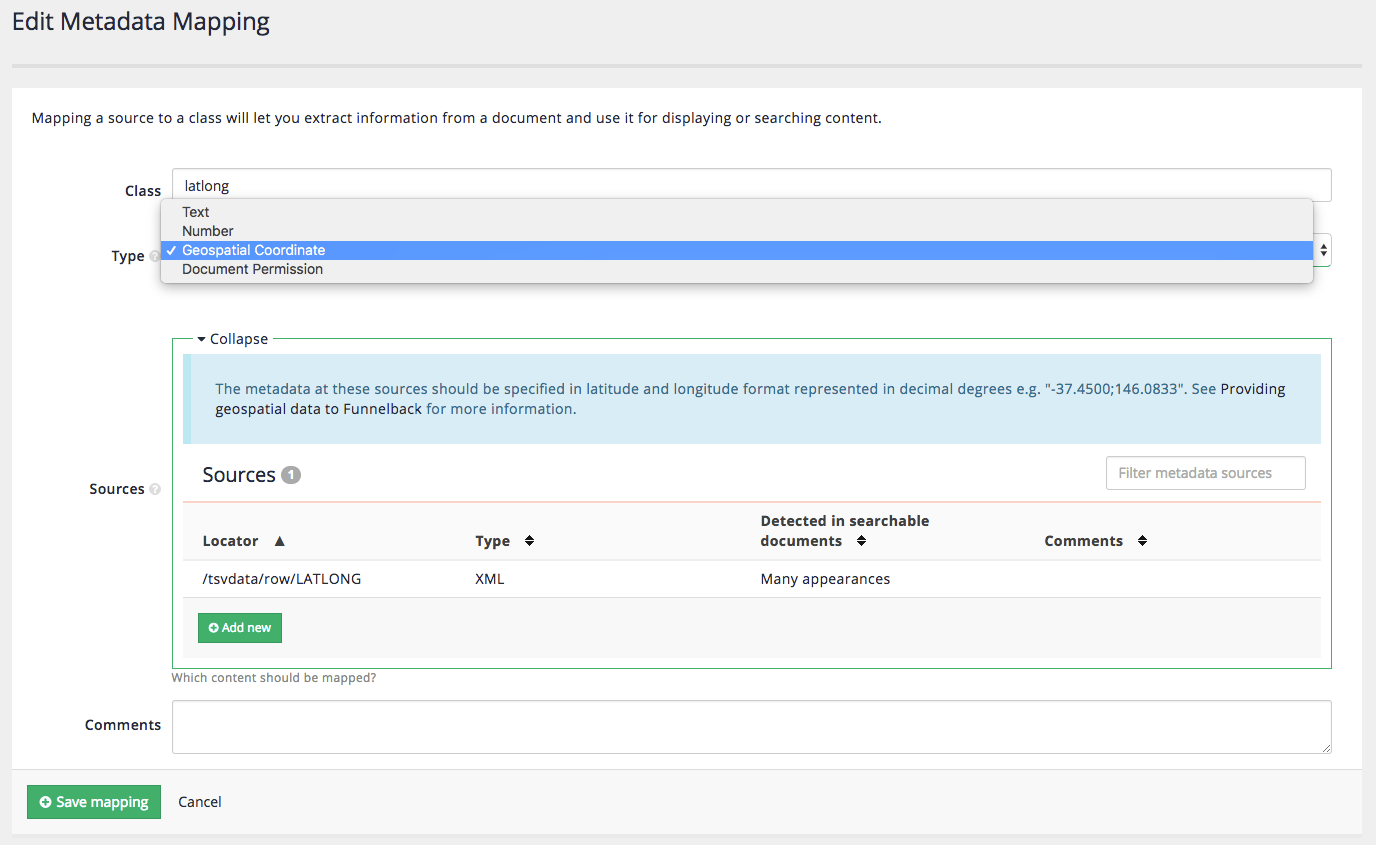

Defining a field as a geospatial x/y coordinate tells Funnelback to interpret the contents of the field as a decimal lat/long coordinate. (for example, -31.95516;115.85766). This is used by Funnelback to assign a geospatial coordinate to an index item (effectively pinning it to a single point on a map). A geospatial metadata field is useful if you wish to add any location-based search constraints such as (show me items within a specified distance to a specified origin point), or sort the results by proximity (closeness) to a specific point.

A geospatial x/y coordinate is not required if you just want to plot the item onto a map in the search results (a text type value will be fine as it’s just a text value you are passing to the mapping API service that will generate the map).

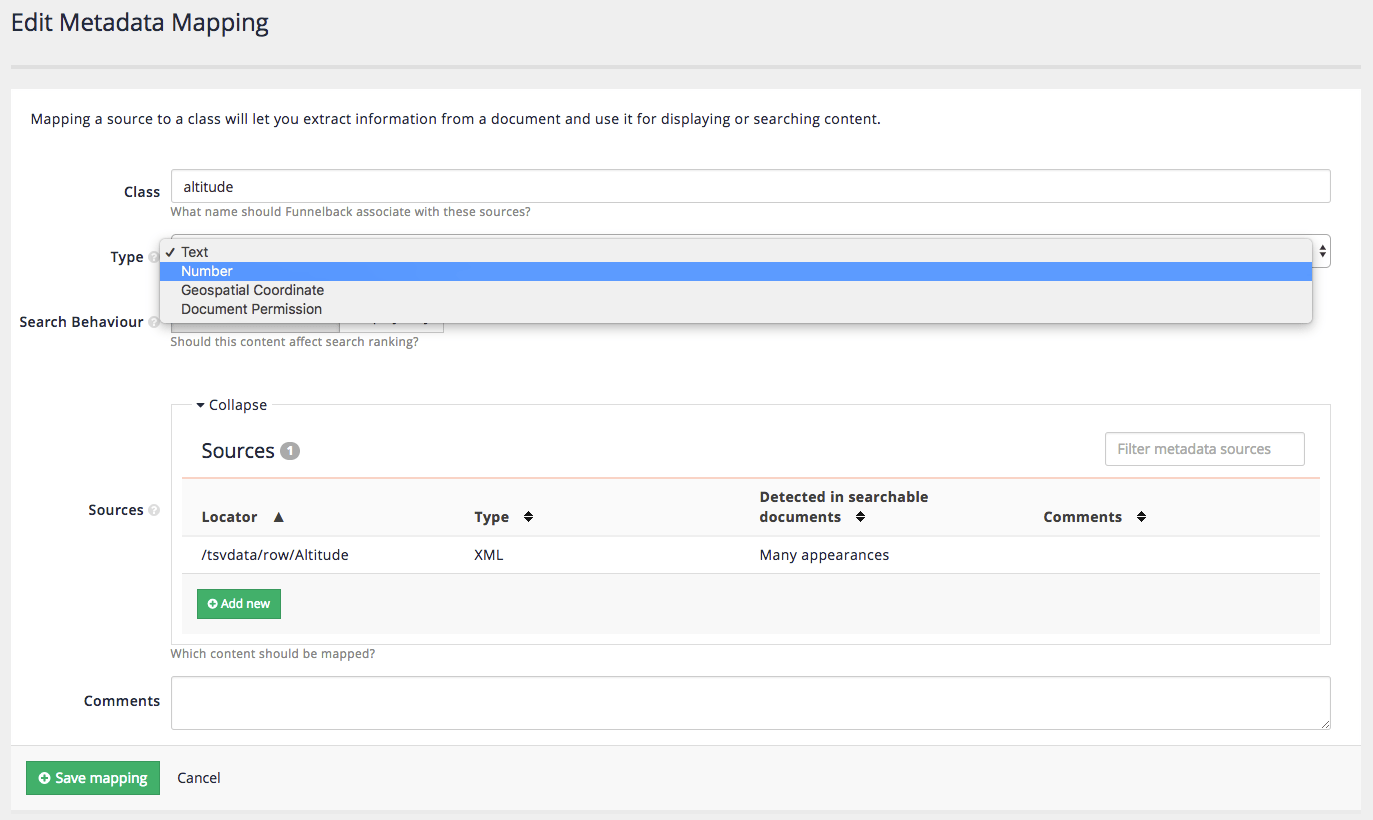

Defining a field as a number tells Funnelback to interpret the contents of the field as a number. This allows range and equality comparisons (==, !=, >=, >, <, <=) to be run against the field. Numeric metadata is only required if you wish to make use of these range comparisons. Numbers for the purpose of display in the search results should be defined as text type metadata.

| Only use geospatial and numeric values if you wish to make use of the special type-specific query operators. Be careful when selecting your class names because these will be merged with the classes from other data sources that are included in the same search package. |

Tutorial: Geospatial and numeric metadata

In this exercise we will extend the metadata that is extracted from the XML example. We will include both a geospatial metadata field and a numeric metadata field. Recall the record format for the XML data:

<row>

<Airport_ID>1</Airport_ID>

<Name>Goroka</Name>

<City>Goroka</City>

<Country>Papua New Guinea</Country>

<IATA_FAA>GKA</IATA_FAA>

<ICAO>AYGA</ICAO>

<Latitude>-6.081689</Latitude>

<Longitude>145.391881</Longitude>

<Altitude>5282</Altitude>

<Timezone>10</Timezone>

<DST>U</DST>

<TZ>Pacific/Port_Moresby</TZ>

<LATLONG>-6.081689;145.391881</LATLONG>

</row>The <LATLONG> field contains the geospatial metadata that will be associated with the item.

| when working with geospatial metadata Funnelback expects the format of the field to contain a decimal X/Y coordinate in the format above (X coordinate;Y coordinate). If the format of the field doesn’t match (for example, is delimited with a comma) or the X/Y values are supplied separately you will need to clean the XML before Funnelback indexes it (or provide an additional field in the correct format within the source data). |

The <Altitude> field will be used as the source of numeric metadata for the purpose of this exercise.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Locate the airports search package.

-

Navigate to the manage data source screen for the airports data data source.

-

Edit the metadata mappings. (Settings panel, configure metadata mappings).

-

Modify the mapping for the

<LATLONG>field to set the type as a geospatial coordinate.the <LATLONG>field was mapped previously so edit the existing entry.

-

Modify the mapping for the

<Altitude field>to be number then save the changes.the <Altitude>field was mapped previously so edit the existing entry.

-

Rebuild the index (as you have changed the metadata configuration). Reminder: Update panel, start advanced update, rebuild live index.

-

Run a search for !showall and inspect the JSON noting that

kmFromOriginelements now appear (due to the elements containing geospatial metadata).

the kmFromOriginfield returns the distance (in km) from an origin point, which is defined by passing in anoriginparameter, set to a geo-coordinate. It’s returningnullbecause we haven’t defined this. -

Return to the HTML results and add numeric constraints to the query to return only airports that are located between 2000 ft and 3000 ft: add

<_altitude=3000&ge_altitude=2000to the URL observing that the number of matching results is reduced and that altitudes of the matching results are all now between 2000 and 3000. -

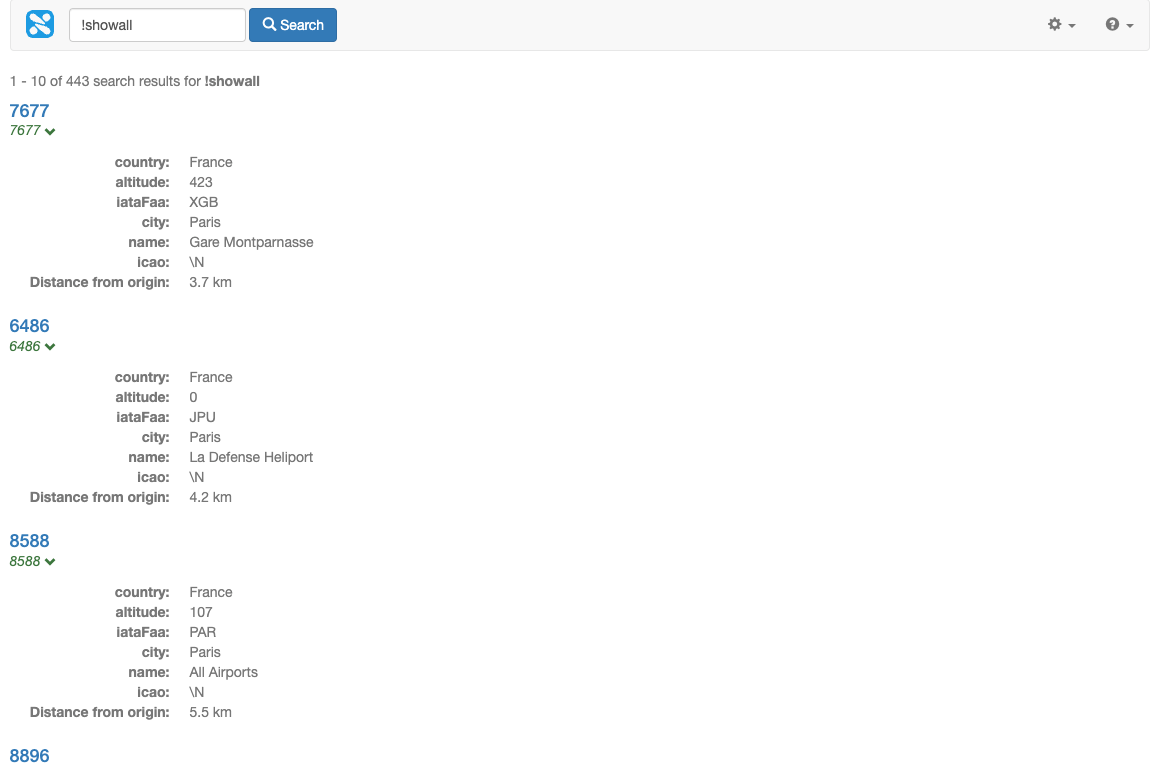

Remove the numeric parameters and add an origin parameter to the URL:

&origin=48.8588336;2.2769957(this is the lat/long value for Paris, France). Observe that thekmFromOriginfield now contains values.

-

Geospatial searches can be limited to a radius measured from the origin (in km). Supply an optional

maxdistparameter and set this to 500km, by adding&maxdist=500to the URL. Note that the number of results has dropped dramatically and are all airports within 500km of Paris.When working with geospatial search you may want to consider setting the origin value by reading the location data from your web browser (which might be based on a mobile phone’s GPS coordinates, or on IP address location). Once you’ve read this value you can pass it to Funnelback along with the other search parameters.

-

Edit the template to print out the

kmFromOriginvalue in the results. Add the following below the metadata (for example, immediately before the</dl>tag at approx line 595) that is printed in the result template:<#if s.result.kmFromOrigin??> <dt>Distance from origin:</dt><dd>${s.result.kmFromOrigin} km</dd> </#if> -

Run the !showall search again and observe the distance is now returned in the results.

-

Sort the results by proximity to the origin by adding

&sort=proxand observe that thekmFromOriginvalues are now sorted by distance (from nearest to farthest).

Change the sort to be from farthest to nearest by setting the sort to dprox (descending proximity).

|

Extended exercises: Geospatial search and numeric metadata

-

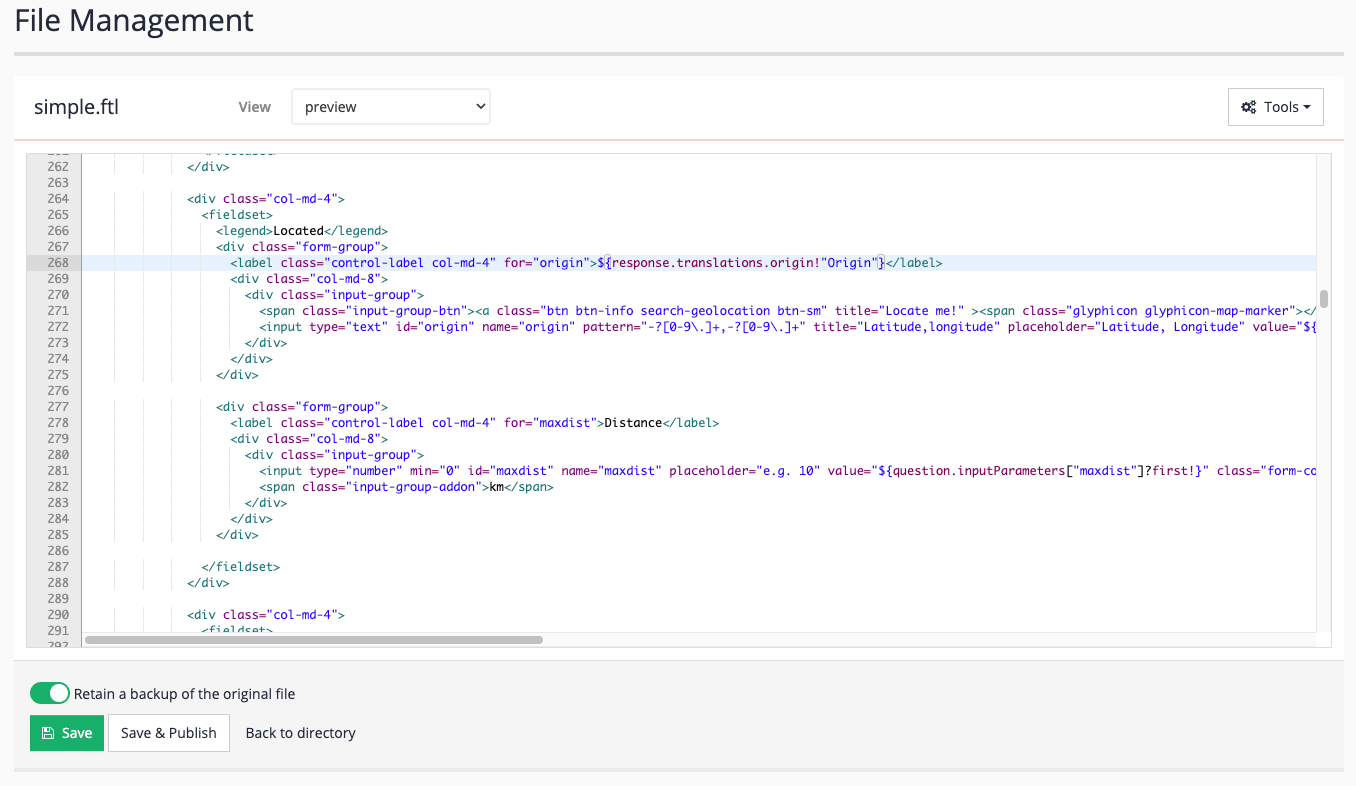

Modify the search box to include controls to set the origin using the browser’s location support and to adjust the

maxdist. Hint: examine the advanced search form for an example. -

Add sort options to sort the results by proximity to the

origin. -

Modify the search box to set the

origininside a hidden field. -

Modify the template to plot the search results onto a map. See: Using Funnelback search results to populate a map

-

Add sort options to sort the results numerically by

altitude. Observe that the sort order is numeric (1, 2, 10, 11). Update the metadata mappings so that altitude is a standard text metadata field and re-index the live view. Refresh the search results and observe the sort order is now alphabetic (1, 10, 11, 2). This distinction is important if you have a metadata field that you need to sort numerically.

8. Configuring url sets (generalized scopes)

The generalized scopes mechanism in Funnelback allows an administrator to group sets of documents that match a set of URL patterns (for example, */publications/*), or all the URLs returned by a specified query (for example, author:shakespeare).

Once defined these groupings can be used for:

-

Scoped searches (provide a search that only looks within a particular set of documents)

-

Creating additional services (providing a search service with separate templates, analytics and configuration that is limited to a particular set of documents).

-

Faceted navigation categories (Count the number of documents in the result set that match this grouping).

The patterns used to match against the URLs are Perl regular expressions allowing for very complex matching rules to be defined. If you don’t know what a regular expression is don’t worry as simple substring matching will also work.

The specified query can be anything that is definable using the Funnelback query language.

Generalized scopes are a good way of adding some structure to an index that lacks any metadata, by either making use of the URL structure, or by creating groupings based on pre-defined queries.

| Metadata should always be used in preference to generalized scopes where possible as gscopes carry a much higher maintenance overhead. |

URLs can be grouped into multiple sets by having additional patterns defined within the configuration file.

Tutorial: Configuring URL sets that match a URL pattern

The process for creating configuration for generalized scopes is very similar to that for external metadata.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Navigate to the manage data source screen for the silent films - website data source.

-

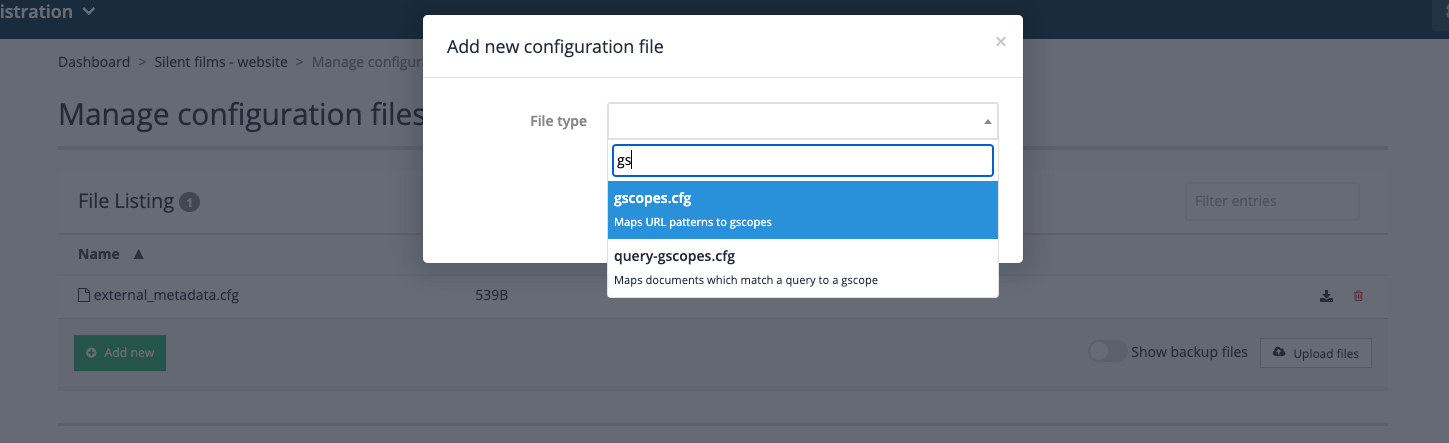

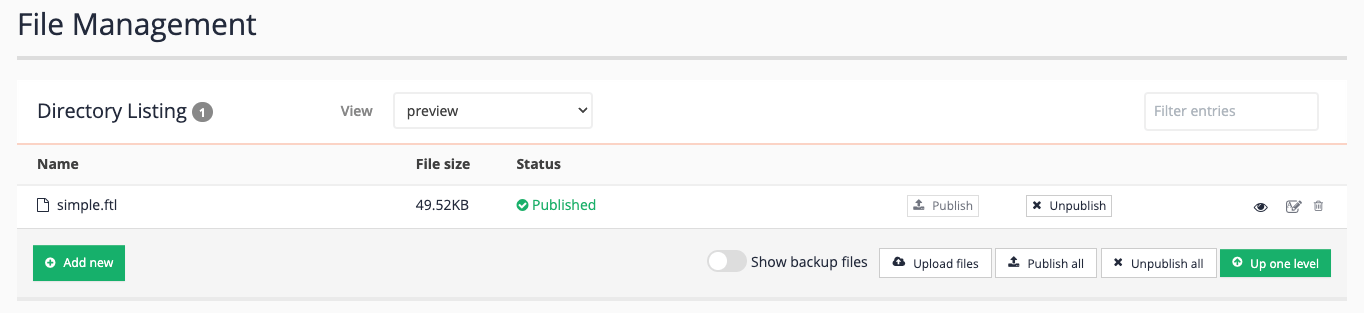

Select manage data source configuration files from the settings panel.

-

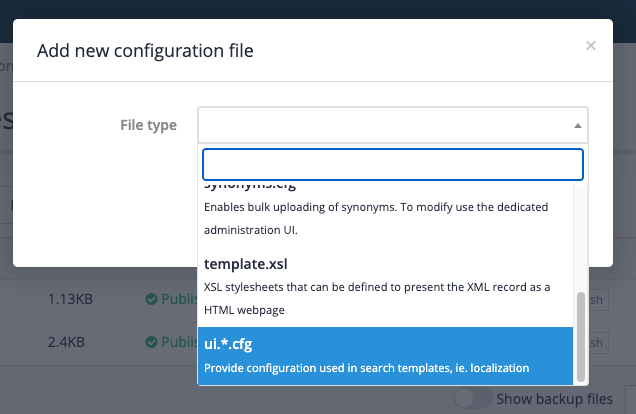

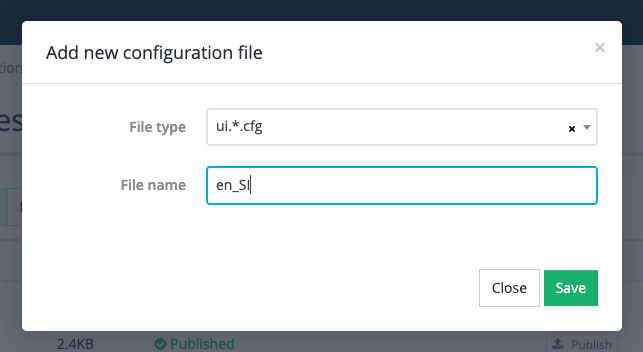

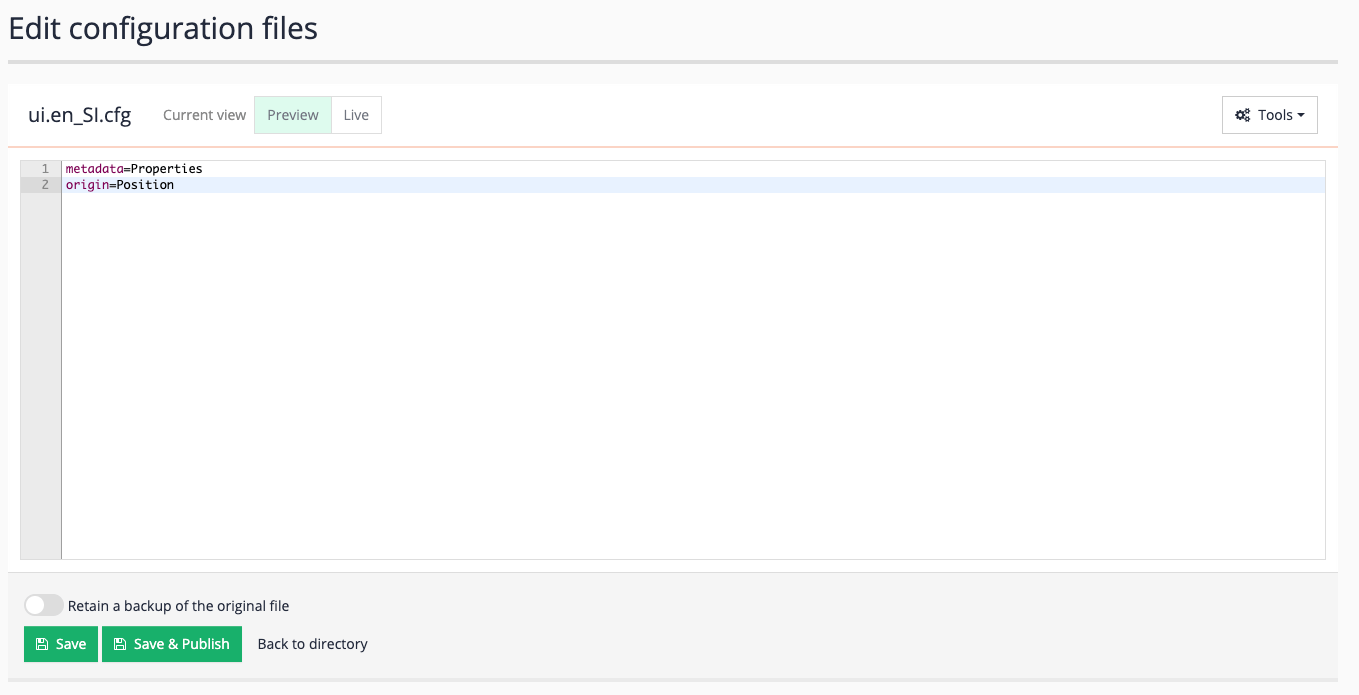

Create a

gscopes.cfgby clicking the add new button, then selectinggscopes.cfgfrom the file type menu, then clicking the save button.

-

Click on the gscopes.cfg item in the file listing. A blank file editor screen will load. We will define URL groupings that groups together a set of pages about Charlie Chaplin.

When defining gscopes there is often many different ways of achieving the same result.

The following pattern tells Funnelback to create a set of URLs with a gscope ID of

charliethat is made up of any URL containing the substring/details/CC_:charlie /details/CC_The following would probably also achieve the same result. This tells Funnelback to tag the listed URLs with a gscope ID of charlie.

the match is still a substring but this time the match is much more precise so each item is likely to only match a single URL. Observe also that it is possible to assign the same gscope ID to many patterns: charlie https://archive.org/details/CC_1916_05_15_TheFloorwalker charlie https://archive.org/details/CC_1916_07_10_TheVagabond charlie https://archive.org/details/CC_1914_03_26_CruelCruelLove charlie https://archive.org/details/CC_1914_02_02_MakingALiving charlie https://archive.org/details/CC_1914_09_07_TheRounders charlie https://archive.org/details/CC_1914_05_07_ABusyDay charlie https://archive.org/details/CC_1914_07_09_LaffingGas charlie https://archive.org/details/CC_1916_09_04_TheCount charlie https://archive.org/details/CC_1915_02_01_HisNewJob charlie https://archive.org/details/CC_1914_06_13_MabelsBusyDay charlie https://archive.org/details/CC_1914_11_07_MusicalTramps charlie https://archive.org/details/CC_1916_12_04_TheRink charlie https://archive.org/details/CC_1914_12_05_AFairExchange charlie https://archive.org/details/CC_1914_06_01_TheFatalMallet charlie https://archive.org/details/CC_1914_06_11_TheKnockout charlie https://archive.org/details/CC_1914_03_02_FilmJohnny charlie https://archive.org/details/CC_1914_04_27_CaughtinaCaberet charlie https://archive.org/details/CC_1914_10_10_TheRivalMashers charlie https://archive.org/details/CC_1914_11_09_HisTrystingPlace charlie https://archive.org/details/CC_1914_08_27_TheMasquerader charlie https://archive.org/details/CC_1916_05_27_Police charlie https://archive.org/details/CC_1916_10_02_ThePawnshop charlie https://archive.org/details/CC_1915_10_04_CharlieShanghaied charlie https://archive.org/details/CC_1916_06_12_TheFireman charlie https://archive.org/details/CC_1914_02_28_BetweenShowers charlie https://archive.org/details/CC_1918_09_29_TheBond charlie https://archive.org/details/CC_1918_xx_xx_TripleTrouble charlie https://archive.org/details/CC_1914_08_31_TheGoodforNothing charlie https://archive.org/details/CC_1914_04_20_TwentyMinutesofLove charlie https://archive.org/details/CC_1914_03_16_HisFavoritePasttime charlie https://archive.org/details/CC_1917_10_22_TheAdventurer charlie https://archive.org/details/CC_1914_06_20_CharlottEtLeMannequin charlie https://archive.org/details/CC_1917_06_17_TheImmigrant charlie https://archive.org/details/CC_1916_11_13_BehindtheScreen charlie https://archive.org/details/CC_1914_08_10_FaceOnTheBarroomFloor charlie https://archive.org/details/CC_1914_10_29_CharlottMabelAuxCourses charlie https://archive.org/details/CC_1914_10_26_DoughandDynamite charlie https://archive.org/details/CC_1914_12_07_HisPrehistoricpast charlie https://archive.org/details/CC_1914_02_09_MabelsStrangePredicament charlie https://archive.org/details/CC_1914_11_14_TilliesPuncturedRomance charlie https://archive.org/details/CC_1915_12_18_ABurlesqueOnCarmen charlie https://archive.org/details/CC_1914_08_01_CharolotGargonDeTheater charlie https://archive.org/details/CC_1917_04_16_TheCure charlie https://archive.org/details/CC_1916_08_07_One_A_M charlie https://archive.org/details/CC_1914_08_13_CharliesRecreation charlie https://archive.org/details/CC_1914_02_07_KidsAutoRaceAtVenice charlie https://archive.org/details/CC_1914_04_04_TheLandladysPetFinally, the following regular expression would also achieve the same result.

charlie archive.org/details/CC_.*$This may seem a bit confusing, but you need to keep in mind that the defined pattern can be as general or specific as you like - the trade-off is on what will match. The pattern needs to be specific enough to match the items you want but exclude those that shouldn’t be matched.

Copy and paste the following into your

gscopes.cfgand click the save button. This will set up two URL sets - the first (charlie) matching a subset of pages about Charlie Chaplin and the second (buster) matching a set of pages about Buster Keaton.charlie /details/CC_ buster archive.org/details/Cops1922 buster archive.org/details/Neighbors1920 buster archive.org/details/DayDreams1922 buster archive.org/details/OneWeek1920 buster archive.org/details/Convict13_201409 buster archive.org/details/HardLuck_201401 buster archive.org/details/ThePlayHouse1921 buster archive.org/details/College_201405 buster archive.org/details/TheScarecrow1920 buster archive.org/details/MyWifesRelations1922 buster archive.org/details/TheHighSign_201502 buster archive.org/details/CutTheGoat1921 buster archive.org/details/TheFrozenNorth1922 buster archive.org/details/BusterKeatonsThePaleface -

Rebuild the index (Select start advanced update from the update panel, then select reapply gscopes to live view and click the update button) to apply these generalized scopes to the index.

-

Confirm that the gscopes are applied. Run a search for day dreams and view the JSON/XML data model. Locate the results and observe the values of the

gscopesSetfield. Items that match one of the Buster Keaton films listed above should have a value ofbusterset.If you see gscopes set that look like FUN followed by a random string of letters and numbers, these are gscopes that are defined by Funnelback when you create faceted navigation based on queries. -

Use gscopes to scope the search. Run a search for !showeverything. Add

&gscope1=charlieto the URL and press enter. Observe that all the results are now restricted to films featuring Charlie Chaplin (and more specifically all the URLs contain/details/CC_as a substring). Change the URL to have&gscope1=busterand rerun the search. This time all the results returned should be links to films featuring Buster Keaton. Advanced scoping, combining gscopes is also possible using reverse polish notation when configuring query processor options. See the documentation above for more information.

Tutorial: Configuring URL sets that match a Funnelback query

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Navigate to the manage data source screen for the silent films - website data source.

-

Select manage data source configuration files from the settings panel.

-

Create a

query-gscopes.cfgby clicking the add new button, then selectingquery-gscopes.cfgfrom the file type menu, then clicking the save button. -

A blank file editor screen will load. We will define a URL set containing all silent movies about christmas.

The following pattern tells Funnelback to create a set of URLs with a gscope ID of

XMASthat is made up of the set of URLs returned when searching for christmas:XMAS christmasThe query is specified using Funnelback’s query language and supports any advanced operators that can be passed in via the search box.

-

Rebuild the index (Select start advanced update from the update panel, then select reapply gscopes to live view and click the update button) to apply these generalized scopes to the index.

-

Confirm that the gscopes are applied. Run a search for christmas and view the JSON/XML data model. Locate the results and observe the values of the

gscopesSetfield. The returned items should have a value ofXMASset. -

Use gscopes to scope the search. Run a search for !showeverything. Add

&gscope1=XMASto the URL and press enter. Observe that all the results are now restricted to the films about christmas. Replacegscope1=XMASwithgscope1=xmasand observe that the gscope value is case-sensitive.

Extended exercises and questions: URL sets (gscopes)

-

Redo the first gscopes exercise, but with the alternate pattern sets defined in step 4 of the exercise. Compare the results and observe that a similar result is achieved with the three different pattern sets.

-

Create a generalised scope that contains all documents where the director is Alfred Hitchcock

-

Why is using gscopes to apply keywords higher maintenance than using a metadata field?

-

Construct a reverse-polish gscope expression that includes charlie OR christmas but not buster. Hint: Gscope expressions

9. Social media data sources

Funnelback has the ability to index content from the following social media services:

-

YouTube

-

Facebook

-

Flickr

-

Twitter

-

Instagram (via the Instagram gatherer plugin)

Additional services can be added by implementing a custom gatherer plugin.

There are a number of pre-requisites that must be satisfied before social media services can be indexed. These vary depending on the type of service, but generally involve having an account, channel/service identifier and API key for access to the service.

Tutorial: Download and index a YouTube channel

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Create a new search package called Squiz. Skip the step where you are asked about adding data sources.

-

Once the search package is created scroll to the components section and click the create a data source button.

-

Create a data source with the following properties:

-

Data source type:

youtube -

Data source name:

Squiz videos -

YouTube API key:

AIzaSyDBFGGkZfR79YsdSpw3jNzfRUgsvXVrVKo -

Channel IDs?:

UC19PRS-wlngHv06TRnEQxDA -

Include channel’s liked videos?: no

-

Playlist IDs?:

PLMOOwxQHsNyl—x_Nsyooa_gFFjOI3bUR

-

-

Update the data source by selecting update data source from the update panel.

-

Inspect the metadata mappings (settings panel, configure metadata mappings) and observe that a set of YouTube specific fields are automatically mapped.

-

Return to the Squiz search package and create a new results page called Squiz video search

-

Add some display options to display the YouTube metadata. Add the following to the query processor options (customize panel, edit results page configuration):

-SF=[c,t,viewCount,likeCount,dislikeCount,duration,imageSmall]

-

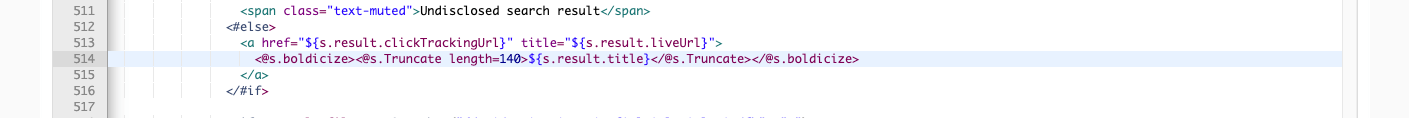

Update the search template (select edit results page templates from the templates panel). Replace the contents of the

<@s.Results>tag (approx line 495) with the following code:<#if s.result.class.simpleName == "TierBar"> <#-- A tier bar --> <#if s.result.matched != s.result.outOf> <li class="search-tier"><h3 class="text-muted">Results that match ${s.result.matched} of ${s.result.outOf} words</h3></li> <#else> <li class="search-tier"><h3 class="hidden">Fully-matching results</h3></li> </#if> <#-- Print event tier bars if they exist --> <#if s.result.eventDate??> <h2 class="fb-title">Events on ${s.result.eventDate?date}</h2> </#if> <#else> <li data-fb-result="${s.result.indexUrl}" class="result<#if !s.result.documentVisibleToUser>-undisclosed</#if> clearfix"> <h4 <#if !s.result.documentVisibleToUser>style="margin-bottom:4px"</#if>> <#if s.result.listMetadata["imageSmall"]?first??> <img class="img-thumbnail pull-left" style="margin-right:0.5em;" src="${s.result.listMetadata["imageSmall"]?first?replace("\\|.*$","","r")}" /> </#if> <#if question.currentProfileConfig.get("ui.modern.session")?boolean><a href="#" data-ng-click="toggle()" data-cart-link data-css="pushpin|remove" title="{{label}}"><small class="glyphicon glyphicon-{{css}}"></small></a></#if> <a href="${s.result.clickTrackingUrl}" title="${s.result.liveUrl}"> <@s.boldicize><@s.Truncate length=70>${s.result.title}</@s.Truncate></@s.boldicize> </a> <#if s.result.fileType!?matches("(doc|docx|ppt|pptx|rtf|xls|xlsx|xlsm|pdf)", "r")> <small class="text-muted">${s.result.fileType?upper_case} (${filesize(s.result.fileSize!0)})</small> </#if> <#if question.currentProfileConfig.get("ui.modern.session")?boolean && session?? && session.getClickHistory(s.result.indexUrl)??><small class="text-warning"><span class="glyphicon glyphicon-time"></span> <a title="Click history" href="#" class="text-warning" data-ng-click="toggleHistory()">Last visited ${prettyTime(session.getClickHistory(s.result.indexUrl).clickDate)}</a></small></#if> </h4> <p> <#if s.result.date??><small class="text-muted">${s.result.date?date?string("d MMM yyyy")}:</small></#if> <span class="search-summary"><@s.boldicize><#noautoesc>${s.result.listMetadata["c"]?first!"No description available."}</#noautoesc></@s.boldicize></span> </p> <p> <span class="glyphicon glyphicon-time"></span> ${s.result.listMetadata["duration"]?first!"N/A"} Views: ${s.result.listMetadata["viewCount"]?first!"N/A"} <span class="glyphicon glyphicon-thumbs-up"></span> ${s.result.listMetadata["likeCount"]?first!"N/A"} </p> </li> </#if> -

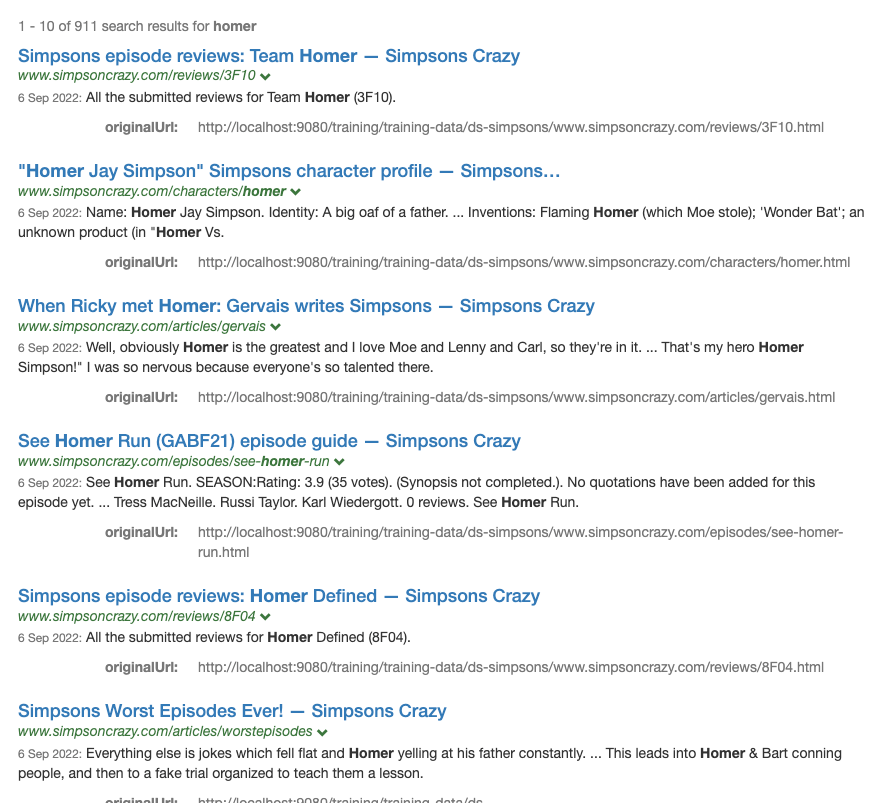

Run a search for !showall and observe the YouTube results:

Extended exercises: social media

-

Find a random YouTube channel and determine the channel ID. Add this as a second channel ID and update the collection.

-

Set up a new social media data source using one of the other templates (such as Facebook or Twitter). To do this you will need an appropriate API key for the repository and a channel to consume.

10. Introduction to Funnelback plugins

Funnelback plugins provide custom functionality that have been vetted and reviewed to be shared and reused between different search packages, data sources and results pages.

Custom functionality is implemented as one or more plugins which can be enabled by a Funnelback implementer. Plugins are a way for the community to contribute common solutions to re-occurring problems, or for highly unique solutions to be implemented for a particular problem.

10.1. Types of plugins

Plugins provide functionality that includes:

-

modification of the data model as a query is run

-

filters that can be added to a data source filter chains to modify or analyze content prior to indexing

-

custom gatherers for fetching content from unsupported data source types

Many plugins have been created and the plugin framework allows you to write additional plugins to implement additional functionality.

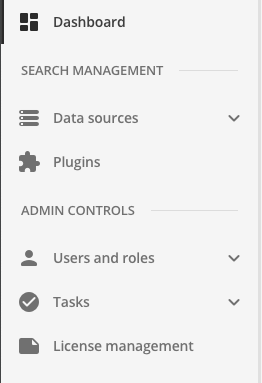

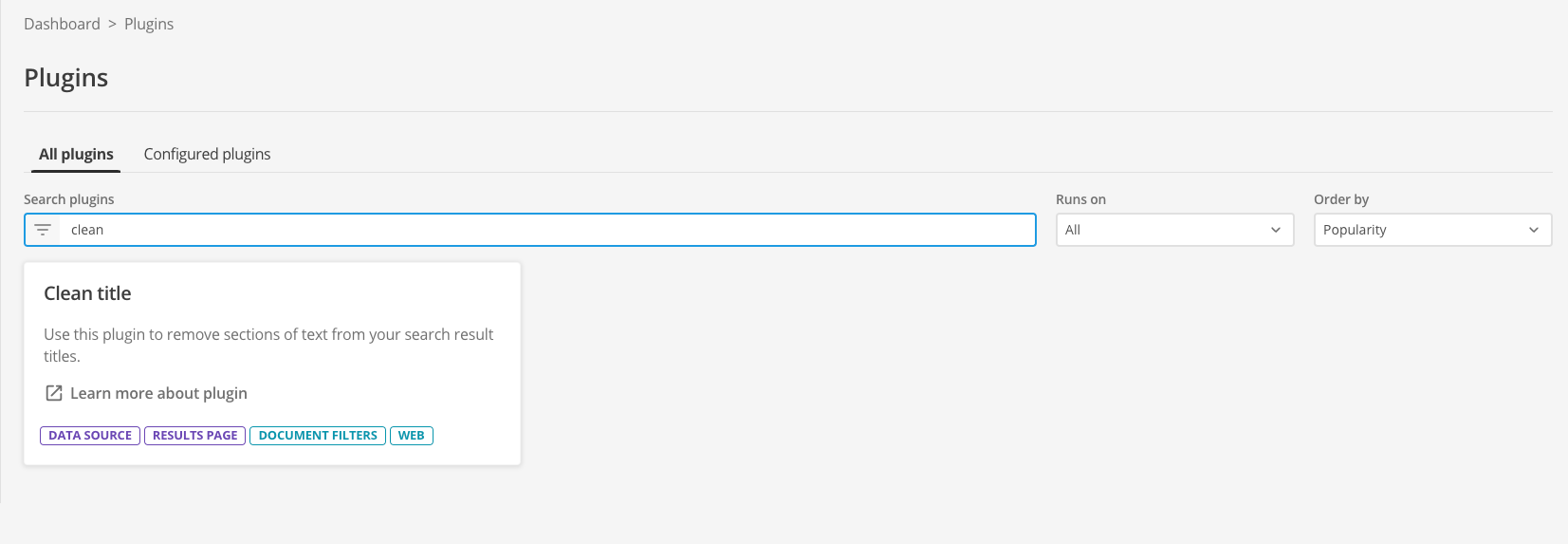

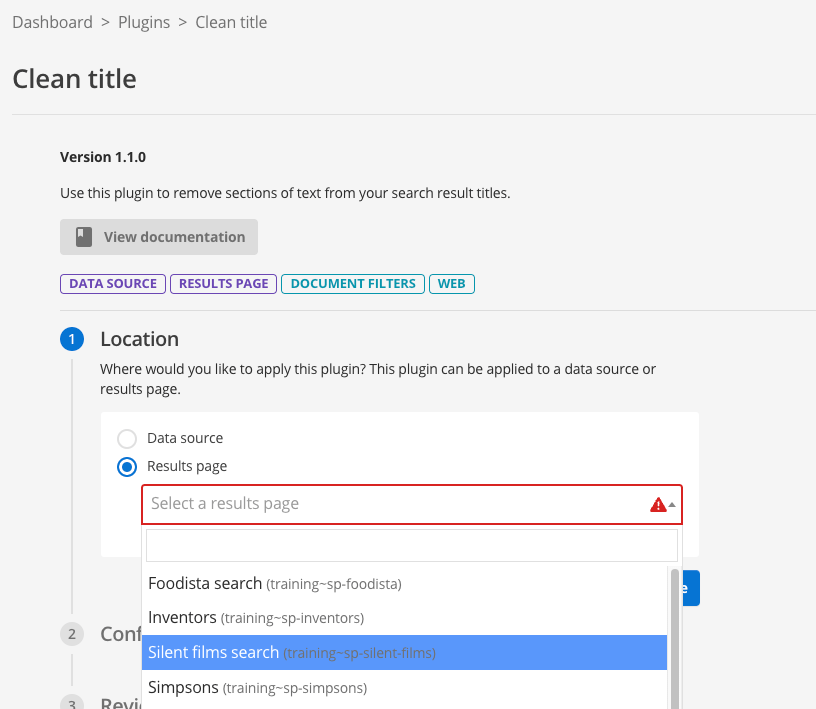

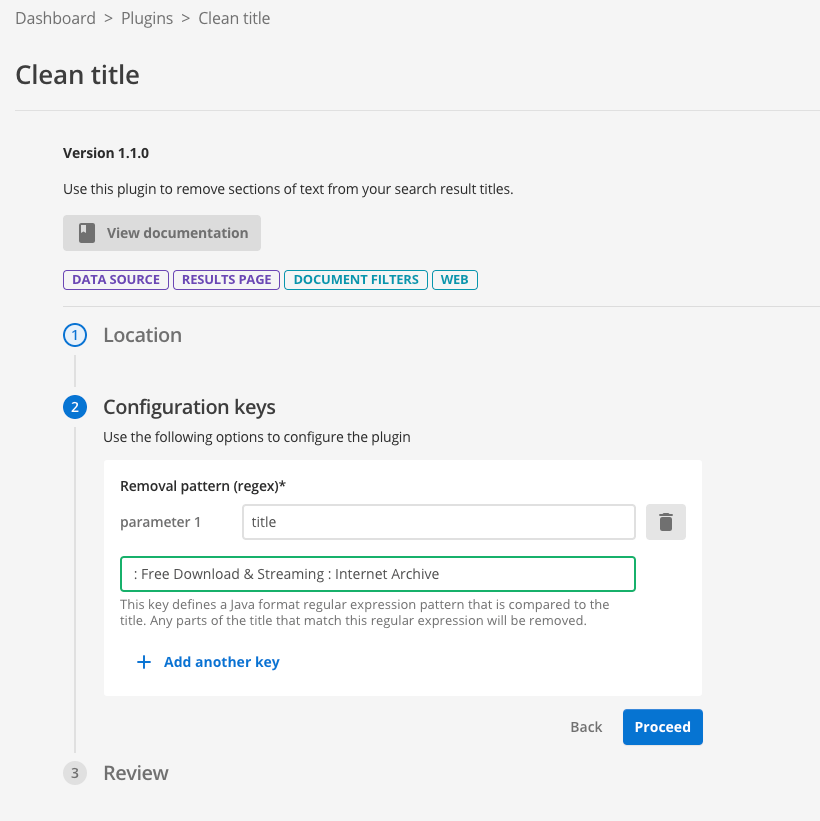

10.2. Accessing plugins

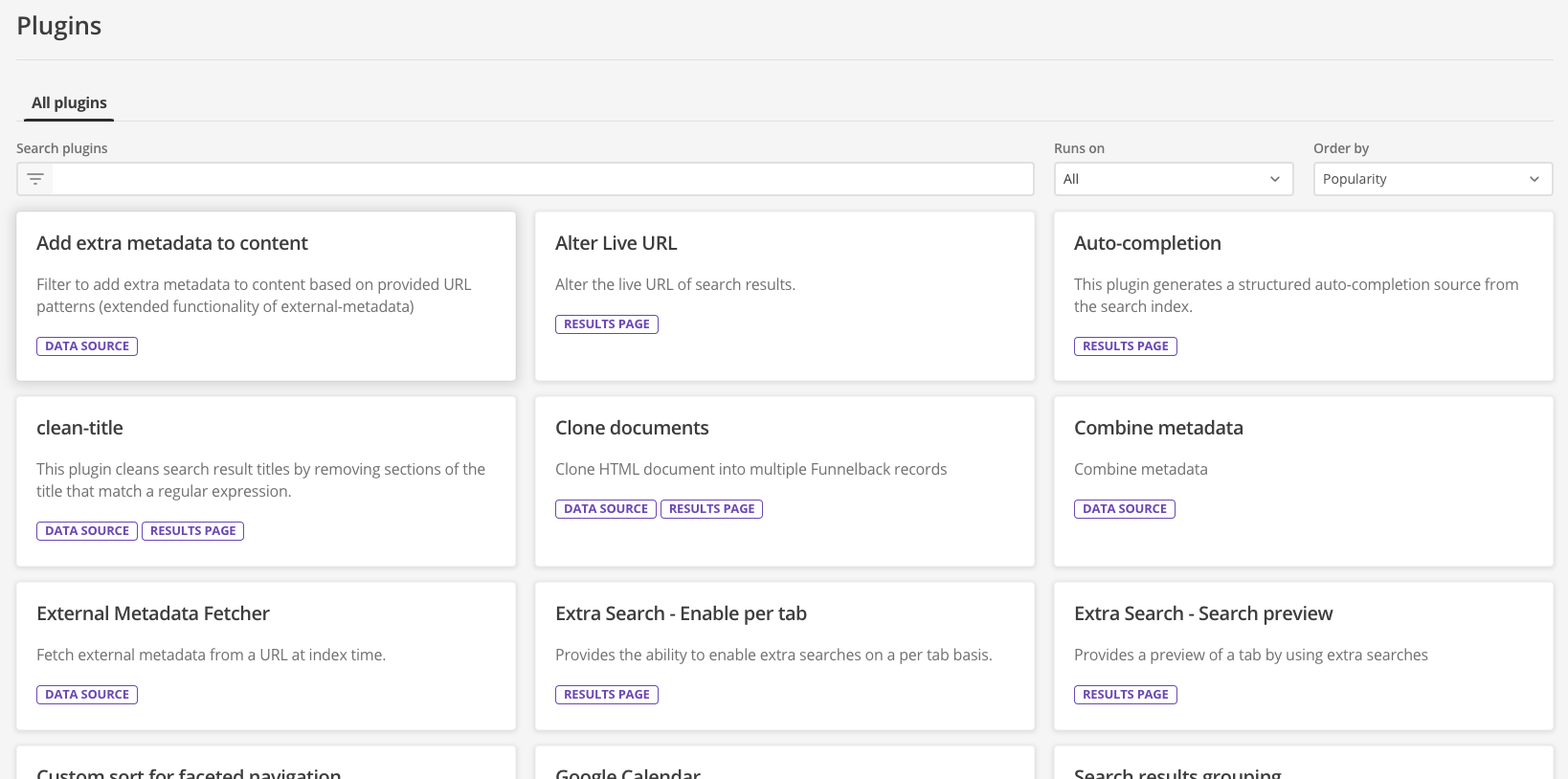

Available plugins are listed on the extensions screen, which can be accessed from the navigation panel within the search dashboard.

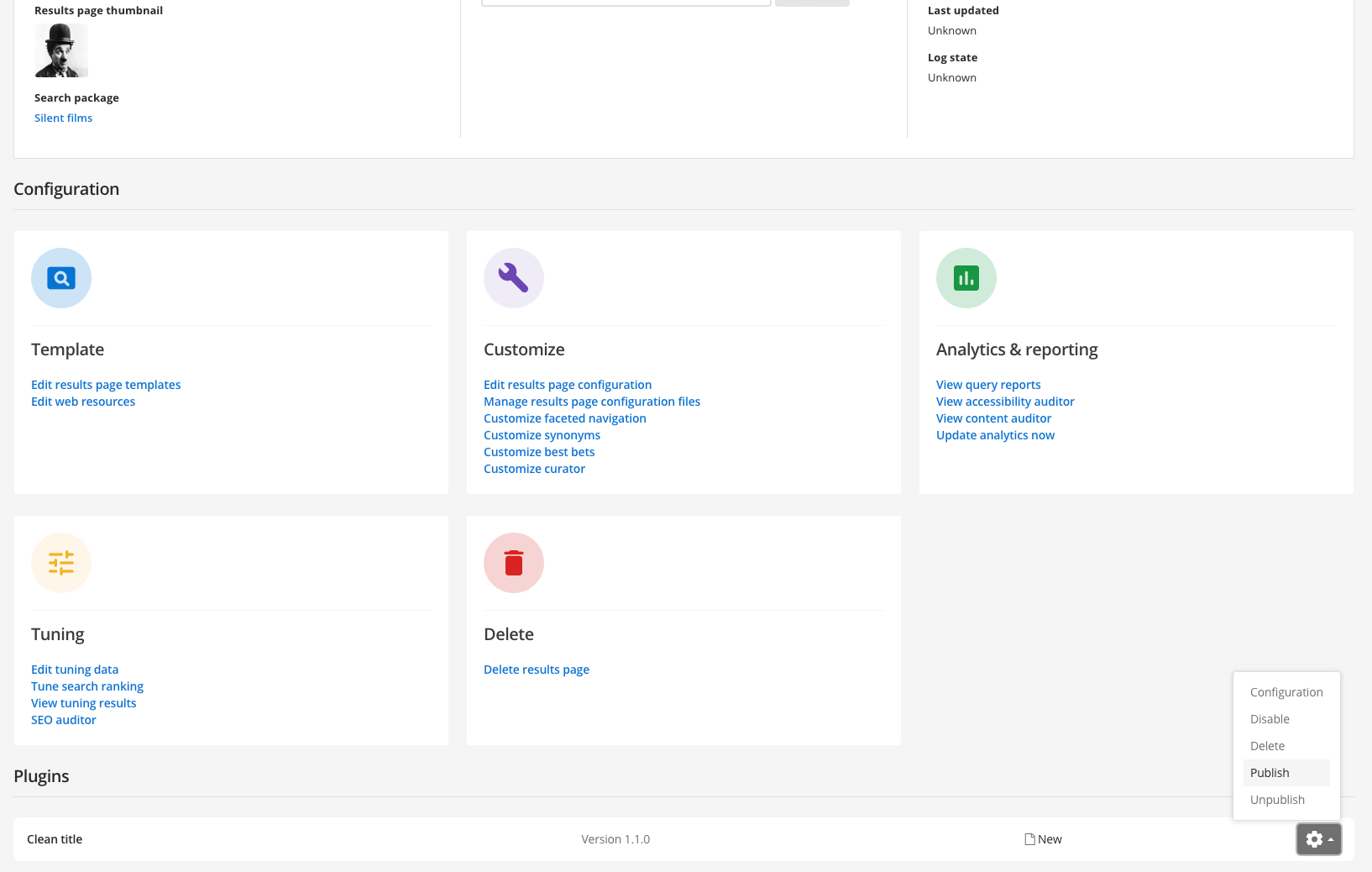

The extension screen lists all the installed plugins and provides options to view plugin information, view plugin documentation and apply the plugin.

Once applied, a plugin must be configured as outlined in the corresponding plugin documentation.

The next few sections introduce you to using plugins that are available in the plugins library. Writing of additional plugins will be covered separately.

Tutorial: Introduction to plugins

This exercise shows you where you can find and enable Funnelback plugins.

-

Log in to the search dashboard where you are doing your training.

See: Training - search dashboard access information if you’re not sure how to access the training. Ignore this step if you’re treating this as a non-interactive tutorial. -

Click the plugins item on the navigation panel.

-

This opens the plugins screen, which lists all the currently available plugins. The list of available plugins will increase as new plugins are released.

-

Have a quick scroll through the available plugins to get a feel for the different types of plugins and how they extend the available functionality.

-

Type facet into the search plugins box and observe that the tiles update to only display plugins relating to faceted navigation.

-

Clear the search box and then choose data source from the runs on menu. Observe that the listed plugins are now filtered to include only those that can be applied to a data source.

-

Change the sort order to be reverse alphabetic by selecting name Z-A from the order by menu.

Popularity is based on how often a plugin is used on your Funnelback instance. For the training VM the display will look like an alphabetic sort because plugins are not actually in use on your training environment.

11. Manipulating search result content

Funnelback offers a number of options for manipulating the content of search results.

There are three main places where search result content can be manipulated. Which one to choose will depend on how the modifications need to affect the results. The options are (in order of difficulty to implement):

-

Modify the content as it is being displayed to the end user.

-

Modify the content after it is returned from the indexes, but before it is displayed to the end user.