Update workflow

| This feature is not available in the Squiz DXP. |

| This feature is deprecated and will be removed in a future version. Please update any existing implementations to use supported features. |

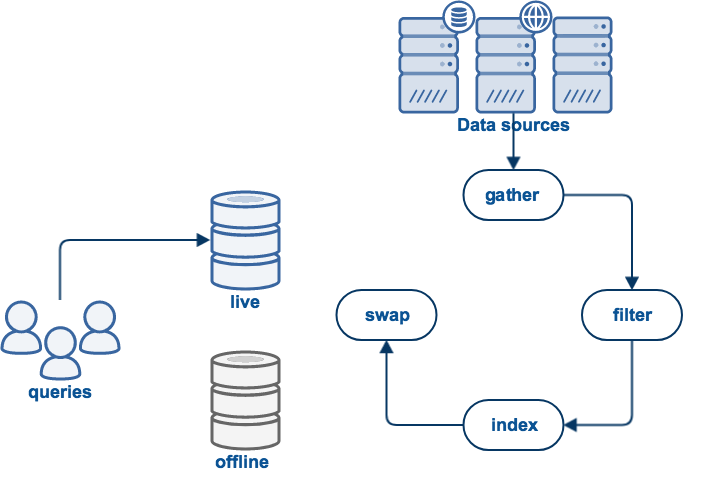

Funnelback provides a workflow mechanism that allows a series of commands to be executed before and after each update phase that occurs during an update.

This provides a great deal of flexibility and allows additional tasks to be performed as part of the update.

These commands can be used to pull in external content, connect to external APIs, manipulate the content or index, or generate output.

Effective use of workflow commands requires a good understanding of the Funnelback update cycle and what happens at each stage of the update. It also requires an understanding of how Funnelback is structured as many of the workflow commands operate directly on the file system.

Each phase in the update has a corresponding pre phase and post phase command. If any workflow command returns a non-zero exit code, the update process will stop immediately.
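Because a non-zero exit code stops the update, a workflow script can fail deliberately to keep bad input out of the index. The following is a minimal sketch only: the function name and the file path in the usage comment are hypothetical and not part of Funnelback.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given file exists and is non-empty.
# A workflow script that exits non-zero causes the update to stop.
require_non_empty() {
    if [ ! -s "$1" ]; then
        echo "workflow check failed: $1 is missing or empty" >&2
        return 1
    fi
    return 0
}

# Possible use at the end of a workflow script (path is illustrative):
#   require_non_empty /tmp/seed-list.txt || exit 1
```

Placing the check last in the script means the script's exit code reflects whether the input was usable.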

The exact commands that are available will depend on the type of collection.

| Workflow is not supported when using push collections. |

Common workflow commands

The most commonly used workflow commands are:

Pre gather

This command runs immediately before the gather process begins. Example uses:

- Pre-authenticate and download a session cookie to pass to the web crawler.
- Download an externally generated seed list.

Specified in collection.cfg using pre_gather_command=<COMMAND>.

Pre index

This command runs immediately before indexing starts. Example uses:

- Download and validate external metadata from an external source.
- Connect to an external REST API to fetch XML content.
- Download and convert a CSV file to XML for a local collection.

Specified in collection.cfg using pre_index_command=<COMMAND>.
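The CSV to XML conversion mentioned above could be sketched roughly as follows. This is an illustration under stated assumptions, not a Funnelback utility: the function name is hypothetical, the CSV is assumed to have a header row with names that are valid XML element names, and fields are assumed to contain no quoted or embedded commas.

```shell
#!/bin/sh
# Convert a simple CSV (header row, no quoted or embedded commas) read from
# stdin into XML written to stdout. Element names come from the header row.
csv_to_xml() {
    awk -F',' '
        # Escape the characters that are special in XML text content.
        function esc(s) {
            gsub(/&/, "\\&amp;", s)
            gsub(/</, "\\&lt;", s)
            gsub(/>/, "\\&gt;", s)
            return s
        }
        NR == 1 { for (i = 1; i <= NF; i++) name[i] = $i; print "<records>"; next }
        {
            print "  <record>"
            for (i = 1; i <= NF; i++)
                printf "    <%s>%s</%s>\n", name[i], esc($i), name[i]
            print "  </record>"
        }
        END { if (NR > 0) print "</records>" }
    '
}
```

A script run by the pre index command could then call something like csv_to_xml < input.csv > output.xml, with both file names chosen to suit the collection.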

Post index

This command runs immediately after indexing completes. Example uses:

- Generate a structured auto-completion CSV file from metadata within the search index and apply this back to the index.

Specified in collection.cfg using post_index_command=<COMMAND>.

Post swap

This command runs immediately after the indexes are swapped. Example uses:

- Publish index files to a multi-server environment.

Specified in collection.cfg using post_swap_command=<COMMAND>.

Post update

This command runs immediately after the update is completed. Example uses:

- Perform post update cleanup operations such as deleting any temporary files generated by earlier workflow commands.

Specified in collection.cfg using post_update_command=<COMMAND>.

The post update command is treated differently to the other workflow commands:

- The command is run in the background; and
- The command’s exit value is ignored. This means that if the post update command fails, the update will still be marked as successful.
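Since the exit value is ignored, a post update script may want to record its own outcome somewhere it can be checked later. A minimal cleanup sketch, assuming a hypothetical workflow-tmp- file naming convention and a log file location chosen by the administrator (neither is defined by Funnelback):

```shell
#!/bin/sh
# Hypothetical cleanup helper for a post update command.
# Removes files named workflow-tmp-* (an illustrative convention) from the
# given folder and appends the outcome to a log file, because the update
# itself will not report a failure of this command.
cleanup_workflow_temp() {
    dir=$1
    log=$2
    if rm -f "$dir"/workflow-tmp-* 2>>"$log"; then
        echo "cleanup ok: $dir" >>"$log"
    else
        echo "cleanup FAILED: $dir" >>"$log"
        return 1
    fi
}
```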

Specifying workflow commands

Workflow commands are specified in the collection’s main configuration file (collection.cfg).

Each workflow command has a corresponding collection.cfg option of the form pre_<phase>_command=<command> or post_<phase>_command=<command>. For example: pre_gather_command, post_index_command, post_update_command.

Each of these collection.cfg options takes a single value, which is the command to run.

The workflow command can be one or more system commands. System commands are commands that can be executed on the command line. These commands operate on the underlying filesystem and will run as the user that runs the Funnelback service (usually the search user).

| Care needs to be exercised when using workflow commands as it is possible to execute destructive code. |

The command that is specified in collection.cfg can contain the following collection.cfg variables, which should generally be used to assist with collection maintenance:

- $SEARCH_HOME: this expands to the home folder for the installation (for example, /opt/funnelback).
- $COLLECTION_NAME: this expands to the collection’s ID.
- $CURRENT_VIEW: this expands to the current view (live or offline) depending on which phase is currently running and the type of update. This is particularly useful for commands that operate on the gather and index phases as the $CURRENT_VIEW can change depending on the type of update that is running. If you run a re-index of the live view, $CURRENT_VIEW is set to live for all the phases. For other update types, $CURRENT_VIEW is set to offline for all workflow commands before a swap takes place. The view being queried on a search query can be specified by setting the view CGI parameter (for example, &view=offline).
- $GROOVY_COMMAND: this expands to the binary path for running groovy on the command line.

If multiple commands are required for a single workflow step, it is best practice to create a bash script that runs all the commands. To use the collection.cfg variables above within the script, pass them to the script as parameters.
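As a rough sketch of this pattern, the script below receives the three variables as positional parameters and derives the data folder for the view being updated. The script name prepare.sh and the function name are hypothetical. The folder layout follows the /opt/funnelback/data/my-collection/offline/data path used in the example later on this page.

```shell
#!/bin/sh
# Hypothetical workflow script. Assumed collection.cfg entry (illustrative):
#   pre_index_command=/opt/funnelback/custom/prepare.sh $SEARCH_HOME $COLLECTION_NAME $CURRENT_VIEW

# Build the data folder path for a collection view from the three parameters.
view_data_dir() {
    search_home=$1
    collection=$2
    view=$3
    case "$view" in
        live|offline) ;;
        *) echo "unexpected view: $view" >&2; return 1 ;;
    esac
    echo "$search_home/data/$collection/$view/data"
}

# In the script body the positional parameters would be passed straight through:
#   data_dir=$(view_data_dir "$1" "$2" "$3") || exit 1
#   cd "$data_dir" || exit 1
```

Deriving the path from $CURRENT_VIEW rather than hard-coding offline keeps the same script usable when re-indexing the live view.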

Advanced workflow commands

The workflow commands mentioned above should be enough to meet the needs of most Funnelback administrators. However, there are several more update 'phases' which can have workflow commands applied if absolutely necessary. These include several phases that are specific to instant updates: instant-gather, instant-index, delete-prefix, delete-list. So the following configuration parameters may also be added:

- post_gather_command: Run immediately after the update’s gather phase completes. Similar to the pre_index_command but not executed when the collection is re-indexed, or an advanced update is started from the indexing phase.
- pre_archive_command: Run immediately before the update’s archive phase commences. The archive phase manages archiving of the collection’s query and click logs.
- post_archive_command: Run immediately after the update’s archive phase completes. The archive phase manages archiving of the collection’s query and click logs.
- pre_reporting_command: Run immediately before analytics reports are built.
- post_reporting_command: Run immediately after analytics reports are built.
- pre_meta_dependencies_command: Run immediately before the update’s meta dependencies phase commences. The meta dependencies phase handles any required updates (such as auto-completion and spelling suggestions) for any meta collections of which the updating collection is a member.
- post_meta_dependencies_command: Run immediately after the update’s meta dependencies phase completes. The meta dependencies phase handles any required updates (such as auto-completion and spelling suggestions) for any meta collections of which the updating collection is a member.
- pre_swap_command: Run immediately before the collection’s indexes are swapped.
- post_collection_create_command: Run immediately after the collection is created.
- pre_collection_delete_command: Run immediately before the collection is permanently deleted.

Instant update workflow commands

The following workflow commands apply only to instant updates, and replace equivalent workflow commands for a normal update.

- pre_instant-gather_command: Used in place of pre_gather_command. Run immediately before the instant update’s gather phase commences.
- post_instant-gather_command: Used in place of post_gather_command. Run immediately after the instant update’s gather phase completes.
- pre_instant-index_command: Used in place of pre_index_command. Run immediately before the instant update’s index phase commences.
- post_instant-index_command: Used in place of post_index_command. Run immediately after the instant update’s index phase completes.
- pre_delete-prefix_command: Run immediately before running an instant delete (remove sites).
- post_delete-prefix_command: Run immediately after running an instant delete (remove sites).
- pre_delete-list_command: Run immediately before running an instant delete (remove list of URLs).
- post_delete-list_command: Run immediately after running an instant delete (remove list of URLs).

Example: download a cookie for use with the crawler

Download a copy of the cookie generated when visiting http://www.example.com:

    pre_gather_command=curl -k -c cookies.txt http://www.example.com

Example: generate data

In this example, you have XML data residing in a file called /tmp/data.zip. Since this data is periodically updated you want to extract the latest data before indexing:

    pre_index_command=sh ~/bin/extract-data.sh

The extract-data shell script could be:

    #!/bin/sh
    # stop with a non-zero exit code (halting the update) if the folder is missing
    cd /opt/funnelback/data/my-collection/offline/data || exit 1
    # cleanup
    rm -rf unzipped
    mkdir unzipped
    # extract the files from the archive...
    cd unzipped && unzip /tmp/data.zip

Example: processing log files

If the update is successful, the swap process archives the query logs in /opt/funnelback/data/COLLECTION-ID/archive. To process these log files, you could start a process at the very end of each update:

    post_update_command=/temp/analyse-logs.sh -collection shakespeare

Substituting values

In order to avoid repeating values, it is possible to pass the value of another collection.cfg parameter to a workflow command script. This is supported with the standard shell ${variable_name} syntax, as in the following example.

    pre_index_command=/opt/funnelback/custom/process_crawled_data.sh ${collection_type}

Assuming collection_type is defined in the config file as web, this causes the following expansion when running the pre index step:

    /opt/funnelback/custom/process_crawled_data.sh web
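Inside the script, the substituted value arrives as an ordinary positional parameter. The following sketch shows one way a script such as process_crawled_data.sh might branch on it. The function name and messages are hypothetical, and the actual contents of that script are not described here.

```shell
#!/bin/sh
# Hypothetical body for a script that receives the expanded
# ${collection_type} value as its first positional parameter.
describe_collection_type() {
    case "$1" in
        web) echo "processing crawled web content" ;;
        "")  echo "no collection type supplied" >&2; return 1 ;;
        *)   echo "processing $1 content" ;;
    esac
}

# Possible use in the script body:
#   describe_collection_type "$1" || exit 1
```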

Also, please note that the special value $SEARCH_HOME is automatically available to substitute the installation location of Funnelback, even though it is not normally defined in a collection.cfg file.

You can also avoid hard-coding the Groovy binary and class paths when using Groovy scripts, as in the following example.

    post_index_command=$GROOVY_COMMAND my_command.groovy

The default value for $GROOVY_COMMAND is defined in executables.cfg.